Client Goal

OrioleDB is a new storage engine built atop PostgreSQL with the aim of delivering improved scalability and modern storage models. Because the engine is still in alpha and production guidance is limited, the OrioleDB team needed objective evidence showing how it performs compared with vanilla PostgreSQL. The core goal of this benchmarking engagement was to collect transparent, repeatable performance data across realistic workloads so that OrioleDB developers could:

- Quantify performance gains and trade‑offs relative to PostgreSQL.

- Identify bottlenecks that limit throughput or stability under load.

- Provide credible proof points for prospective users and investors.

Why OrioleDB Chose PFLB

The OrioleDB team sought an independent partner with a proven track record in impartial performance testing. PFLB's published case studies show how its benchmark testing services deliver neutral, repeatable results and clear insights, qualities that made PFLB a natural fit. Key reasons for selecting PFLB included:

Scope of Work

To evaluate OrioleDB’s performance against PostgreSQL, PFLB engineers planned and executed synthetic benchmarks using two widely adopted tools:

- TPC‑C simulation via BenchBase – This workload models a mixed transactional system with New‑Order, Payment, Order‑Status, Delivery, and Stock‑Level transactions. It stresses both read and write paths and is a widely used standard benchmark for OLTP relational databases.

- pgBench tests – Two scenarios were executed: a read‑only workload (SELECT queries) and a mixed read‑write workload (INSERT + UPDATE + SELECT). These micro‑benchmarks help isolate query‑processing performance.
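The TPC‑C mix can be pictured as a weighted random choice made for every request a worker issues. The Python sketch below assumes weights of 45/43/4/4/4 percent for New‑Order, Payment, Order‑Status, Delivery, and Stock‑Level, following the mix commonly used for TPC‑C runs; the weights configured in this engagement are not stated here, and `TPCC_MIX` and `next_transactions` are hypothetical names.

```python
import random
from collections import Counter

# Assumed transaction weights (percent), modeled on the usual TPC-C mix.
# These are illustrative values, not taken from this project's configuration.
TPCC_MIX = {
    "NewOrder": 45,
    "Payment": 43,
    "OrderStatus": 4,
    "Delivery": 4,
    "StockLevel": 4,
}


def next_transactions(n: int, seed: int = 0) -> list[str]:
    """Draw n transaction types in proportion to the TPCC_MIX weights."""
    rng = random.Random(seed)  # seeded so the sequence is repeatable
    names = list(TPCC_MIX)
    weights = list(TPCC_MIX.values())
    return rng.choices(names, weights=weights, k=n)


if __name__ == "__main__":
    total = 100_000
    counts = Counter(next_transactions(total))
    for name, count in counts.most_common():
        print(f"{name:12s} {count / total:6.1%}")
```

Because writes (New‑Order, Payment, Delivery) dominate the assumed mix, a TPC‑C run exercises the write path far more heavily than the read-only pgBench scenario does.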

Both databases were deployed on identical virtual machines with 4‑core AMD EPYC processors, 64 GB RAM, and 200 GB of storage. Equal hardware configuration ensured that performance differences stemmed from the database engines rather than the environment.

Our Benchmark Testing Methodology

Testing Environment Setup

We set up a consistent, cloud‑based infrastructure on virtual machines to ensure fair comparisons. Each DBMS instance used the same operating system, storage, and network settings. Monitoring agents collected system‑level metrics (CPU usage, RAM consumption, I/O throughput) and Docker‑specific metrics when applicable.

Tools Used

- BenchBase – a multi‑threaded load generator capable of producing variable rates and transactional mixes. It records per‑transaction latency and throughput.

- pgBench – the benchmarking tool bundled with PostgreSQL, which repeatedly runs built‑in or custom transaction scripts and reports transactions per second (TPS) along with average latency.
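As a rough illustration of the two pgBench scenarios, the sketch below builds command lines using pgbench's built-in scripts: `select-only` for the read-only run and `tpcb-like` (UPDATE, SELECT, and INSERT statements) for the mixed run. The client count, thread count, progress interval, and database name `bench` are assumptions for illustration, not the settings used in this project; the database would first be populated with `pgbench -i -s <scale>`.

```python
import shlex


def pgbench_argv(mode: str, clients: int, threads: int,
                 duration_s: int = 600, dbname: str = "bench") -> list[str]:
    """Build a pgbench argument list for a read-only or read-write run.

    dbname, clients, and threads are hypothetical values chosen by the caller.
    """
    scripts = {
        "read-only": "select-only",  # built-in SELECT-only script
        "read-write": "tpcb-like",   # built-in UPDATE/SELECT/INSERT script
    }
    if mode not in scripts:
        raise ValueError(f"unknown mode: {mode!r}")
    return [
        "pgbench",
        "-b", scripts[mode],
        "-c", str(clients),     # concurrent client sessions
        "-j", str(threads),     # pgbench worker threads
        "-T", str(duration_s),  # run length in seconds (600 s = 10 minutes)
        "-P", "60",             # print a progress report every 60 seconds
        dbname,
    ]


# prints: pgbench -b select-only -c 32 -j 4 -T 600 -P 60 bench
print(shlex.join(pgbench_argv("read-only", clients=32, threads=4)))
```

Returning an argument list rather than a single shell string means it can be passed straight to `subprocess.run` without quoting concerns.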

Test Execution

- TPC‑C tests ran for 10 minutes each after the initial data load. Each scenario was repeated four to five times, in line with PFLB's standard practice of running multiple iterations, to confirm that results were consistent across runs.

- pgBench tests also ran for 10 minutes, with separate runs for read‑only and read‑write scripts. Each test emulated multiple concurrent sessions.
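Repeating each scenario four to five times is most useful when the run-to-run spread is reported alongside the average. A minimal sketch, assuming per-run TPS values have already been extracted from the tool output; the function name and the sample numbers are hypothetical:

```python
from statistics import mean, stdev


def summarize_runs(tps_per_run: list[float]) -> dict[str, float]:
    """Summarize the TPS values from repeated runs of a single scenario."""
    if len(tps_per_run) < 2:
        # stdev() needs at least two data points
        raise ValueError("need at least two runs to estimate run-to-run spread")
    avg = mean(tps_per_run)
    sd = stdev(tps_per_run)  # sample standard deviation (n - 1 denominator)
    return {
        "mean_tps": avg,
        "stdev_tps": sd,
        "cv_pct": 100.0 * sd / avg,  # coefficient of variation, in percent
    }


# Hypothetical TPS values from four iterations of one scenario
print(summarize_runs([1000.0, 1010.0, 990.0, 1000.0]))
```

For these illustrative values the mean is 1000 TPS and the coefficient of variation is about 0.82%; a much larger CV would suggest noisy runs worth investigating before comparing engines.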

During the tests, PFLB engineers closely monitored CPU, memory, disk I/O, and TPS metrics for both systems. Our team also collected detailed query‑level statistics from pg_stat_statements to highlight long‑running SQL statements.
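A query along the following lines can surface the statements that dominate execution time in pg_stat_statements. The sketch assumes PostgreSQL 13 or later (where the columns are named `total_exec_time` and `mean_exec_time`) and that rows have already been fetched as dictionaries by whatever driver is in use; `TOP_STATEMENTS_SQL` and `time_share` are hypothetical names.

```python
# Column names assume PostgreSQL 13+; older releases use total_time / mean_time.
# pg_stat_statements must be listed in shared_preload_libraries and created
# with CREATE EXTENSION pg_stat_statements; in the benchmark database.
TOP_STATEMENTS_SQL = """
SELECT query, calls, total_exec_time, mean_exec_time
FROM pg_stat_statements
ORDER BY total_exec_time DESC
LIMIT 20;
"""


def time_share(rows: list[dict], top_n: int = 5) -> list[tuple[str, float]]:
    """Return (query, percent of execution time) for the top_n statements.

    The percentage is relative to the total execution time of the rows passed
    in, so with the LIMIT above it is a share of the top 20 statements only.
    """
    total = sum(r["total_exec_time"] for r in rows)
    if total == 0:
        return []
    ranked = sorted(rows, key=lambda r: r["total_exec_time"], reverse=True)
    return [(r["query"], 100.0 * r["total_exec_time"] / total) for r in ranked[:top_n]]
```

Ranking by total rather than mean execution time highlights statements that are individually fast but called so often that they dominate the workload.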

Reporting & Conclusions

Evaluation Focus

The reporting phase was designed to document and structure observations gathered during benchmarking, without interpreting results or prioritizing outcomes. The goal was to clearly capture how each database behaves across different operational dimensions under controlled, repeatable conditions.

The evaluation focused on the following aspects:

- Stability and recovery behavior

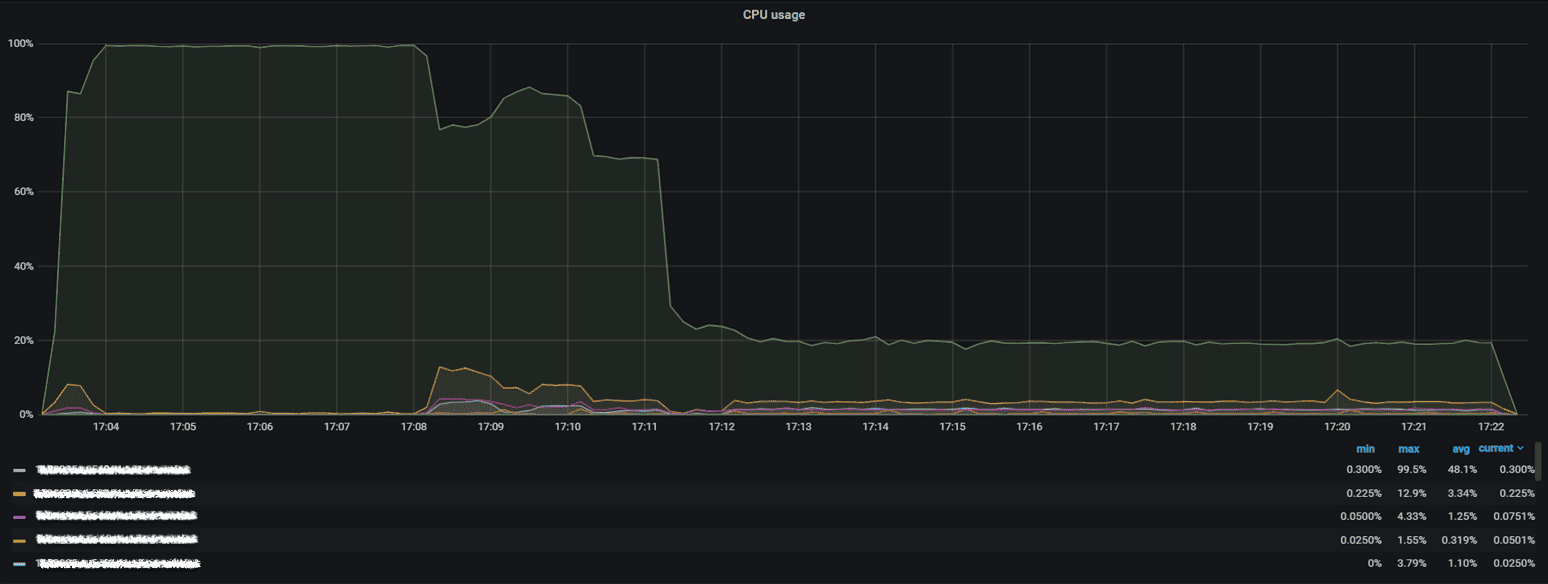

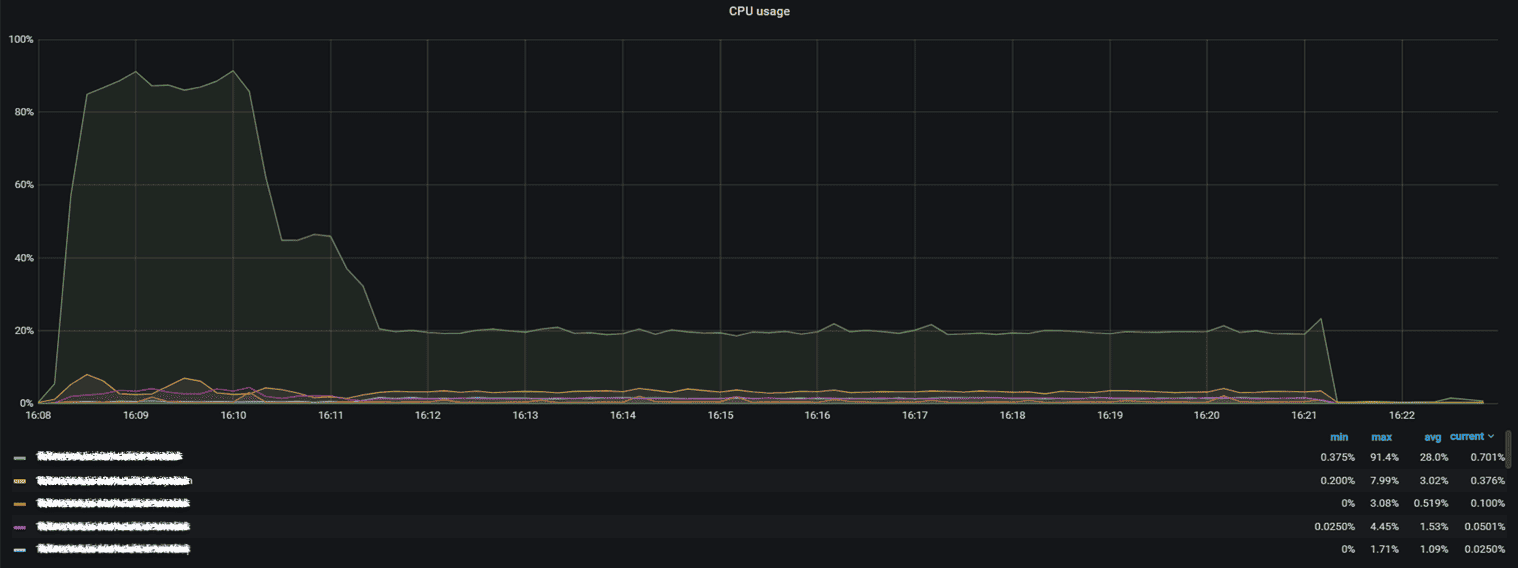

The assessment included observing how each database responds to sustained load, error conditions, and recovery scenarios. This covered system responsiveness during stress, restart behavior, and recovery workflows triggered during benchmarking.

- CPU utilization during critical phases

CPU usage was monitored throughout the tests, with particular attention to periods of intensive activity such as initial data population and sustained transactional execution. This allowed for analysis of how workload phases affect processor utilization patterns.

- Database initialization and load processes

The scope included tracking the time and system behavior associated with populating benchmark datasets. Bulk data-loading operations were examined to understand how each database handles large-scale write activity during setup.

- Transaction throughput after initialization

Once datasets were fully loaded, transaction processing was observed under steady-state conditions. This made it possible to analyze how each system behaves during continuous transactional workloads independent of initialization overhead.

- Read-only and mixed workload execution

Separate benchmarking scenarios were used to examine read-only workloads and mixed read/write workloads. This distinction allowed for analysis of system behavior across different access patterns commonly seen in production environments.
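Separating initialization from steady-state execution largely comes down to splitting the monitoring timeline at the point where data loading finished. A minimal sketch, assuming each sample carries a timestamp, host CPU utilization, and the TPS reported for that interval; the `Sample` type, `phase_summary`, and the single `load_end_s` boundary are hypothetical:

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Sample:
    t_s: float      # seconds since the scenario started
    cpu_pct: float  # host CPU utilization for the interval, 0-100
    tps: float      # transactions/second for the interval (0 while loading)


def phase_summary(samples: list[Sample], load_end_s: float) -> dict[str, dict[str, float]]:
    """Average CPU and TPS separately for the load phase and the steady-state phase."""
    phases = {
        "load": [s for s in samples if s.t_s < load_end_s],
        "steady": [s for s in samples if s.t_s >= load_end_s],
    }
    summary: dict[str, dict[str, float]] = {}
    for name, group in phases.items():
        if not group:  # skip a phase that has no samples
            continue
        summary[name] = {
            "avg_cpu_pct": mean(s.cpu_pct for s in group),
            "peak_cpu_pct": max(s.cpu_pct for s in group),
            "avg_tps": mean(s.tps for s in group),
        }
    return summary
```

Keeping the load phase out of the steady-state averages prevents bulk-loading CPU spikes from distorting the throughput comparison.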

Comprehensive Monitoring

Throughout all test scenarios, PFLB collected detailed monitoring data, including CPU and memory utilization, load averages, context switches, and disk I/O activity. These metrics were captured consistently across both systems and across all benchmark phases to provide a complete operational view of system behavior.
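On Linux hosts, CPU utilization and context-switch rates can be derived from two snapshots of `/proc/stat` taken a known interval apart. The sketch below is an assumption about how such metrics might be computed, not a description of PFLB's monitoring agents; it treats idle plus iowait time as not busy and skips the guest columns, which the kernel already counts inside user and nice time.

```python
def parse_proc_stat(text: str) -> tuple[list[int], int]:
    """Return (aggregate CPU jiffies user..steal, context-switch count) from /proc/stat text."""
    cpu, ctxt = None, None
    for line in text.splitlines():
        fields = line.split()
        if not fields:
            continue
        if fields[0] == "cpu":
            # user nice system idle iowait irq softirq steal; the guest fields
            # that follow are already included in user/nice, so skip them.
            cpu = [int(v) for v in fields[1:9]]
        elif fields[0] == "ctxt":
            ctxt = int(fields[1])
    if cpu is None or ctxt is None:
        raise ValueError("missing 'cpu' or 'ctxt' line")
    return cpu, ctxt


def interval_stats(before: str, after: str, interval_s: float) -> dict[str, float]:
    """CPU busy percentage and context switches per second between two snapshots."""
    cpu0, ctxt0 = parse_proc_stat(before)
    cpu1, ctxt1 = parse_proc_stat(after)
    deltas = [b - a for a, b in zip(cpu0, cpu1)]
    total = sum(deltas)
    not_busy = deltas[3] + deltas[4]  # idle + iowait
    busy_pct = 100.0 * (total - not_busy) / total if total else 0.0
    return {
        "cpu_busy_pct": busy_pct,
        "ctx_switches_per_s": (ctxt1 - ctxt0) / interval_s,
    }
```

In practice, `before` and `after` would come from reading `/proc/stat` with `time.sleep(interval_s)` in between, while `os.getloadavg()` returns the 1-, 5-, and 15-minute load averages on Unix systems.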

Unified Reporting

All collected metrics were consolidated into unified reports and comparison tables. These reports summarized transaction throughput, query execution characteristics, and resource utilization trends across test scenarios. The reporting structure was designed to support transparent analysis and enable stakeholders to independently review system behavior, configurations, and execution contexts without embedded interpretation or bias.
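A comparison table of this kind can be reduced to a side-by-side listing with a relative difference column. A minimal sketch with hypothetical metric names and values (no results from this engagement are reproduced here); a positive difference means OrioleDB's value is higher, which is better for throughput but worse for latency or CPU usage:

```python
def compare(metrics: dict[str, tuple[float, float]]) -> str:
    """Render a plain-text table of (PostgreSQL, OrioleDB) values with % difference."""
    header = f"{'metric':<24}{'PostgreSQL':>12}{'OrioleDB':>12}{'diff %':>9}"
    lines = [header, "-" * len(header)]
    for name, (pg, oriole) in metrics.items():
        # Relative difference versus the PostgreSQL baseline; NaN if the baseline is 0
        diff = 100.0 * (oriole - pg) / pg if pg else float("nan")
        lines.append(f"{name:<24}{pg:>12.1f}{oriole:>12.1f}{diff:>+9.1f}")
    return "\n".join(lines)


# Hypothetical values for illustration only
print(compare({"tps_read_only": (1000.0, 1200.0), "avg_cpu_pct": (50.0, 45.0)}))
```

Using PostgreSQL as the fixed baseline keeps every row's sign convention the same, so readers only need to know whether higher or lower is better for each metric.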

Client Benefits & the PFLB Advantage

Partnering with PFLB provided the OrioleDB team with a credible, data‑driven assessment of their storage engine’s performance. By leveraging our impartial methodology and transparent reporting, OrioleDB gained:

- Clear performance benchmarks against PostgreSQL across multiple workloads.

- Identification of bottlenecks that affect reliability and write throughput, informing future engineering priorities.

- Evidence of strengths, notably better read performance, that can be used in marketing and investor communications.

- Actionable recommendations for configuration tuning and further testing, based on PFLB’s extensive performance‑engineering expertise.

Much like PFLB’s Chainguard Docker container case study, where the client received unbiased performance data to inform product decisions, this benchmarking project empowered OrioleDB to iterate confidently. With transparent results and clear next steps, the OrioleDB team can continue improving their engine while articulating its advantages to the broader PostgreSQL community.