Comparative Load Testing of a DBMS for SAP BW in Retail

No one disputes that sales analysis is critically important in retail. It makes it possible to extract potential ways to increase sales volumes from an ocean of current data. The faster this analysis runs, the more successful the retailer will be in the market.

PFLB already had extensive experience in SAP load testing, so the client invited our company to take part in the project.

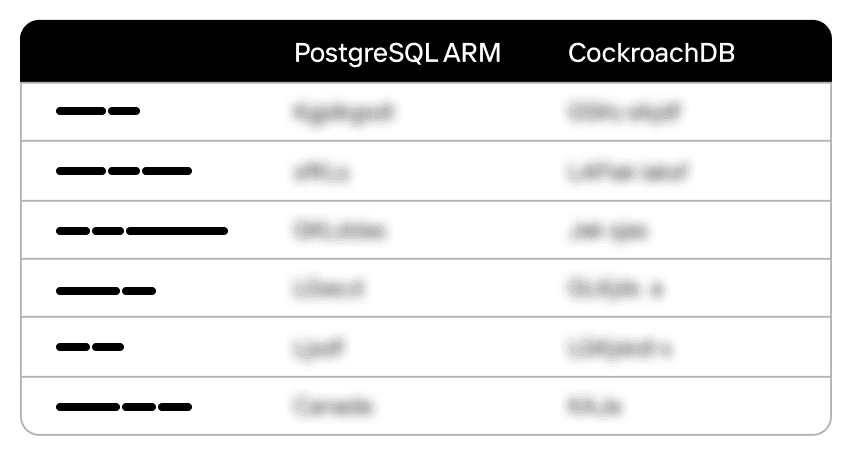

We performed comparative load testing of the SAP BW platform on three database configurations.

Our task was to identify the configuration with the best performance so that the client could weigh our results against its budget and choose the most suitable technology.

Since tens of millions of dollars were potentially at stake, we were keenly aware of our responsibility.

Project Features

Comparing high-tech hardware and software solutions that include servers, storage systems, network equipment, specialized software, and proprietary know-how is not as simple as it seems at first glance.

One complication is caching: database management systems have rather large caches, and some have multi-tiered caches. After the first test run, the data is cached in memory, so a second run with unchanged startup parameters would read only from the cache.
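The caching effect can be illustrated with a toy model. The sketch below assumes a single least-recently-used buffer cache, which is a deliberate simplification (real DBMS caches, and in-memory engines in particular, are far more complex); `LRUCache`, `run_test`, and `flush` are hypothetical names. It shows that a repeated run with unchanged parameters measures the cache rather than the storage path, so the system has to be returned to the same starting state before each run for results to be comparable.

```python
from __future__ import annotations

from collections import OrderedDict


class LRUCache:
    """Toy buffer cache with least-recently-used eviction (a simplification)."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.blocks: OrderedDict[int, None] = OrderedDict()

    def read(self, block_id: int) -> bool:
        """Return True on a cache hit, False if the block had to be read from disk."""
        if block_id in self.blocks:
            self.blocks.move_to_end(block_id)  # mark as most recently used
            return True
        self.blocks[block_id] = None
        if len(self.blocks) > self.capacity:
            self.blocks.popitem(last=False)  # evict the least recently used block
        return False

    def flush(self) -> None:
        """Drop all cached blocks, i.e. return to a 'cold' starting state."""
        self.blocks.clear()


def run_test(cache: LRUCache, workload: list[int]) -> float:
    """Execute one test run and return its cache hit ratio."""
    hits = sum(cache.read(block) for block in workload)
    return hits / len(workload)


workload = list(range(1_000))     # hypothetical report scanning 1,000 blocks
cache = LRUCache(capacity=2_000)  # cache large enough to hold the whole data set

first_run = run_test(cache, workload)   # cold cache: every block comes from disk
second_run = run_test(cache, workload)  # warm cache: every block is already in memory
cache.flush()
third_run = run_test(cache, workload)   # cold again after the reset
print(first_run, second_run, third_run)  # 0.0 1.0 0.0
```

On a real test bench, the counterpart of `flush()` might be a DBMS restart or a restore of the test environment; which reset is appropriate depends on the product and is an assumption here.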

It became clear that the tests had to be reproducible and that we would need to recreate a load identical to the one on the production server. Since the test benches could not work with real data, the customer developed its own methodology.

Step One: Create a Methodology

The customer’s methodology included several requirements for the project.

Requirement 1: The frequency of launching reports must be proportional to the frequency in the real system.

To satisfy this requirement, six months of report generation statistics were analyzed, and the most time-intensive reports were selected. After all, a large organization has not dozens but hundreds of reports, and their execution time depends on the launch conditions.

There’s a subtlety here: some reports are launched rarely but take hours to generate, while others are generated in a minute but launched thousands of times a day by different users. Therefore, report generation intensity was measured as the total time spent executing a report over six months, regardless of its launch parameters.
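A minimal sketch of this selection rule, assuming the six-month statistics are available as one record per report launch. `ReportRun`, `rank_by_total_time`, `launches_per_hour`, the report names, and the 80% coverage cut-off are all hypothetical; the source only states that the most time-intensive reports were chosen by total execution time. The sample data is invented to show how a rarely launched but slow report can outrank a fast, frequently launched one, and how test launch rates can stay proportional to real ones.

```python
from __future__ import annotations

from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class ReportRun:
    report: str     # hypothetical report identifier
    seconds: float  # execution time of a single launch


def rank_by_total_time(runs: list[ReportRun], coverage: float = 0.8) -> list[tuple[str, float]]:
    """Rank reports by total execution time, ignoring launch parameters,
    and keep the smallest top set that accounts for `coverage` of all time."""
    totals: dict[str, float] = defaultdict(float)
    for run in runs:
        totals[run.report] += run.seconds
    ranked = sorted(totals.items(), key=lambda item: item[1], reverse=True)
    target = coverage * sum(totals.values())
    selected: list[tuple[str, float]] = []
    covered = 0.0
    for name, total in ranked:
        if covered >= target:
            break
        selected.append((name, total))
        covered += total
    return selected


def launches_per_hour(runs: list[ReportRun], names: set[str], period_hours: float) -> dict[str, float]:
    """Launch rate for each selected report, proportional to its real launch count."""
    counts = Counter(run.report for run in runs if run.report in names)
    return {name: counts[name] / period_hours for name in names}


# Invented sample: one rare but slow report, one fast but frequent report, one minor report.
runs = (
    [ReportRun("sales_by_store", 3_600.0)] * 2
    + [ReportRun("stock_levels", 60.0)] * 100
    + [ReportRun("promo_check", 10.0)] * 5
)
selected = rank_by_total_time(runs)
rates = launches_per_hour(runs, {name for name, _ in selected}, period_hours=100.0)
print(selected)  # [('sales_by_store', 7200.0), ('stock_levels', 6000.0)]
```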

Requirement 2: The model must replicate the business day.

The customer’s typical business day was divided into two parts: users interacting with the system during the daytime and regular background tasks running at night. So, we created two profiles: a day profile and a night profile.
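The split into two profiles can be expressed as a simple time-window schedule. This is a hypothetical sketch: the 08:00 and 20:00 boundaries, the `LoadProfile` type, and `active_profile` are assumptions for illustration, not the customer's actual schedule.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LoadProfile:
    name: str
    start_hour: int  # inclusive, 0-23
    end_hour: int    # exclusive; a window may wrap past midnight
    workload: str    # what the profile drives

    def covers(self, hour: int) -> bool:
        if self.start_hour < self.end_hour:
            return self.start_hour <= hour < self.end_hour
        return hour >= self.start_hour or hour < self.end_hour  # wraps midnight


# Hypothetical boundaries chosen for illustration only.
DAY = LoadProfile("day", 8, 20, "interactive user reports")
NIGHT = LoadProfile("night", 20, 8, "scheduled background jobs")


def active_profile(hour: int) -> LoadProfile:
    """Return the profile that should drive the load at the given hour."""
    for profile in (DAY, NIGHT):
        if profile.covers(hour):
            return profile
    raise ValueError(f"no profile covers hour {hour}")


for hour in (3, 9, 21):
    print(hour, active_profile(hour).name)  # 3 night / 9 day / 21 night
```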

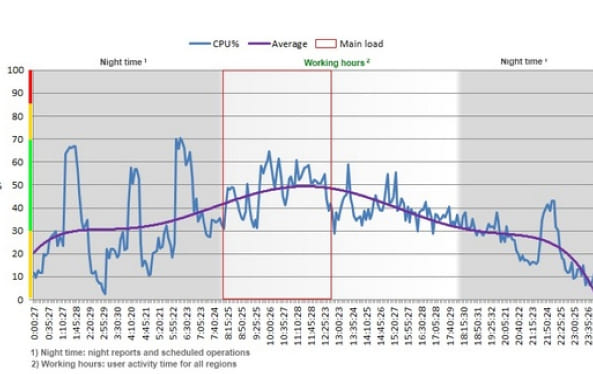

For clarity, I’ll show a real graph of the CPU load from one of the application servers. It shows the behavior during both daytime user activity and nighttime background tasks:

To make the picture as accurate as possible, a background load, or “noise,” was created in addition to the main profiles, because other operations also run on the server. This made it possible to imitate a comparable overall load.
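One way to model the background “noise” is to overlay a small random load on a profile's target rates. The sketch below assumes Gaussian noise whose mean is a fixed share of the profile's mean rate; `add_background_noise`, `noise_share`, the 25% spread, and the sample rates are hypothetical choices. A fixed random seed keeps the noise identical from run to run, which preserves the reproducibility the methodology requires.

```python
import random


def add_background_noise(base_rates: list[float], noise_share: float, seed: int = 42) -> list[float]:
    """Overlay a random background load on a profile's target rates.

    base_rates: operations per interval from the main profile (hypothetical units).
    noise_share: mean noise level as a fraction of the profile's mean rate (an assumption).
    """
    rng = random.Random(seed)  # fixed seed: the same "noise" on every test run
    noise_mean = noise_share * sum(base_rates) / len(base_rates)
    noisy = []
    for rate in base_rates:
        noise = max(0.0, rng.gauss(noise_mean, noise_mean * 0.25))  # clip negatives
        noisy.append(rate + noise)
    return noisy


day_profile = [10.0, 40.0, 60.0, 55.0, 20.0]  # invented per-interval target rates
print(add_background_noise(day_profile, noise_share=0.1))
```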

The daytime and background profiles were built from statistics for the busiest days of the month.
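Building a profile from the busiest days might look like the following sketch, which ranks days by total load and averages the hourly curves of the top few. The load metric, the day labels, `peak_day_profile`, and the `top_k` value are assumptions; the source does not say how many days were used or how they were combined.

```python
def peak_day_profile(hourly_load: dict[str, list[float]], top_k: int = 3) -> list[float]:
    """Average the 24-hour load curves of the `top_k` busiest days.

    hourly_load maps a day label to 24 hourly values of some load metric
    (hypothetical, e.g. report launches or CPU-seconds per hour).
    """
    if any(len(curve) != 24 for curve in hourly_load.values()):
        raise ValueError("each day needs exactly 24 hourly values")
    busiest = sorted(hourly_load, key=lambda day: sum(hourly_load[day]), reverse=True)[:top_k]
    return [sum(hourly_load[day][hour] for day in busiest) / len(busiest) for hour in range(24)]


month = {  # invented, flat curves to keep the example readable
    "day_01": [1.0] * 24,
    "day_02": [3.0] * 24,
    "day_03": [5.0] * 24,
}
profile = peak_day_profile(month, top_k=2)
print(profile[:3])  # [4.0, 4.0, 4.0]
```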

Step Two: Project Implementation

The project had to do more than show how the system would behave: we also needed to test the scalability of each candidate solution. For this, our team developed special load tests with increased user activity, as well as profiles with data volumes increased by factors of two, three, and five.
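The scaling runs can be organized as a test matrix. This sketch assumes a full factorial combination of data-volume factors (the x2, x3, and x5 from the text, plus a baseline) and user-activity multipliers; the user multipliers, `build_scenarios`, the scenario naming, and the base rates are hypothetical, since the source does not give the exact combinations that were run.

```python
from itertools import product

DATA_FACTORS = (1, 2, 3, 5)     # baseline plus data volumes x2, x3, x5
USER_FACTORS = (1.0, 1.5, 2.0)  # hypothetical user-activity multipliers


def build_scenarios(base_rates: dict[str, float]) -> list[dict]:
    """Full factorial matrix of data-volume and user-activity scaling."""
    scenarios = []
    for data_factor, user_factor in product(DATA_FACTORS, USER_FACTORS):
        scenarios.append({
            "name": f"data_x{data_factor}_users_x{user_factor}",
            "data_factor": data_factor,  # how much to grow the test data set
            "rates": {report: rate * user_factor for report, rate in base_rates.items()},
        })
    return scenarios


scenarios = build_scenarios({"stock_levels": 1.0, "sales_by_store": 0.02})
print(len(scenarios))         # 12
print(scenarios[-1]["name"])  # data_x5_users_x2.0
```

A full matrix grows quickly; in practice one might run only the diagonal or a subset, which is a judgment call the source leaves open.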

Moreover, it was important not only to compare quantitative indicators but also to assess qualitative characteristics. So, in addition to measuring the response time, we captured load profiles for the CPU, I/O, LAN, etc., from all infrastructure components and performed a qualitative analysis and interpretation of the data.
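Reading response times alongside resource utilization is what makes a qualitative interpretation possible. The sketch below is one simple heuristic, assuming utilization samples in percent per resource: it flags the resource with the highest 90th-percentile utilization as a likely bottleneck. The nearest-rank percentile, the 80% threshold, the sample data, and the function names are assumptions, not the method used in the project.

```python
from __future__ import annotations

import math


def percentile(values: list[float], p: float) -> float:
    """Nearest-rank percentile for 0 < p <= 100."""
    ordered = sorted(values)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def likely_bottleneck(utilization: dict[str, list[float]], threshold: float = 80.0) -> str | None:
    """Name the resource with the highest 90th-percentile utilization (%),
    or None if no resource reaches the (assumed) saturation threshold."""
    p90 = {resource: percentile(series, 90) for resource, series in utilization.items()}
    worst = max(p90, key=p90.get)
    return worst if p90[worst] >= threshold else None


response_times = [1.2, 0.8, 3.5, 1.1, 0.9, 7.0, 1.0, 1.3, 0.7, 1.5]  # seconds, invented
utilization = {
    "cpu": [45.0, 50.0, 60.0, 55.0],
    "io": [85.0, 92.0, 95.0, 88.0],
    "lan": [10.0, 12.0, 15.0, 11.0],
}
print(percentile(response_times, 90))  # 3.5
print(likely_bottleneck(utilization))  # io
```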

Of course, several difficulties did arise during the implementation of the project.

Despite these difficulties, the pilot project slipped by only one week, thanks to solid teamwork.

Step Three: Analysis of the Results

Overall, the project showed a fourfold increase in performance and lower data storage requirements thanks to compression and other technologies. Some user tasks ran 10–20 times faster, while others ran several times slower (something marketing articles rarely mention).
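Large per-task accelerations, several-fold slowdowns, and a moderate overall gain can all coexist, because an overall figure weighted by total execution time is dominated by the reports that consume the most time. The arithmetic below illustrates this with invented numbers (they are not the project's measurements), using the same total-time weighting as the report selection; the function names are hypothetical.

```python
def per_report_speedup(before: dict[str, float], after: dict[str, float]) -> dict[str, float]:
    """Speedup per report: >1 means faster on the new configuration, <1 means slower."""
    return {name: before[name] / after[name] for name in before}


def overall_speedup(before: dict[str, float], after: dict[str, float]) -> float:
    """Speedup of the whole workload, weighted by total execution time."""
    return sum(before.values()) / sum(after.values())


# Invented totals (seconds), NOT the project's measurements.
before = {"report_a": 1_000.0, "report_b": 500.0, "report_c": 100.0}
after = {"report_a": 50.0, "report_b": 50.0, "report_c": 300.0}

print(per_report_speedup(before, after))  # report_a 20x, report_b 10x, report_c about 3x slower
print(overall_speedup(before, after))     # 4.0
```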

Although the SAP HANA option was the most expensive, the client followed our recommendation and purchased SAP HANA together with the hardware needed to run it. In this way, PFLB contributed to the customer’s subsequent growth into one of the leading retailers.

Moreover, our joint project helped identify factors that limit the performance of the client’s SAP BW system. PFLB pointed out these problems and suggested ways to solve them.

In other words, such projects are not theoretical: they allow customers to choose a well-founded IT strategy for their business.
