Load testing has always been essential for ensuring applications can handle real-world traffic, but the process traditionally demands deep technical expertise, time-intensive setup, and painstaking manual analysis. AI is changing that.

By automating scenario creation, optimizing test parameters, and delivering clear, data-driven reports, AI is lowering the barrier to entry and shortening feedback loops. In 2025, AI features in several load-testing platforms have moved from theory to practice: some offer capabilities usable today, while others remain experimental.

AI in Load Testing Today

AI for Test Authoring & Operations

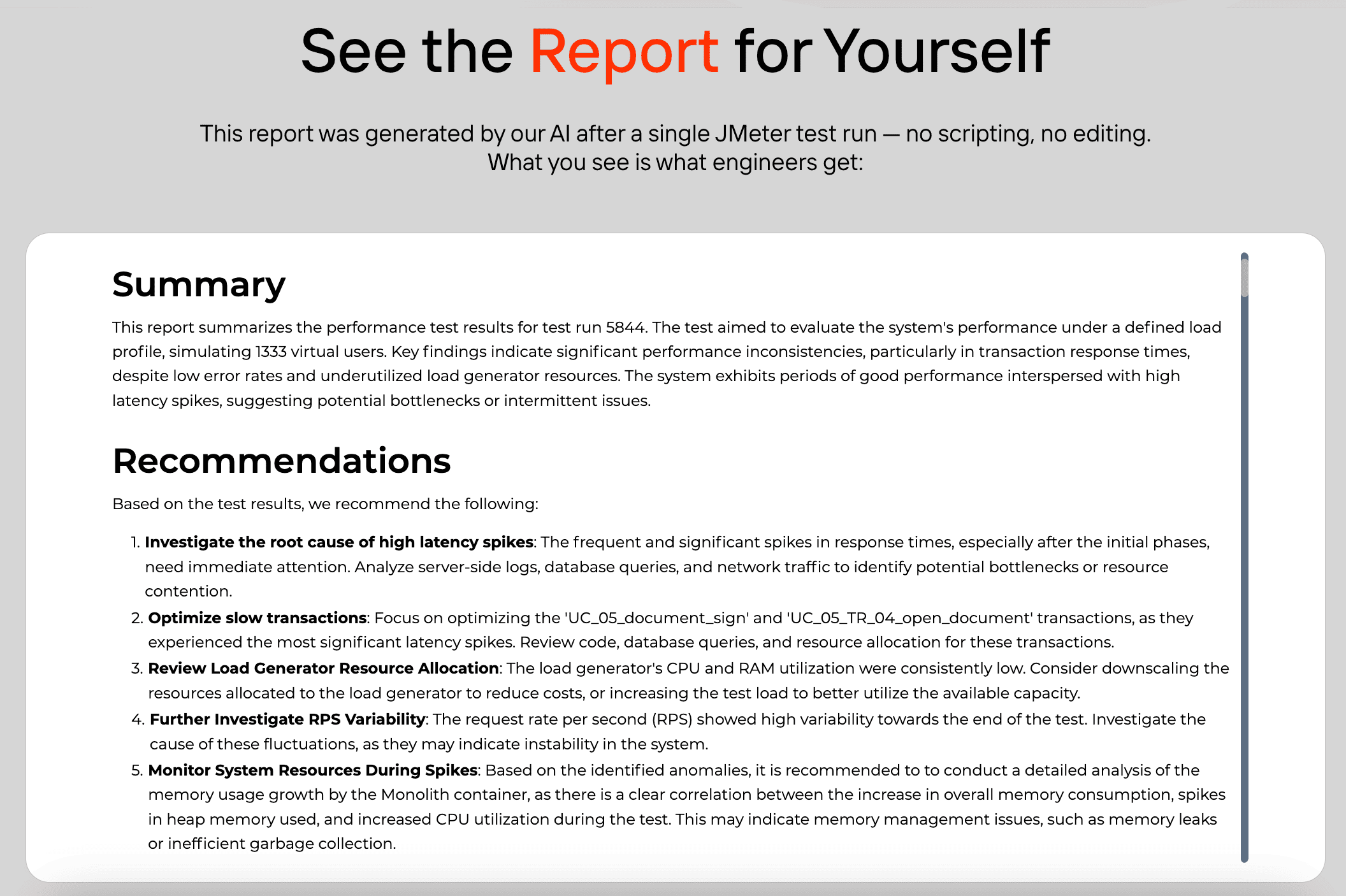

PFLB

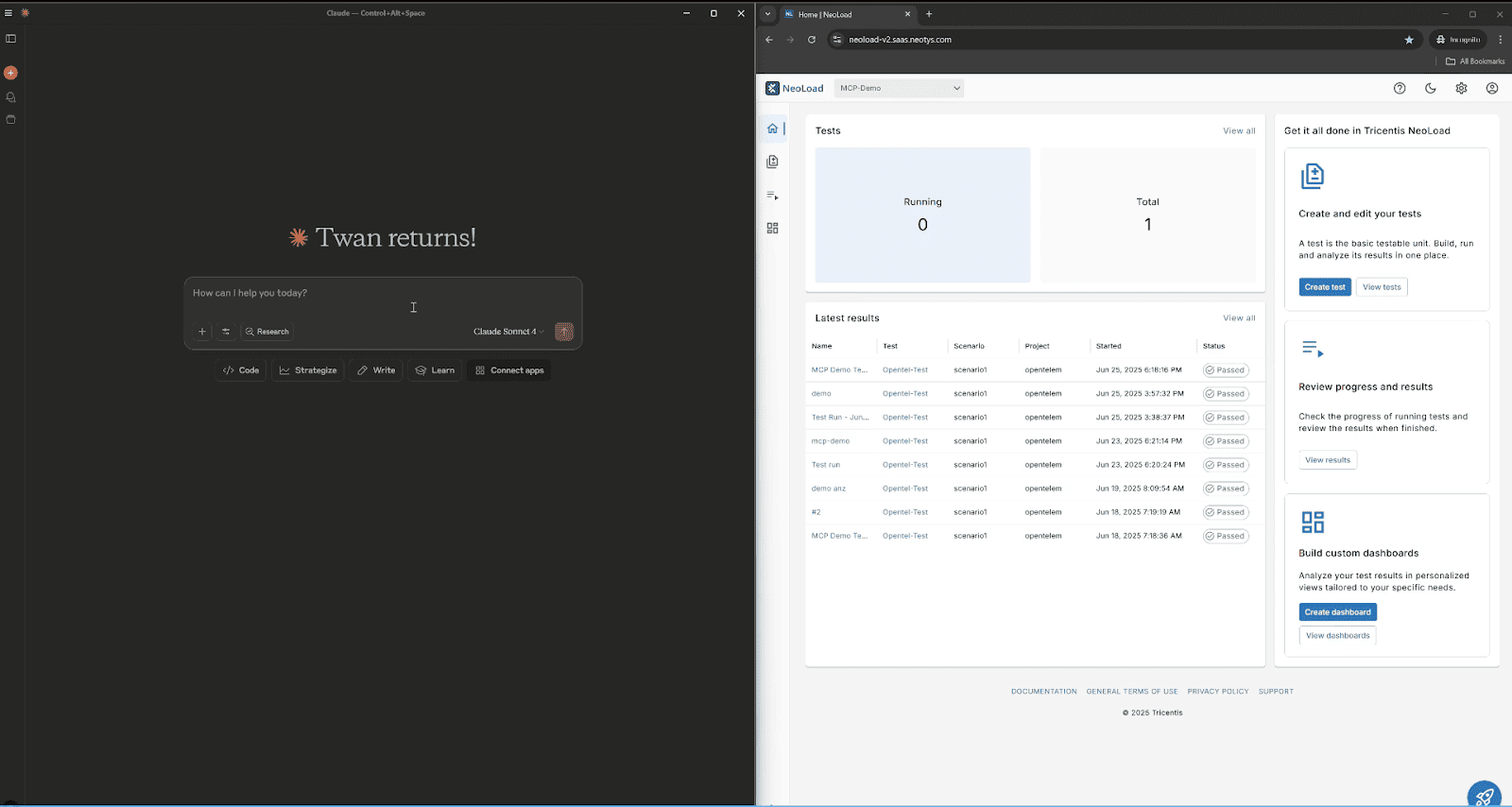

NeoLoad MCP (Tricentis)

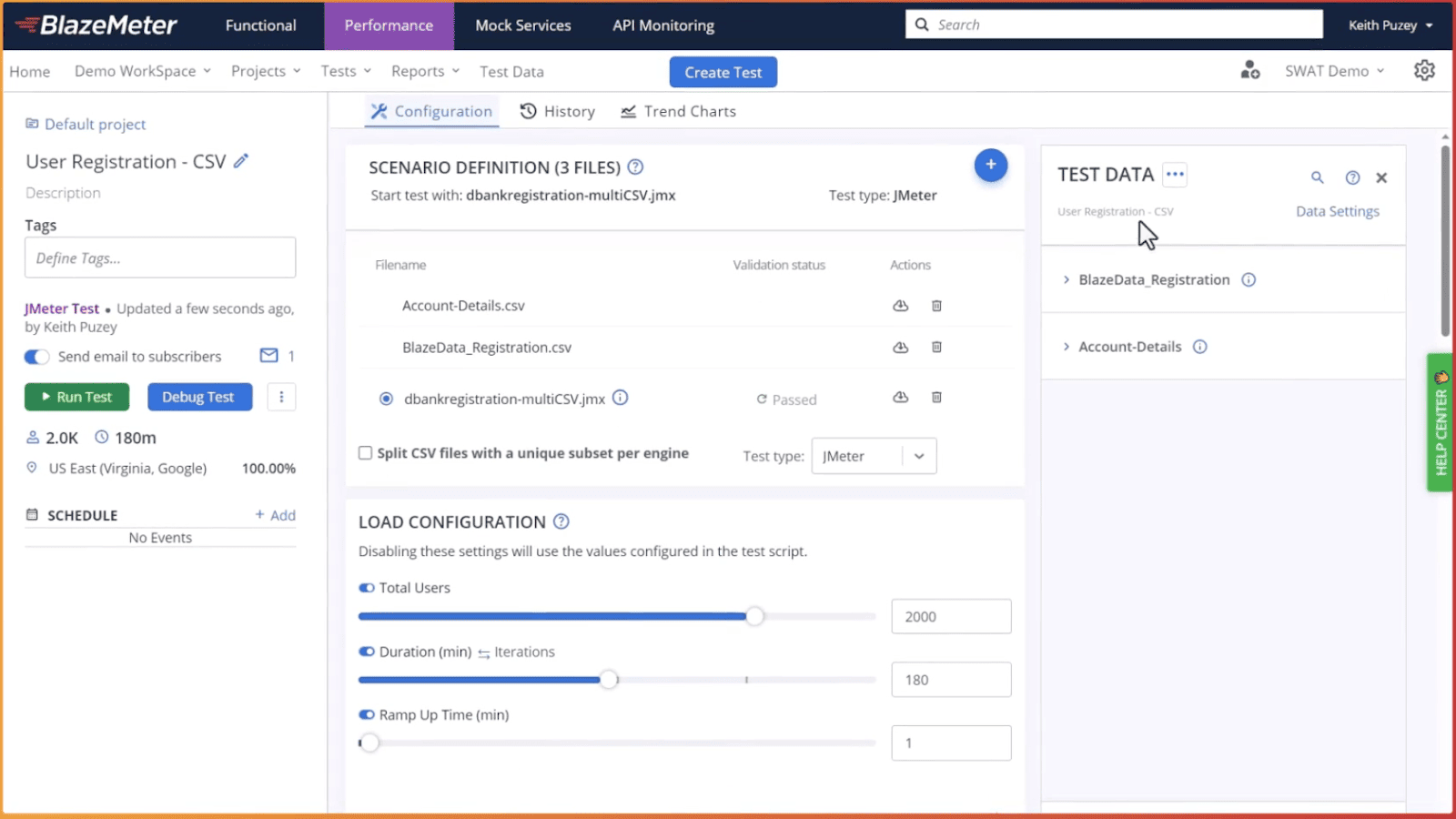

BlazeMeter AI Test Data

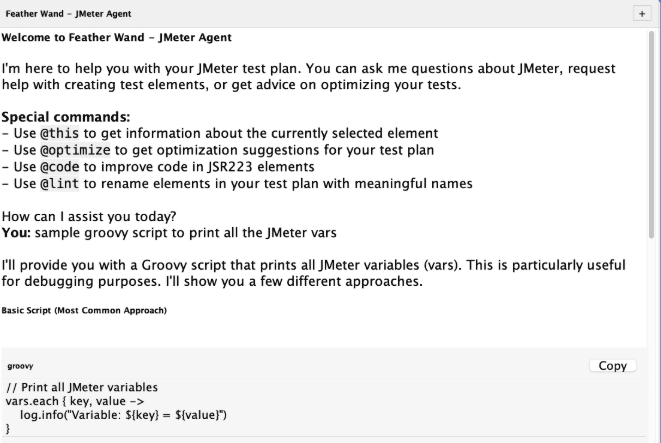

Featherwand

Where AI Is Absent or Experimental

k6

Gatling

AI-Generated Network Sniffers

Limitations of AI in Load Testing

AI features in load-testing tools have clear benefits, but each comes with constraints that affect reliability, scope, and adoption. These limitations stem directly from how AI works in practice today, from data quality to integration dependencies, and they should be factored into any implementation plan.

Scope of AI Features

Data Quality and Model Context

Lack of Real-Time Adaptation

Integration Fragility

Risk of Over-Reliance on AI

Emerging AI Trends in Load Testing

AI in load testing is still evolving, and most current features cover specific, well-defined tasks. However, several trends are beginning to reshape how performance testing will be conducted over the next few years. These trends draw on vendor roadmaps, industry experiments, and patterns in adjacent testing technologies.

Fully AI-Run Load Testing Scenarios (PFLB Development)

Expansion of Natural-Language Interfaces

AI-Driven Synthetic Data at Scale

Embedded AI Authoring Inside Open-Source Tools

Pre-Run Test Design Optimization

Towards Real-Time Adaptive Load Testing

Cross-Integration with AIOps Pipelines

Conclusion

AI is reshaping load testing by lowering skill barriers, speeding up test creation, and delivering insights faster. Current capabilities target specific stages (authoring assistance, synthetic data generation, and post-run reporting) rather than replacing the full process.

Limitations remain: results depend on data quality, most systems don’t adapt in real time, and end-to-end autonomous testing is still in development. Even so, the trajectory is clear. As AI integrates more deeply into the testing lifecycle, it will shift performance testing from a specialist task to a more collaborative, continuous practice, with humans guiding strategy and AI handling execution and analysis at scale.