Many of the tools performance testers use already rely on AI methods. Monitoring platforms use anomaly detection, cloud providers forecast demand for autoscaling, and log systems group similar messages with machine learning. These features are often taken for granted, but each one is an example of AI applied in practice. This article looks at six areas where testers already work with AI every day.

Key Takeaways

1. Anomaly Detection in APM Tools

What it is

Anomaly detection in application performance monitoring (APM) tools automatically learns what “normal” behavior looks like, including trends and seasonality, and alerts you when a metric deviates from it. Unlike a simple fixed threshold, it adapts to cycles in your data (hour of day, day of week), to gradual baseline shifts, and to noisy behavior.
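To make the idea concrete, the sketch below learns a separate mean and spread for each hour of the day and flags values that fall far outside that hour's band. It is a simplified stand-in written for this article, not Datadog's algorithm; the function names, the 3-sigma rule (`k=3.0`), and the sample data are all assumptions.

```python
from collections import defaultdict
from statistics import mean, pstdev

def seasonal_baseline(history):
    """Learn a per-hour-of-day baseline from (hour, value) samples.

    Returns {hour: (mean, population stdev)}. Real APM tools also model
    day-of-week cycles and trend; this sketch keeps only hour of day.
    """
    buckets = defaultdict(list)
    for hour, value in history:
        buckets[hour % 24].append(value)
    return {h: (mean(vals), pstdev(vals)) for h, vals in buckets.items()}

def is_anomalous(baseline, hour, value, k=3.0, min_std=1e-9):
    """Flag a value more than k standard deviations from that hour's mean."""
    mu, sigma = baseline[hour % 24]
    return abs(value - mu) > k * max(sigma, min_std)

# One week of hourly p95 latency (ms): ~200 ms in business hours, ~80 ms at night,
# with a small day-to-day wobble so each hour has a nonzero spread.
history = [
    (hour, (200 if 9 <= hour < 18 else 80) + (day % 3) * 5)
    for day in range(7)
    for hour in range(24)
]
baseline = seasonal_baseline(history)

print(is_anomalous(baseline, 14, 200))  # False: normal for mid-afternoon
print(is_anomalous(baseline, 3, 200))   # True: same value at 03:00 is anomalous
```

The example shows why a fixed threshold struggles here: 200 ms is routine at 14:00 but far outside the learned band at 03:00.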

How it works in practice (Datadog example)

Strengths and limitations

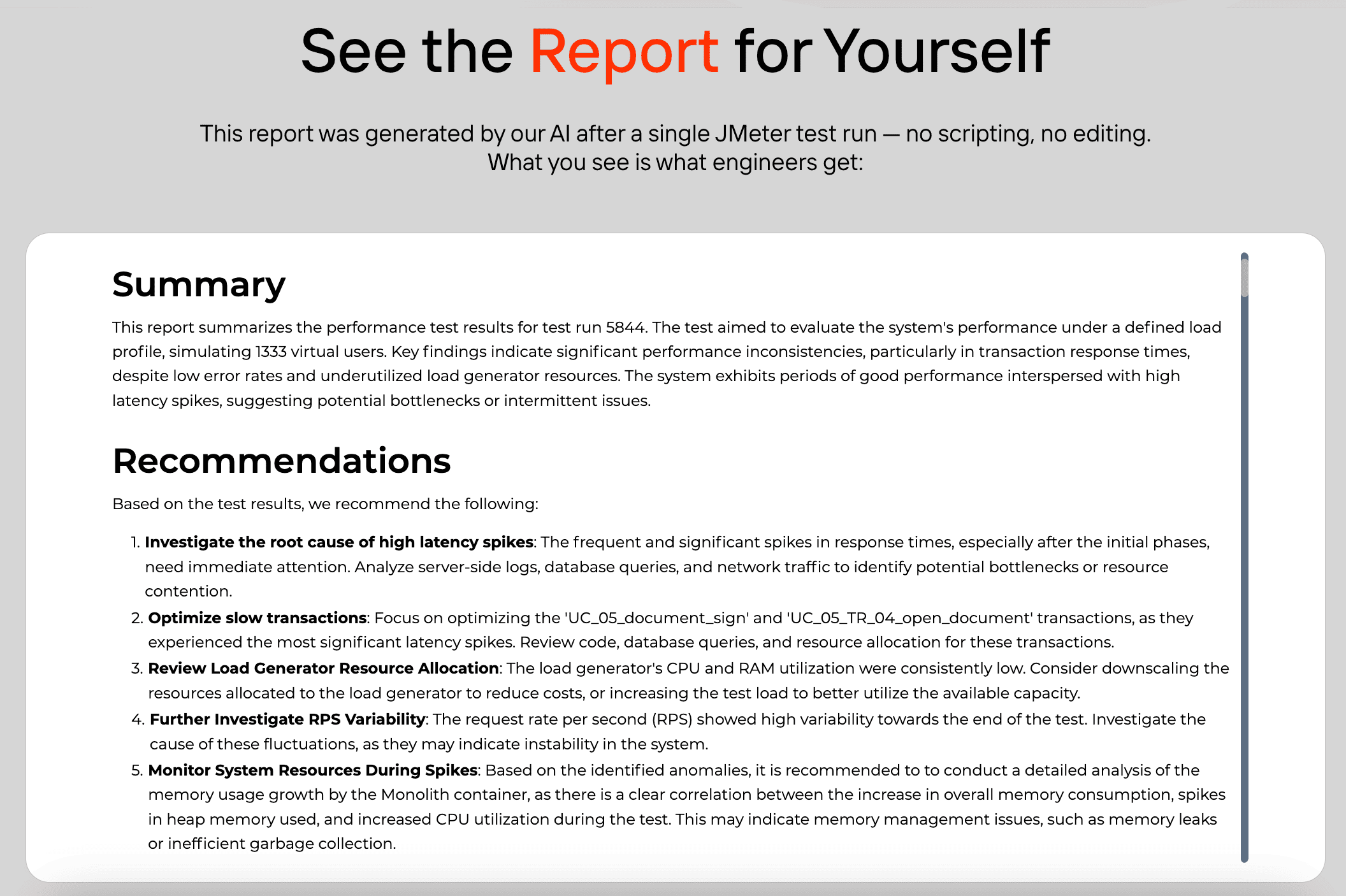

2. AI-Assisted Reporting in Load Testing Platforms

What it is

Load testing platforms are starting to integrate AI to reduce the manual effort of interpreting results and running tests. Instead of requiring engineers to sift through graphs or memorize tool-specific syntax, AI generates reports, summarizes findings, and even provides conversational interfaces for executing scenarios.
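Behind any generated report sits an aggregation step: raw samples reduced to percentiles and an error rate. The sketch below shows that step and fills a fixed sentence template, standing in for the language-model wording that AI reporting features add. The function name, the p95 target, and the sample data are hypothetical.

```python
from statistics import quantiles

def summarize_run(latencies_ms, errors, total, p95_target_ms):
    """Reduce raw results to the figures a report usually leads with.

    An AI reporting feature would hand figures like these to a language
    model; here a fixed template produces the sentence instead.
    """
    cuts = quantiles(latencies_ms, n=100, method="inclusive")  # 99 cut points
    p50, p95, p99 = cuts[49], cuts[94], cuts[98]
    error_rate = errors / total
    verdict = "met" if p95 <= p95_target_ms else "missed"
    text = (
        f"p95 latency was {p95:.0f} ms, so the {p95_target_ms} ms target was {verdict}; "
        f"{error_rate:.2%} of {total} requests failed."
    )
    return {"p50": p50, "p95": p95, "p99": p99, "error_rate": error_rate, "text": text}

# Hypothetical run: 100 requests with latencies of 1..100 ms, 2 of which failed.
report = summarize_run(list(range(1, 101)), errors=2, total=100, p95_target_ms=100)
print(report["text"])
```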

How it works in practice

Strengths and limitations

| Strengths | Limitations / Conditions |
|---|---|
| Produces first-draft reports and summaries immediately after a run. | Some AI capabilities may require enterprise or paid tiers. |
| Makes load testing results easier to understand for business stakeholders. | Depth and accuracy vary between platforms; not all AI features are production-ready. |
| Reduces repetitive setup and reporting work through conversational interfaces. | Advanced features like AI scripting are in development, not yet mainstream. |

3. Predictive Autoscaling in Cloud Platforms (AWS, Azure, Google Cloud)

What it is

Autoscaling is the ability of cloud services to add or remove compute resources based on demand. Traditionally, this was reactive: the system scaled after metrics such as CPU usage or request rate crossed a threshold. Predictive autoscaling goes a step further. It uses historical data to forecast traffic patterns and adjusts capacity before the demand spike arrives.
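The predictive scaling features of AWS, Azure, and Google Cloud use their own forecasting models. As a much simpler stand-in, the sketch below uses a seasonal-naive forecast (the same hour in previous weeks) to size capacity one hour ahead, then takes the larger of the forecast and current load so reactive scaling still acts as a safety net. The function names, the 25% headroom, and the per-instance capacity are assumptions for illustration.

```python
import math
from statistics import mean

def forecast_next_hour(hourly_rps, season=24 * 7):
    """Seasonal-naive forecast: average the same hour-of-week from past weeks.

    hourly_rps holds one requests-per-second value per hour, oldest first.
    """
    target = len(hourly_rps)  # index of the hour being forecast
    past = [hourly_rps[i] for i in range(target - season, -1, -season)]
    if not past:
        raise ValueError("need at least one full season of history")
    return mean(past)

def desired_instances(forecast_rps, current_rps, rps_per_instance,
                      headroom=0.25, min_instances=1):
    """Size for the larger of forecast and current load, plus headroom.

    Using max() keeps reactive scaling as a safety net when real traffic
    exceeds the forecast.
    """
    demand = max(forecast_rps, current_rps) * (1 + headroom)
    return max(min_instances, math.ceil(demand / rps_per_instance))

# Two weeks plus nine hours of synthetic traffic:
# 500 rps from 09:00 to 18:00, 100 rps otherwise. The next hour is 09:00.
history = [500 if 9 <= h % 24 < 18 else 100 for h in range(2 * 168 + 9)]

forecast = forecast_next_hour(history)  # the 09:00 peak from previous weeks
print(forecast, desired_instances(forecast, current_rps=history[-1], rps_per_instance=100))
```

Capacity for the 09:00 peak is requested while traffic is still at its overnight level, which is the lead time reactive scaling lacks.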

How it works in practice

Strengths and limitations

| Strengths | Limitations / Conditions |
|---|---|
| Reduces latency at the start of traffic surges, because instances are already running. | Requires consistent traffic cycles (e.g. business hours, weekly peaks). With irregular or spiky workloads, predictive models may be less effective. |
| Optimizes cost by avoiding both over-provisioning and lagging reactive scale-outs. | Needs a baseline of historical usage; for new applications without stable traffic, predictive scaling won’t work well. |
| Improves reliability for scheduled events (marketing campaigns, batch jobs, etc.). | Forecasts come from statistical/ML models and are not infallible. Unexpected load outside learned patterns still requires reactive scaling as a safety net. |

4. AI Coding Assistants for Test Scripting

What it is

AI coding assistants such as GitHub Copilot and JetBrains AI Assistant apply large language models trained on public code to suggest completions, generate functions, or explain existing code. For performance testers, these assistants are useful when writing load scripts, setting up test data, or automating repetitive tasks.
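As an illustration of the kind of boilerplate an assistant might draft for a load test, here is a hypothetical test-data factory. It was written for this article rather than produced by any particular assistant; the class, function, and region names are placeholders, and the docstring lists review checks that such generated code still needs.

```python
import random
import uuid
from dataclasses import dataclass

# Hypothetical region values; replace with ones your system actually accepts.
REGIONS = ("eu-west", "us-east", "ap-south")

@dataclass(frozen=True)
class LoadTestUser:
    user_id: str
    email: str
    region: str

def make_users(count, seed=42):
    """Generate reproducible, unique test users for a load test.

    Things worth checking when an assistant drafts code like this:
    - IDs come from a seeded RNG rather than uuid.uuid4(), so runs are repeatable.
    - Emails use the reserved example.com domain, so no real inbox is hit.
    - Each email embeds the index, so users stay unique across the data set.
    """
    rng = random.Random(seed)
    return [
        LoadTestUser(
            user_id=str(uuid.UUID(int=rng.getrandbits(128), version=4)),
            email=f"loadtest+{i}@example.com",
            region=rng.choice(REGIONS),
        )
        for i in range(count)
    ]

for user in make_users(3):
    print(user)
```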

How it works in practice

Strengths and limitations

| Strengths | Limitations / Conditions |
|---|---|
| Saves time on repetitive boilerplate such as request definitions, assertions, and data factories. | Output may be incorrect or inefficient; all generated code needs review. |
| Can speed up learning for new frameworks (e.g., showing examples of k6 or Locust syntax). | Models have no knowledge of your system’s constraints or non-public APIs. |
| Provides quick explanations or documentation for less familiar parts of a test suite. | Requires internet connectivity and may raise compliance concerns in restricted environments. |

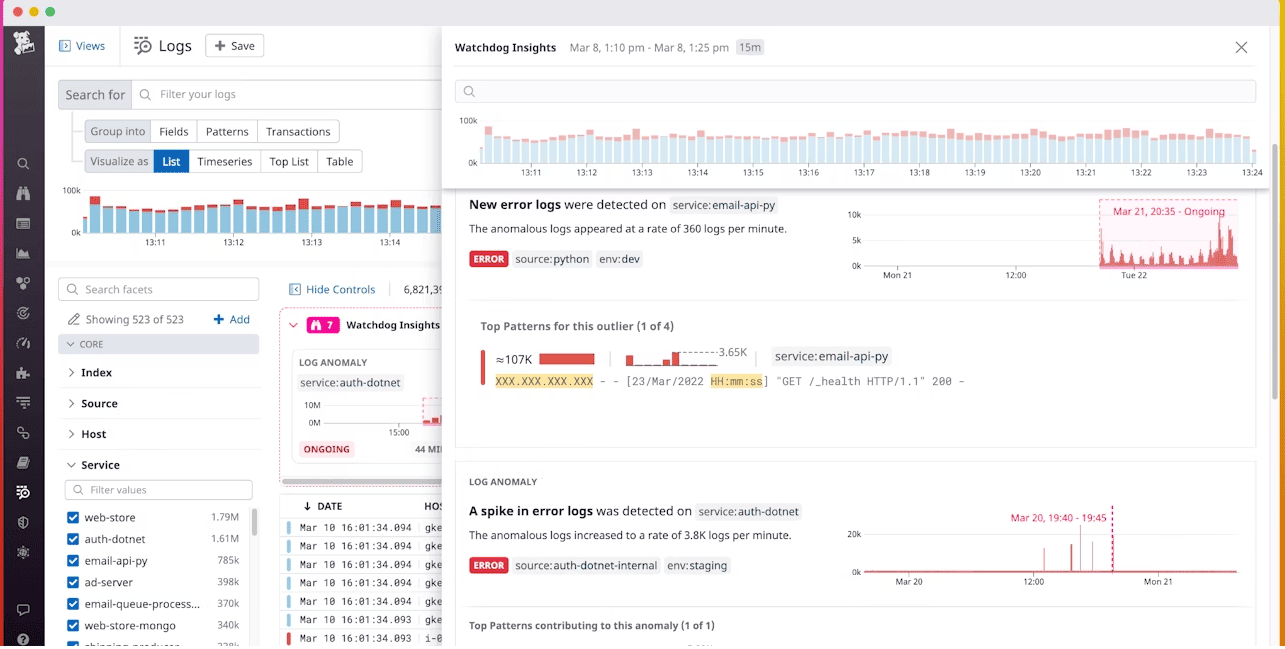

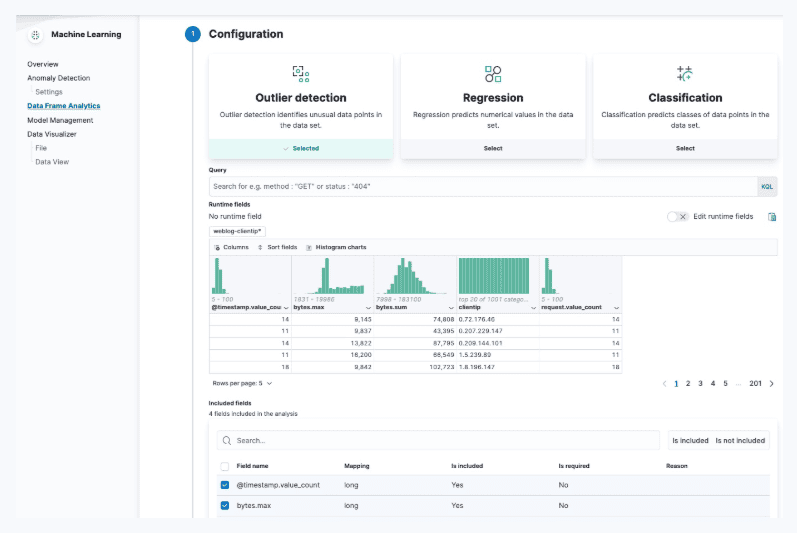

5. Log Analysis with Clustering and Outlier Detection

What it is

Modern log platforms use AI and machine learning techniques to reduce noise in large volumes of log data. Instead of showing every raw line, they group similar messages together, highlight new patterns, and flag unusual activity. This helps performance testers detect emerging issues without manually searching through thousands of entries.
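The grouping step can be approximated by masking the variable parts of each message (IP addresses, hex values, numbers) so that lines differing only in those fields collapse into one template, then comparing templates against a baseline window. This regex masking is a simplified stand-in written for this article, not the algorithm any particular log platform uses; the patterns and sample lines are assumptions.

```python
import re
from collections import Counter

# Masks applied in order; IPs go first so their digits are not caught as numbers.
MASKS = [
    (re.compile(r"\b\d{1,3}(?:\.\d{1,3}){3}\b"), "<IP>"),
    (re.compile(r"\b0x[0-9a-fA-F]+\b"), "<HEX>"),
    (re.compile(r"\b\d+(?:\.\d+)?(?:ms|s)?\b"), "<NUM>"),
]

def template(line):
    """Replace variable fields with placeholders to get the message's template."""
    for pattern, token in MASKS:
        line = pattern.sub(token, line)
    return line

def cluster(lines):
    """Count how many lines fall into each template."""
    return Counter(template(line) for line in lines)

def new_patterns(baseline_lines, current_lines):
    """Templates in the current window that never appeared in the baseline."""
    known = set(cluster(baseline_lines))
    return {t: n for t, n in cluster(current_lines).items() if t not in known}

baseline = [
    "GET /api/orders 200 in 35ms from 10.0.0.5",
    "GET /api/orders 200 in 41ms from 10.0.0.7",
    "DB pool wait 3ms",
]
current = [
    "GET /api/orders 200 in 38ms from 10.0.0.9",
    "upstream timeout after 30s on 10.0.0.12",
    "upstream timeout after 30s on 10.0.0.13",
]
print(new_patterns(baseline, current))
```

One design caveat: masking every number also merges HTTP status codes, so a 503 and a 200 on the same route would share a template. A real setup would keep fields like that out of the mask, which is one reason clean, well-structured log ingestion matters.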

How it works in practice

Strengths and limitations

| Strengths | Limitations / Conditions |
|---|---|
| Reduces manual triage by grouping repeated errors into clusters. | Requires well-structured and clean log ingestion. Poor parsing leads to poor clustering. |
| Surfaces new or rare error messages quickly, without writing custom regex searches. | Models rely on historical baselines. Sudden changes from deployments or configuration shifts can trigger false positives. |
| Works across large data volumes, where manual review would be impractical. | Advanced anomaly detection often requires paid tiers or additional setup (e.g., Elastic ML jobs). |

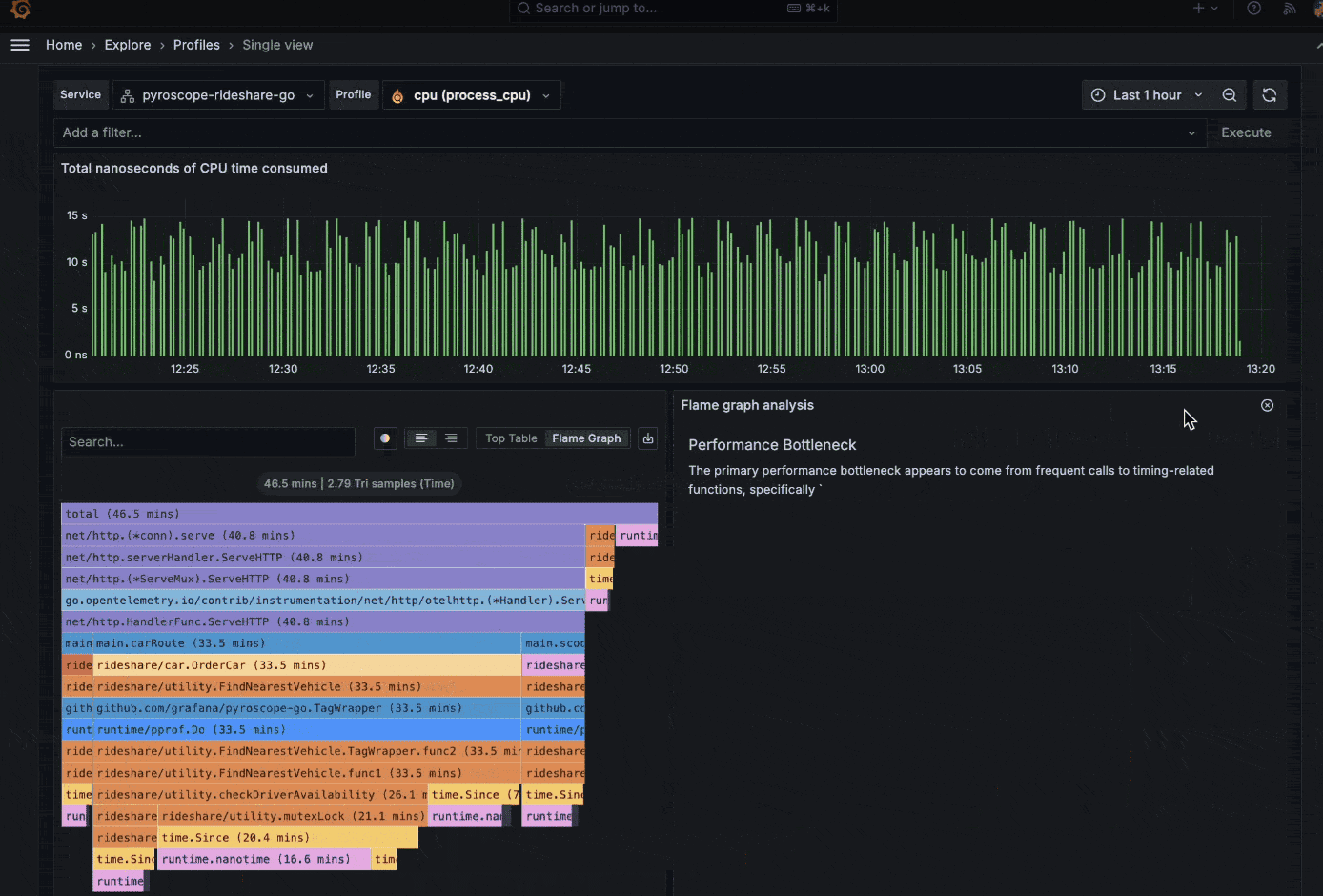

6. Monitoring Dashboards with Forecasting and Early Warnings

What it is

Monitoring platforms now include forecasting and predictive alerting features. Instead of only reacting to threshold breaches, they model past behavior to anticipate when a metric is likely to cross a critical boundary. This gives performance testers time to act before a failure or capacity issue occurs.
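A minimal way to see how an early warning is derived: fit a straight-line trend to recent samples and project when it will cross the critical boundary. Production forecasting features typically use richer models with seasonality and confidence bands; the linear fit, the function names, and the disk-usage data here are assumptions for illustration.

```python
def fit_trend(samples):
    """Ordinary least-squares line through (t, value) pairs; returns (slope, intercept)."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_t = sum(t for t, _ in samples) / n
    mean_v = sum(v for _, v in samples) / n
    var_t = sum((t - mean_t) ** 2 for t, _ in samples)
    if var_t == 0:
        raise ValueError("samples need at least two distinct timestamps")
    cov = sum((t - mean_t) * (v - mean_v) for t, v in samples)
    slope = cov / var_t
    return slope, mean_v - slope * mean_t

def time_to_threshold(samples, threshold):
    """Time at which the fitted trend reaches threshold, or None if it is not rising."""
    slope, intercept = fit_trend(samples)
    if slope <= 0:
        return None
    return (threshold - intercept) / slope

# Disk usage (%) sampled hourly, growing 2 points per hour.
disk = [(0, 50), (1, 52), (2, 54), (3, 56), (4, 58)]
crossing = time_to_threshold(disk, threshold=90)
hours_left = crossing - disk[-1][0]
print(f"projected to reach 90% in {hours_left:.0f} h")
```

An alert rule would then fire when `hours_left` drops below the lead time the team needs to respond, rather than waiting for usage to reach 90%.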

How it works in practice

Strengths and limitations

| Strengths | Limitations / Conditions |
|---|---|
| Warns before thresholds are breached, giving engineers lead time to respond. | Requires consistent seasonal or trending data. Irregular workloads limit accuracy. |
| Provides visualization of expected ranges with confidence intervals. | Forecast bands can be wide, reducing usefulness for fine-grained SLAs. |
| Useful for capacity planning and validating performance test environments. | Forecasts are not guarantees: they model history and cannot anticipate unexpected spikes. |

Conclusion

AI is already a standard part of performance engineering, from anomaly detection and predictive autoscaling to reporting and log analysis. A likely next step is AI-driven scripting, which would let teams describe scenarios in plain language and have the platform generate runnable tests. That shift would give people without deep technical backgrounds direct access to load testing, making performance validation faster, more collaborative, and easier to adopt across an organization.