Load testing is essential, but much of the process is repetitive. Engineers spend hours correlating scripts, preparing datasets, scanning endless graphs, and turning raw metrics into slide decks. None of this defines real expertise — yet it takes time away from analyzing bottlenecks and making decisions.

Modern platforms are embedding AI where it makes sense: anomaly detection, reporting, workload modeling, even draft scripting. The goal isn’t to replace engineers but to automate the low-value steps that slow them down.

Here are five tasks where AI can already take on the heavy lifting.

1. Real-Time Anomaly Detection

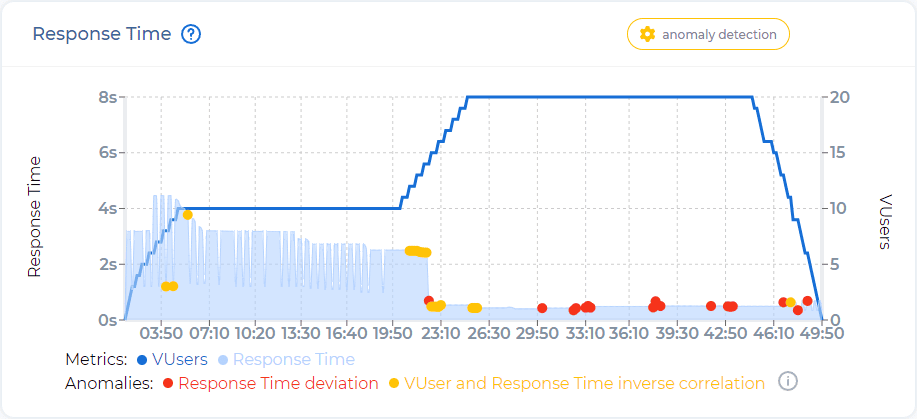

During a load test, performance engineers track multiple metrics at once: latency percentiles, throughput, error rates, CPU and memory utilization, database response times. Spotting anomalies manually means watching dashboards and trying to judge whether a sudden spike or dip is expected behavior or the start of a system failure.

AI improves this process by continuously scanning metric streams with anomaly detection models. These models establish baselines during the run and flag deviations in near real time.

Under the hood, platforms use a mix of statistical approaches (moving averages, adaptive thresholds) and machine learning (isolation forests, clustering, regression-based forecasting) to reduce noise and highlight only meaningful deviations.
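To make the statistical side concrete, here is a minimal sketch of a rolling-baseline detector. It is not how any particular platform implements detection; the function name `detect_anomalies`, the 30-sample window, and the z-score threshold of 3 are all assumptions chosen for illustration.

```python
from collections import deque
from statistics import mean, stdev


def detect_anomalies(samples, window=30, threshold=3.0):
    """Flag samples that deviate strongly from a rolling baseline.

    samples: iterable of (timestamp, value) pairs, e.g. p95 latency per second.
    Returns a list of (timestamp, value, z_score) tuples for flagged points.
    window and threshold are illustrative defaults, not recommended settings.
    """
    history = deque(maxlen=window)
    anomalies = []
    for timestamp, value in samples:
        if len(history) == window:
            baseline = mean(history)
            spread = stdev(history)
            if spread > 0:
                z = (value - baseline) / spread
                if abs(z) > threshold:
                    anomalies.append((timestamp, value, round(z, 1)))
                    continue  # keep the outlier out of the baseline
        history.append(value)
    return anomalies


# Steady latency around 100-104 ms with a single spike at t=45.
samples = [(t, 400 if t == 45 else 100 + t % 5) for t in range(60)]
flagged = detect_anomalies(samples)
```

Because flagged points are excluded from the baseline, a sustained shift in level would keep being flagged rather than absorbed. That suits a short test run, but a production detector would likely need a smarter rule.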

The real value is not “AI finds the problem” but “AI points engineers to where to look first.” Instead of scanning hundreds of charts, the engineer gets an annotated log of anomalies with timestamps, affected metrics, and confidence scores.

Engineer’s role: confirm whether the anomaly is actionable. For example, a brief 2% error spike during ramp-up may not violate SLAs, while sustained CPU-driven latency growth at steady state demands immediate investigation.

In practice: anomaly detection is already built into leading tools. PFLB highlights anomalies across metrics automatically.

Other enterprise platforms — such as Dynatrace, New Relic, and LoadRunner — also provide anomaly detection.

2. Test Result Summarization for Stakeholders

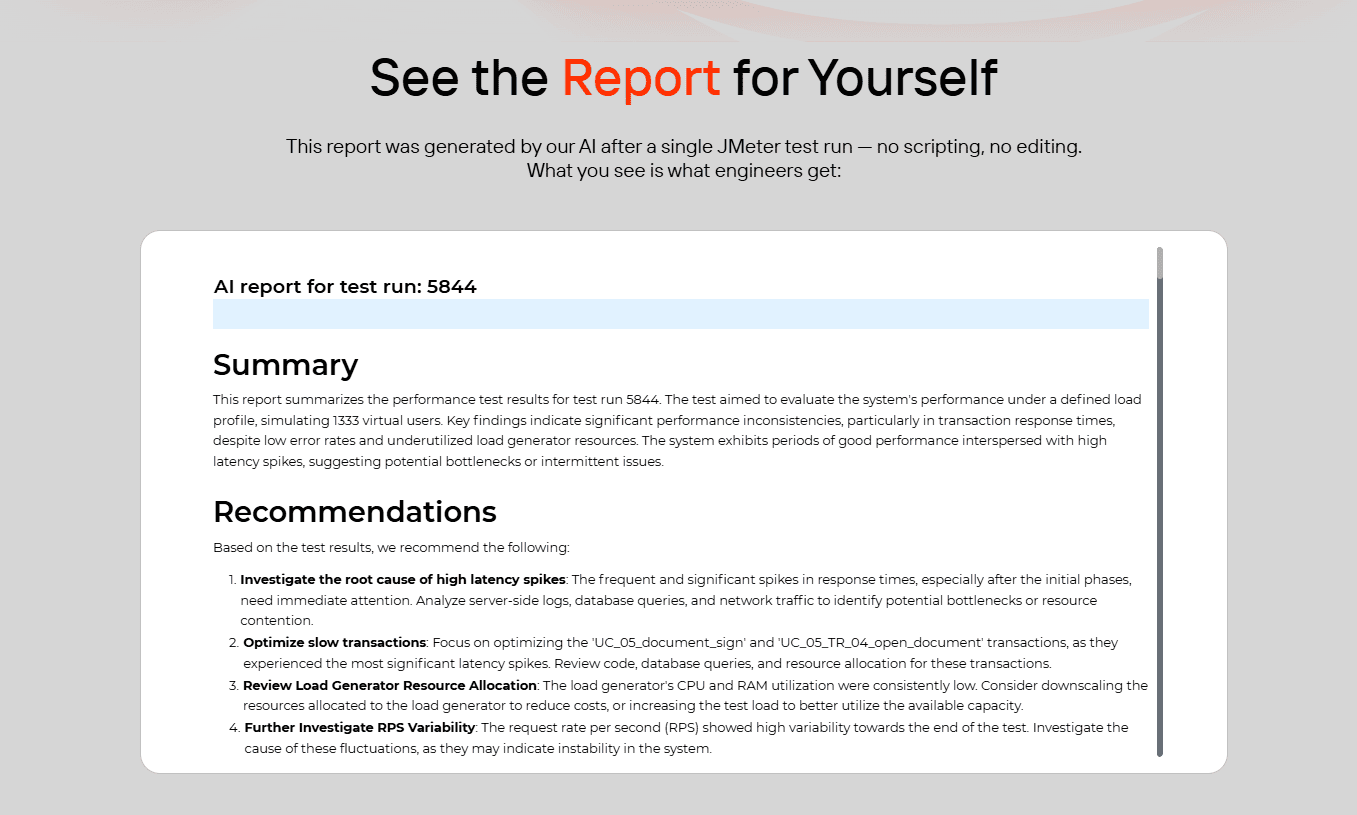

One of the least technical but most time-consuming tasks in performance testing is preparing results for non-engineers. After a test run, engineers often spend hours building slide decks: selecting charts, annotating spikes, and translating throughput and latency metrics into plain business language. The reporting step can take longer than the test itself.

AI cuts down this overhead by automatically generating structured summaries from raw test results. Instead of a dump of graphs, the platform produces a short written narrative of the run.

Behind the scenes, natural language generation (NLG) models map key metrics — latency percentiles, SLA thresholds, error distributions, resource correlations — into templated statements enriched with contextual phrasing. This allows stakeholders outside engineering (product managers, QA leads, executives) to understand what happened without learning how to read a throughput curve.
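The templated part of that pipeline can be sketched in a few lines. This is a simplified illustration, not PFLB's implementation: the function `summarize_run`, the metric keys (`p95_ms`, `error_rate`, `peak_rps`), and the SLA values are hypothetical, and a real system would add the contextual phrasing on top.

```python
def summarize_run(metrics, sla_p95_ms, sla_error_rate):
    """Turn a few headline metrics into plain-language sentences.

    metrics: dict with hypothetical keys 'p95_ms', 'error_rate' (a fraction),
    and 'peak_rps'. The SLA arguments use the same units.
    """
    sentences = []

    p95 = metrics["p95_ms"]
    if p95 <= sla_p95_ms:
        sentences.append(
            f"95th percentile latency was {p95} ms, within the {sla_p95_ms} ms target."
        )
    else:
        over_pct = round((p95 - sla_p95_ms) / sla_p95_ms * 100)
        sentences.append(
            f"95th percentile latency was {p95} ms, {over_pct}% over the {sla_p95_ms} ms target."
        )

    error_rate = metrics["error_rate"]
    verdict = "within" if error_rate <= sla_error_rate else "above"
    sentences.append(
        f"Error rate was {error_rate:.1%}, {verdict} the {sla_error_rate:.1%} limit."
    )

    sentences.append(f"Throughput peaked at {metrics['peak_rps']} requests per second.")
    return " ".join(sentences)


report = summarize_run(
    {"p95_ms": 1200, "error_rate": 0.004, "peak_rps": 850},
    sla_p95_ms=1000,
    sla_error_rate=0.01,
)
print(report)
```

Keeping the numbers in fixed templates means the figures in the narrative always match the raw data, which is the property an engineer checks first when reviewing a draft.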

The goal isn’t to simplify results into fluff, but to eliminate translation overhead. Engineers still review the AI draft, highlight critical risks, and decide what goes into the official report. But they no longer waste hours formatting slides or explaining basic terminology.

At PFLB: AI-powered summaries are live. Engineers get both the raw graphs and the auto-generated narrative, saving time and ensuring consistency across projects. As of now, PFLB is the only load testing platform offering fully embedded AI-powered load test reporting.

3. AI-Assisted Scripting & Correlation Help

For most engineers, scripting is the slowest part of a performance testing cycle. Even with mature tools like JMeter or LoadRunner, building a test script that mimics real-world usage means:

Correlation is especially painful. Miss a single dynamic value and the script breaks. Over-correlate, and you introduce noise. Experienced testers can spend hours just stabilizing scripts before a single meaningful run takes place.
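To show what the search for dynamic values involves, here is a deliberately simple rule-based heuristic, not an AI technique. It scans a recorded session for token-like strings that first appear in a response and are later sent back in a request. The step format (`name`, `request`, `response`) and the 16-character token pattern are assumptions made for this sketch.

```python
import re

# Assumption: dynamic values look like long runs of URL-safe characters.
TOKEN_PATTERN = re.compile(r"[A-Za-z0-9_\-]{16,}")


def find_correlation_candidates(recorded_steps):
    """Return values that a server issued and a later request reused.

    recorded_steps: list of dicts with 'name', 'request', and 'response'
    strings, in the order they were recorded.
    """
    first_seen = {}  # token -> name of the step whose response first contained it
    candidates = []
    for step in recorded_steps:
        for token in sorted(set(TOKEN_PATTERN.findall(step["request"]))):
            if token in first_seen:
                candidates.append(
                    {"value": token, "source": first_seen[token], "used_in": step["name"]}
                )
        for token in TOKEN_PATTERN.findall(step["response"]):
            first_seen.setdefault(token, step["name"])
    return candidates


recording = [
    {
        "name": "login",
        "request": "POST /login user=alice",
        "response": '{"csrf": "a1b2c3d4e5f6a7b8c9d0"}',
    },
    {
        "name": "checkout",
        "request": "POST /checkout csrf=a1b2c3d4e5f6a7b8c9d0",
        "response": "ok",
    },
]
print(find_correlation_candidates(recording))
```

A heuristic like this misses short IDs, encoded tokens, and values that are transformed before reuse, which is exactly where stabilizing a script by hand gets slow.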

AI is beginning to make this easier.

The technology is promising but far from perfect. Complex systems with chained dependencies, encrypted tokens, or legacy protocols often defeat automation. Inaccurate correlations can cause false stability or misleading failures, which is riskier than no correlation at all.

Engineer’s role: act as the gatekeeper. AI drafts, suggests, and accelerates, but every correlation and parameterization must be reviewed for correctness. It shifts the work from repetitive searching to higher-level validation.

In practice: functional testing tools like Mabl and ACCELQ already apply AI for scriptless testing. Research projects such as APITestGenie demonstrate that LLMs can draft executable API tests from contracts. In performance testing, most platforms, including PFLB, are actively building similar AI-assisted features. The consensus: this is where the industry is headed, but it is not yet at the point of “click-and-forget.”

4. Data Generation and Input Variation

A load test is only as good as the data that drives it. If every virtual user sends the same payloads or identical login credentials, the system under test behaves unrealistically — caches hide bottlenecks, concurrency is underrepresented, and errors don’t appear until production.

Traditionally, engineers prepare large CSV files, anonymize production logs, or write custom randomizers. These methods are time-consuming, limited in realism, and risky if sensitive data leaks into tests.

AI-driven data generation changes the picture by producing synthetic but realistic datasets on demand.

Engineer’s role: validate that generated datasets respect business rules, for example that AI-generated credit card numbers still pass the Luhn check.
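The Luhn check is easy to automate as a gate on generated data. The sketch below validates a number and, as a stand-in for an AI generator, builds random test numbers that pass the check. The helper names, the `4` prefix, and the 16-digit length are illustrative assumptions.

```python
import random


def luhn_valid(number: str) -> bool:
    """Return True if a string of digits passes the Luhn checksum."""
    total = 0
    for position, char in enumerate(reversed(number)):
        digit = int(char)
        if position % 2 == 1:  # double every second digit from the right
            digit *= 2
            if digit > 9:
                digit -= 9
        total += digit
    return total % 10 == 0


def synthetic_card_number(rng, prefix="4", length=16):
    """Build a random, Luhn-valid number for test data only."""
    body_length = length - len(prefix) - 1
    body = prefix + "".join(str(rng.randrange(10)) for _ in range(body_length))
    for check_digit in "0123456789":
        if luhn_valid(body + check_digit):
            return body + check_digit
    raise AssertionError("unreachable: exactly one check digit always fits")


rng = random.Random(42)  # seeded so a test dataset can be reproduced
dataset = [synthetic_card_number(rng) for _ in range(5)]
assert all(luhn_valid(number) for number in dataset)
```

Running the same validator over any generated dataset, whatever produced it, catches records that the system under test would reject before they distort error rates.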

In practice:

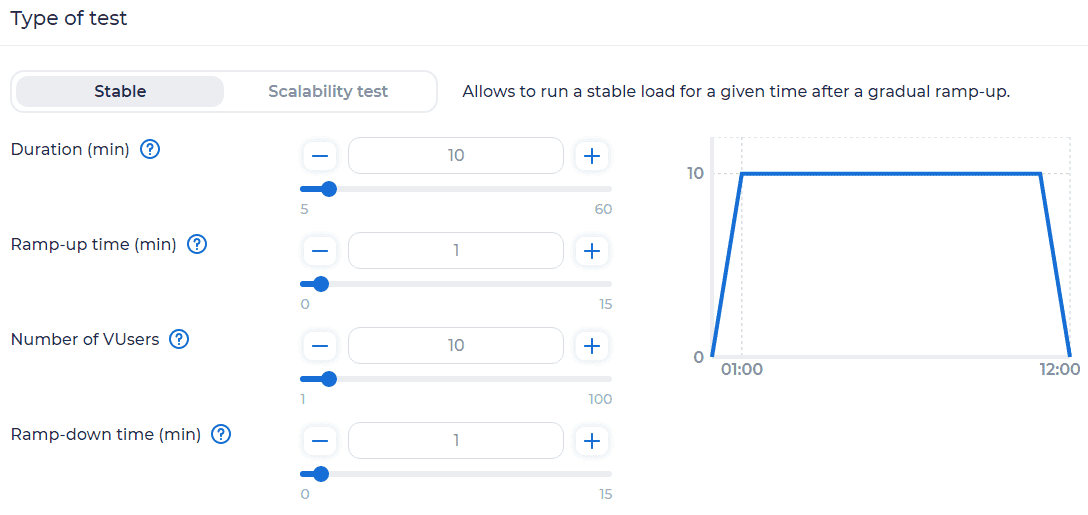

5. Workload Modeling and Scenario Tuning

Designing a workload model is often more art than science. Engineers need to answer questions like:

Traditionally, this means spreadsheets, log parsing, and a lot of trial and error. The risk: if your workload doesn’t reflect reality, your test results are misleading.

AI is now helping reduce this guesswork. By analyzing production telemetry, past test runs, or even business event schedules, AI can recommend workload patterns automatically.

Engineer’s role: remain the final authority. AI may suggest a profile that peaks at 1,000 users ten minutes into the run, but if your business-critical scenario is a sudden Black Friday spike, you’ll tune it differently. AI accelerates modeling, but humans ensure alignment with real-world risk.

In practice: some platforms already offer workload recommendations based on observed data, while others provide traffic-shaping templates. Adoption is uneven, but the trend is clear: workload design is moving from manual spreadsheets to AI-assisted modeling where engineers validate and adjust instead of starting from scratch.
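As a rough illustration of turning observed data into a starting workload, the sketch below finds the busiest minute in a list of production request timestamps and applies Little's Law (concurrent users = throughput × (response time + think time)) to size the virtual user count. The function names and sample figures are assumptions; an AI-assisted recommender would weigh far more signals than this.

```python
import math
from collections import Counter


def peak_requests_per_second(timestamps, bucket_seconds=60):
    """Average request rate during the busiest bucket.

    timestamps: request times in epoch seconds, e.g. parsed from access logs.
    """
    buckets = Counter(int(ts // bucket_seconds) for ts in timestamps)
    if not buckets:
        raise ValueError("no timestamps provided")
    return max(buckets.values()) / bucket_seconds


def required_virtual_users(target_rps, avg_response_s, think_time_s):
    """Little's Law: users = throughput * (response time + think time)."""
    return math.ceil(target_rps * (avg_response_s + think_time_s))


# Hypothetical log: 120 requests in the first minute, 30 in the second.
timestamps = [i * 0.5 for i in range(120)] + [60 + i * 2 for i in range(30)]
peak = peak_requests_per_second(timestamps)
users = required_virtual_users(peak, avg_response_s=0.5, think_time_s=4.5)
print(peak, users)
```

The result is only a baseline. The engineer still decides whether to test at the observed peak or at a multiple of it to cover growth and one-off events.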

Conclusion

AI isn’t replacing performance engineers — it’s making their jobs more strategic. Instead of staring at dashboards, wrangling CSVs, or spending nights polishing reports, engineers can focus on what actually matters: finding bottlenecks, preventing failures, and guiding the system to scale.

The real advantage won’t go to teams that adopt AI blindly, but to those who learn how to steer it. The engineers who treat AI as an extension of their workflow — not a competitor — will be the ones shipping faster, safer, and more resilient systems.

At PFLB, that future is already taking shape. Our anomaly detection and AI-driven reports are built to free up engineers for higher-value work. The rest is coming — and those who adapt early will redefine what “ready for scale” really means.