Software teams often face a challenge when parts of a system are unfinished, unstable, or too costly to call during testing. That’s what mock testing is for. By simulating dependencies, engineers can verify functionality without relying on real services. In short, mock testing means creating safe, controllable environments for validating behavior. This article explains what a mock test is, how it works, and why it matters. You’ll also learn about the main types, the benefits, and how mocking fits into broader QA practices. If you’ve ever wondered what a mock test actually is, this guide gives you the full picture.

Key Takeaways

What is Mock Testing?

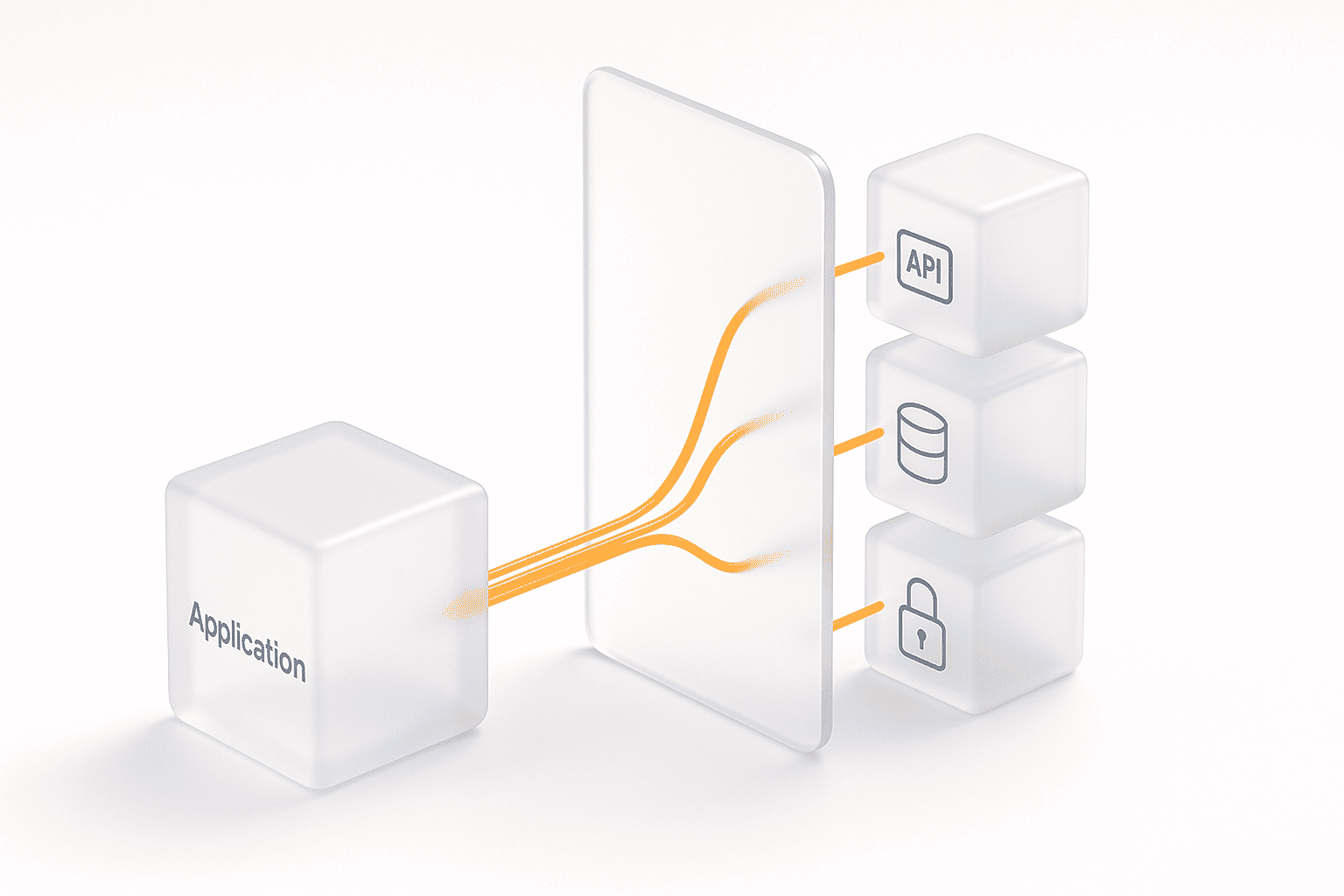

Mock testing is a software testing approach where real components, such as APIs, databases, or external services, are replaced with simulated versions called mocks. These mocks mimic the behavior of actual dependencies, allowing developers and QA teams to test applications in isolation.

The mock testing approach is especially valuable when external systems are unavailable, expensive to access, or too complex to configure during early development stages. Instead of waiting for every service to be fully functional, teams can simulate expected inputs and outputs.

In simple terms, it’s the practice of creating stand-ins for real systems so tests can run in a controlled, predictable way. This improves test reliability, shortens feedback cycles, and helps catch problems earlier.

While mocks don’t replace real-world validation, they complement it by providing stable test environments, and they can also stand in for upstream services during performance testing.
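To make the idea of a stand-in concrete, here is a minimal Python sketch. All names in it (`PaymentGateway`, `FakePaymentGateway`, `checkout`) are hypothetical and do not come from any real library. It only illustrates how code written against an interface can be tested with a simulated dependency that returns a predictable result.

```python
from dataclasses import dataclass
from typing import Protocol


class PaymentGateway(Protocol):
    """Hypothetical interface for an external payment service."""

    def charge(self, account_id: str, amount_cents: int) -> bool: ...


@dataclass
class FakePaymentGateway:
    """Hand-written stand-in: no network, always returns a preset result."""

    succeed: bool = True

    def charge(self, account_id: str, amount_cents: int) -> bool:
        return self.succeed


def checkout(gateway: PaymentGateway, account_id: str, amount_cents: int) -> str:
    """Code under test: depends only on the gateway interface."""
    if gateway.charge(account_id, amount_cents):
        return "paid"
    return "declined"


def test_checkout_reports_paid_and_declined() -> None:
    assert checkout(FakePaymentGateway(succeed=True), "acct-1", 500) == "paid"
    assert checkout(FakePaymentGateway(succeed=False), "acct-1", 500) == "declined"
```

Because `checkout` only sees the interface, the same function can run unchanged against the real gateway in integration tests.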

How Does Mock Testing Work?

Mock testing follows a structured process to replace real dependencies with controlled simulations. Here’s a step-by-step breakdown of how it typically works in engineering practice:
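One common shape for such a test, sketched here in Python with the standard library's `unittest.mock`, is: create the mock, program its response, inject it into the code under test, run that code, then check both the result and how the dependency was called. The `reserve_item` function and the `stock_level` and `reserve` methods on the inventory dependency are hypothetical names chosen for illustration.

```python
from unittest.mock import Mock


def reserve_item(inventory, sku: str, quantity: int) -> bool:
    """Code under test: reserves stock only when enough is available."""
    if inventory.stock_level(sku) < quantity:
        return False
    inventory.reserve(sku, quantity)
    return True


def test_reserves_when_stock_is_sufficient() -> None:
    # 1. Create the mock and program the response it should give.
    inventory = Mock()
    inventory.stock_level.return_value = 10

    # 2. Inject the mock and exercise the code under test.
    result = reserve_item(inventory, "SKU-1", 3)

    # 3. Check the outcome and the interaction with the dependency.
    assert result is True
    inventory.stock_level.assert_called_once_with("SKU-1")
    inventory.reserve.assert_called_once_with("SKU-1", 3)


def test_does_not_reserve_when_stock_is_short() -> None:
    inventory = Mock()
    inventory.stock_level.return_value = 1

    assert reserve_item(inventory, "SKU-1", 3) is False
    inventory.reserve.assert_not_called()
```

The second test shows why interaction checks matter: the return value alone would not reveal a bug that reserved stock it should not have.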

Examples:

1) Java (JUnit + Mockito): mock an external API client

    // Gradle: testImplementation("org.mockito:mockito-core:5.+")
    // (JUnit 5 is also assumed to be on the test classpath)

    // --- production code ---
    class WeatherClient {
        String fetch(String city) { /* calls real HTTP */ return ""; }
    }

    class WeatherService {
        private final WeatherClient client;

        WeatherService(WeatherClient client) { this.client = client; }

        String todaysForecast(String city) {
            String json = client.fetch(city);
            // parse json… simplified:
            return json.contains("rain") ? "Bring umbrella" : "All clear";
        }
    }

    // --- WeatherServiceTest.java ---
    import static org.mockito.Mockito.*;
    import static org.junit.jupiter.api.Assertions.*;
    import org.junit.jupiter.api.Test;

    class WeatherServiceTest {
        @Test
        void returnsUmbrellaAdviceWhenRaining() {
            // Replace the real HTTP client with a mock that returns canned JSON
            WeatherClient client = mock(WeatherClient.class);
            when(client.fetch("London")).thenReturn("{\"cond\":\"rain\"}");

            WeatherService svc = new WeatherService(client);

            assertEquals("Bring umbrella", svc.todaysForecast("London"));
        }
    }

2) Java (WireMock): mock HTTP API in integration tests

    // testImplementation("com.github.tomakehurst:wiremock-jre8:2.+")
    import static com.github.tomakehurst.wiremock.client.WireMock.*;
    import com.github.tomakehurst.wiremock.WireMockServer;

    WireMockServer wire = new WireMockServer(8089);
    wire.start();
    configureFor("localhost", 8089);

    stubFor(get(urlEqualTo("/v1/users/42"))
        .willReturn(aResponse()
            .withStatus(200)
            .withHeader("Content-Type", "application/json")
            .withFixedDelay(150) // latency injection
            .withBody("{\"id\":42,\"name\":\"Ada\"}")));

    // Your app under test calls http://localhost:8089/v1/users/42

    wire.stop();

3) Python (pytest + unittest.mock): mock an HTTP call

    # pip install pytest requests
    from unittest.mock import patch

    import requests

    def get_user(uid: int) -> dict:
        r = requests.get(f"https://api.example.com/users/{uid}")
        r.raise_for_status()
        return r.json()

    def test_get_user_happy_path():
        with patch("requests.get") as fake_get:
            # The patched requests.get returns a MagicMock; configure only what get_user uses
            fake_resp = fake_get.return_value
            fake_resp.status_code = 200
            fake_resp.json.return_value = {"id": 1, "name": "Ada"}
            fake_resp.raise_for_status.return_value = None

            user = get_user(1)

        assert user["name"] == "Ada"
        fake_get.assert_called_once_with("https://api.example.com/users/1")

4) Python (responses): simulate requests without patching

    # pip install responses
    import requests
    import responses

    @responses.activate
    def test_profiles_endpoint():
        responses.add(
            responses.GET,
            "https://api.example.com/profiles/7",
            json={"id": 7, "vip": True},
            status=200,
        )

        r = requests.get("https://api.example.com/profiles/7")

        assert r.json()["vip"] is True

5) JavaScript (Jest): mock fetch in UI/service code

    // npm i --save-dev jest @jest/globals
    global.fetch = jest.fn();

    test("fetches product by id", async () => {
      fetch.mockResolvedValueOnce({
        ok: true,
        json: async () => ({ id: 99, title: "Keyboard" }),
      });

      // In a real test, call the code under test here; it would call fetch internally
      const res = await fetch("/api/products/99");
      const data = await res.json();

      expect(data.title).toBe("Keyboard");
    });

6) JavaScript (MSW – Mock Service Worker): mock APIs at runtime

    // npm i msw@1 --save-dev
    // (uses the MSW 1.x "rest" API; MSW 2.x replaces it with "http" and "HttpResponse")
    import { setupServer } from "msw/node";
    import { rest } from "msw";

    const server = setupServer(
      rest.get("https://api.example.com/orders/:id", (req, res, ctx) => {
        return res(ctx.status(200), ctx.json({ id: req.params.id, total: 42 }));
      })
    );

    beforeAll(() => server.listen());
    afterEach(() => server.resetHandlers());
    afterAll(() => server.close());

7) Database mocking options

7.1 Java + H2 (in-memory) for repositories

    // testImplementation("com.h2database:h2:2.+")
    import org.springframework.boot.test.autoconfigure.jdbc.AutoConfigureTestDatabase;
    import org.springframework.boot.test.autoconfigure.orm.jpa.DataJpaTest;

    @DataJpaTest
    @AutoConfigureTestDatabase(replace = AutoConfigureTestDatabase.Replace.ANY) // uses H2
    class UserRepositoryTest {
        // @Autowired UserRepository repo;
        // write tests that persist/lookup without touching real DB
    }

7.2 Python + SQLite (in-memory) with SQLAlchemy

    # pip install sqlalchemy
    from sqlalchemy import create_engine, text

    engine = create_engine("sqlite+pysqlite:///:memory:", echo=False)

    with engine.begin() as conn:
        conn.execute(text("CREATE TABLE users(id INTEGER, name TEXT)"))
        conn.execute(text("INSERT INTO users VALUES(1,'Ada')"))

    with engine.connect() as conn:
        row = conn.execute(text("SELECT name FROM users WHERE id=1")).fetchone()
        assert row[0] == "Ada"

8) Proxy-based mocking (Nginx reverse-proxy + fault injection)

nginx.conf (test-only):

    events {}

    http {
        server {
            listen 8080;

            location / {
                proxy_pass http://real-upstream;

                # Tag proxied requests so they can be identified as test traffic
                proxy_set_header X-Test "proxy-mock";

                # Delay & error examples: injecting latency (e.g. 200 ms) requires
                # lua/nginx modules or upstream configs
                # return 500; # simple fault
            }
        }
    }

9) Classloader/bytecode remapping (PowerMock): legacy edge cases

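    // testImplementation("org.powermock:powermock-module-junit4:2.+")
    // testImplementation("org.powermock:powermock-api-mockito2:2.+")
    import static org.junit.Assert.assertEquals;

    import org.junit.Test;
    import org.junit.runner.RunWith;
    import org.mockito.Mockito;
    import org.powermock.api.mockito.PowerMockito;
    import org.powermock.core.classloader.annotations.PrepareForTest;
    import org.powermock.modules.junit4.PowerMockRunner;

    // StaticUtil is the class whose static method is being mocked
    @RunWith(PowerMockRunner.class)
    @PrepareForTest(StaticUtil.class)
    public class StaticUtilTest {
        @Test
        public void mocksStaticMethod() {
            PowerMockito.mockStatic(StaticUtil.class);
            Mockito.when(StaticUtil.dangerousCall()).thenReturn("safe");

            assertEquals("safe", StaticUtil.dangerousCall());
        }
    }

10) API mocks for performance testing (WireMock + JMeter/Locust)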

10.1 WireMock mappings for load-safe endpoints

mappings/get-user.json

    {
      "request": { "method": "GET", "urlPath": "/v1/users/42" },
      "response": {
        "status": 200,
        "headers": { "Content-Type": "application/json" },
        "fixedDelayMilliseconds": 40,
        "jsonBody": { "id": 42, "tier": "gold" }
      }
    }

Run WireMock standalone:

    java -jar wiremock-standalone.jar --port 9090 --root-dir .

Point your JMeter HTTP Requests to http://localhost:9090/v1/users/42 to measure client behavior without hitting real upstreams (great when real APIs are rate-limited or costly).

10.2 Locust hitting a mock API

    # pip install locust
    # Run with: locust -f locustfile.py --host http://localhost:9090
    from locust import HttpUser, task, between

    class UserLoad(HttpUser):
        wait_time = between(0.1, 0.3)

        @task
        def get_user(self):
            self.client.get("/v1/users/42")  # served by the WireMock stub

11) Fault injection patterns to test resilience

WireMock:

    stubFor(get(urlEqualTo("/v1/flaky"))
        .willReturn(aResponse()
            .withStatus(503)
            .withHeader("Retry-After", "1")
            .withBody("{\"error\":\"temporary\"}")));

MSW:

    rest.get("/v1/flaky", (req, res, ctx) =>
      res(ctx.status(503), ctx.json({ error: "temporary" }))
    );

Key Benefits of Mock Testing

Final Thought

Mock testing is powerful, but it isn’t a silver bullet. On its own, it solves isolation problems and accelerates feedback, yet the real value comes when it’s integrated into the full QA and delivery pipeline. In practice, mocks should coexist with integration tests, system tests, and live validation to provide confidence at every level. Treating mock testing as one tool in the engineer’s toolbox, not the entire toolbox, gives you faster feedback while keeping your product grounded in real-world behavior. Used this way, mocks improve reliability and reduce risk without creating blind spots.