IoT is an ecosystem of devices connected through networks that rely on cloud or app services for continuous communication, data exchange, and smart automation. For this ecosystem to work seamlessly 24/7, it depends heavily on IoT testing. Beyond verifying performance, IoT testing safeguards the reliability, security, and integrity of diverse devices, networks, apps, and data. This helps businesses deliver high-quality IoT products and keeps users safe and comfortable.

This tutorial introduces the concept of IoT testing and the architecture layers it applies to. It also outlines the types and benefits of this kind of validation and highlights key best practices and tools for an efficient process.

What Is IoT Software Testing?

IoT testing is part of the broader QA strategy that validates the functionality, performance, and security of the entire IoT environment, including devices with edge components, network protocols, cloud and back-end services, and user dashboards.

Validating IoT systems differs from traditional web or API testing. It covers not only software functionality but also hardware, firmware (including over-the-air (OTA) updates), constrained networks, and power sources. In addition, IoT device testing verifies that communication protocols, such as MQTT, CoAP, HTTP/REST, BLE, Zigbee, Wi-Fi, and cellular, are implemented and used correctly across chains of many interconnected devices.
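
To make the protocol point concrete, consider one check an MQTT test suite typically runs: confirming that subscription filters route messages the way the specification says. Below is a minimal sketch, assuming MQTT 3.1.1/5.0 wildcard semantics. Under those rules, `+` matches exactly one level, and `#` matches the parent level and everything below it. Filters that start with a wildcard never match `$`-prefixed topics such as `$SYS`. The function `topic_matches` is a hypothetical helper, not part of any client library. In practice you would compare its answers against what your broker actually delivers.

```python
def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if an MQTT topic name matches a subscription filter (MQTT 3.1.1/5.0 rules)."""
    f_levels = topic_filter.split("/")
    t_levels = topic.split("/")
    # Filters starting with a wildcard must not match topics beginning with '$' (e.g. $SYS/...)
    if topic.startswith("$") and f_levels[0] in ("+", "#"):
        return False
    for i, level in enumerate(f_levels):
        if level == "#":
            # '#' is only valid as the last level; it matches the parent level and all children
            return i == len(f_levels) - 1
        if i >= len(t_levels):
            return False  # filter is longer than the topic
        if level != "+" and level != t_levels[i]:
            return False  # literal level mismatch ('+' matches any single level)
    # Without '#', filter and topic must have the same number of levels
    return len(f_levels) == len(t_levels)


if __name__ == "__main__":
    print(topic_matches("sensors/+/temperature", "sensors/dev42/temperature"))
```

A table of such filter/topic pairs makes a cheap regression suite to run against both the device firmware's routing logic and the broker configuration.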

The primary objectives of end-to-end IoT validation are ensuring correctness, scalability, and efficient power usage across all interconnected systems. In addition, an effective IoT quality assurance process should align with ISO/IEC 27001, NISTIR 8259, NIST SP 800-213, and the ETSI EN 303 645 consumer IoT security standard. This alignment helps keep the ecosystem, and all the data flowing through it, resilient and protected against potential breaches.

IoT Architecture Layers to Validate

IoT systems span several architecture layers, each with its own testing requirements. The table below covers what to validate in each tier, the issues that commonly arise, and the signals to monitor to detect them:

| Layer | What to Test | Typical Issues | Observability Signals |
| --- | --- | --- | --- |
| Device/Firmware (sensors/actuators, drivers, RTOS) | Sensor accuracy, actuator response, firmware stability, OTA update reliability | Malfunctioning sensors, driver bugs, failed updates, memory leaks | Device logs, sensor data trends, crash dumps, update success/failure rates |
| Edge Gateway (protocol translation, buffering, local rules) | Data forwarding, protocol compatibility, buffering under load, local decision rules | Data loss, inconsistent translations, buffer overflows, rule misfires | Gateway logs, queue metrics, dropped packet counts, rule execution stats |
| Connectivity (broker/ingress, QoS, retries, roaming) | Network stability, throughput, latency, QoS handling, handoffs/roaming | Packet loss, jitter, high latency, unstable roaming, retry storms | Network logs, latency metrics, QoS delivery rates, retry/failure counters |
| Cloud/Application (ingestion, storage, analytics, APIs, dashboards) | Data ingestion reliability, storage consistency, analytics accuracy, API stability, dashboard usability | Data duplication/loss, API errors, inconsistent analytics, UI glitches | API logs, storage metrics, analytics output checks, UI monitoring tools |
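
Several of the observability signals in the table reduce to simple ratios. As an illustration, here is a hedged sketch that turns OTA update events into per-firmware success rates and flags versions that fall below a threshold. The `OtaEvent` record shape and the 98% threshold are assumptions for this example. They are not values from any particular device-management platform.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class OtaEvent:
    """Hypothetical OTA result record, e.g. parsed from device or fleet-manager logs."""
    device_id: str
    firmware: str
    succeeded: bool


def ota_success_rates(events):
    """Return {firmware_version: success_ratio} computed from OtaEvent records."""
    totals = defaultdict(int)
    successes = defaultdict(int)
    for event in events:
        totals[event.firmware] += 1
        if event.succeeded:
            successes[event.firmware] += 1
    return {fw: successes[fw] / totals[fw] for fw in totals}


def flag_regressions(rates, threshold=0.98):
    """Return firmware versions whose OTA success ratio is below the (assumed) threshold."""
    return sorted(fw for fw, ratio in rates.items() if ratio < threshold)


if __name__ == "__main__":
    sample = [
        OtaEvent("d1", "1.2.0", True),
        OtaEvent("d2", "1.2.0", False),
        OtaEvent("d3", "1.3.0", True),
    ]
    print(flag_regressions(ota_success_rates(sample)))
```

The same counting pattern applies to dropped packets per gateway or retry counts per connection. Only the record type and the threshold change.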

Make sure your tests cover every architectural tier so that the system operates as planned end to end.
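
The Connectivity row lists retry storms as a typical issue. When a broker or gateway restarts, thousands of devices may try to reconnect at the same instant. A common mitigation, which tests can then verify, is capped exponential backoff with "full jitter". Under this scheme, each delay is drawn uniformly from zero up to `min(cap, base * 2**attempt)`. The sketch below assumes a 0.5 s base and a 30 s cap, which are illustrative values, not production recommendations. The function name `backoff_delays` is hypothetical.

```python
import random


def backoff_delays(attempts, base=0.5, cap=30.0, rng=None):
    """Full-jitter exponential backoff: delay_n = uniform(0, min(cap, base * 2**n)) seconds."""
    rng = rng or random.Random()
    delays = []
    for n in range(attempts):
        ceiling = min(cap, base * (2 ** n))  # exponential growth, capped
        delays.append(rng.uniform(0, ceiling))  # jitter spreads devices apart in time
    return delays


if __name__ == "__main__":
    # Each simulated device gets its own generator, as real devices would
    fleet_first_delays = [backoff_delays(1, rng=random.Random(seed))[0] for seed in range(5)]
    print(fleet_first_delays)
```

A resilience test can simulate a broker restart and assert that reconnect attempts from a simulated fleet are spread out rather than clustered at the same timestamp.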

Benefits of IoT Testing

The benefits of IoT testing are tangible for both the engineering life cycle and the business. Here's what you gain by incorporating IoT testing into every stage of your SDLC:

Types of IoT Tests

To make sure every aspect of your interconnected ecosystem performs well, we recommend conducting the following types of IoT tests:

Best Practices for IoT Test Automation

If you're aiming for a glitch-free IoT app testing process, follow the proven best practices outlined below:

Shift-Left & CI

Environment Parity & Data Realism

Network Emulation & Resilience

Security Framework Adherence

Data Quality & Telemetry Hygiene

Test Isolation & Fleet Hygiene

OTA Governance

Device Diversity Coverage

Long-Run Endurance & Capacity

Observability & Diagnostics

Top IoT Testing Tools: 2025 Edition

Whether you're starting IoT network testing or IoT device performance testing, specialized tools will help you streamline and speed up the process. We've compiled the following high-performing IoT testing tools:

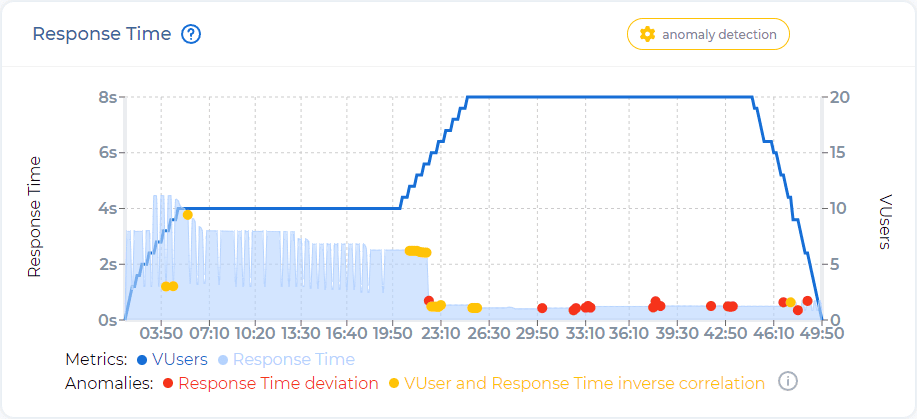

PFLB Platform

The PFLB cloud-based testing solution simulates massive IoT traffic, accurately gauges performance parameters like latency, and efficiently runs IoT scalability tests for the most complex environments.
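
The platform's own scripting interface isn't described here. The following is therefore only a generic, tool-agnostic sketch of what "simulating massive IoT traffic" means: many lightweight virtual devices publish timestamped readings concurrently while the receiving side records end-to-end latency. An in-process `asyncio.Queue` stands in for the broker. The device count, message count, and publish interval are arbitrary assumptions, and the function names are hypothetical.

```python
import asyncio
import time


async def device(device_id, queue, messages, interval):
    """Simulated device: publish `messages` timestamped readings into the queue."""
    for seq in range(messages):
        await queue.put((device_id, seq, time.perf_counter()))
        await asyncio.sleep(interval)


async def ingest(queue, expected, latencies):
    """Stand-in for the broker/ingestion tier: record per-message latency in seconds."""
    for _ in range(expected):
        _device_id, _seq, sent_at = await queue.get()
        latencies.append(time.perf_counter() - sent_at)
        queue.task_done()


async def run_load(devices=200, messages=5, interval=0.01):
    """Run all virtual devices concurrently and return the collected latencies."""
    queue = asyncio.Queue()
    latencies = []
    consumer = asyncio.create_task(ingest(queue, devices * messages, latencies))
    await asyncio.gather(
        *(device(f"dev-{i}", queue, messages, interval) for i in range(devices))
    )
    await consumer
    return latencies


if __name__ == "__main__":
    lat = asyncio.run(run_load())
    print(f"{len(lat)} messages, max latency {max(lat) * 1000:.2f} ms")
```

In a real test, the queue would be replaced by actual MQTT or HTTP clients pointed at the system under test. Latency would then include network and broker time rather than just event-loop scheduling.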

Features:

Pros:

Cons:

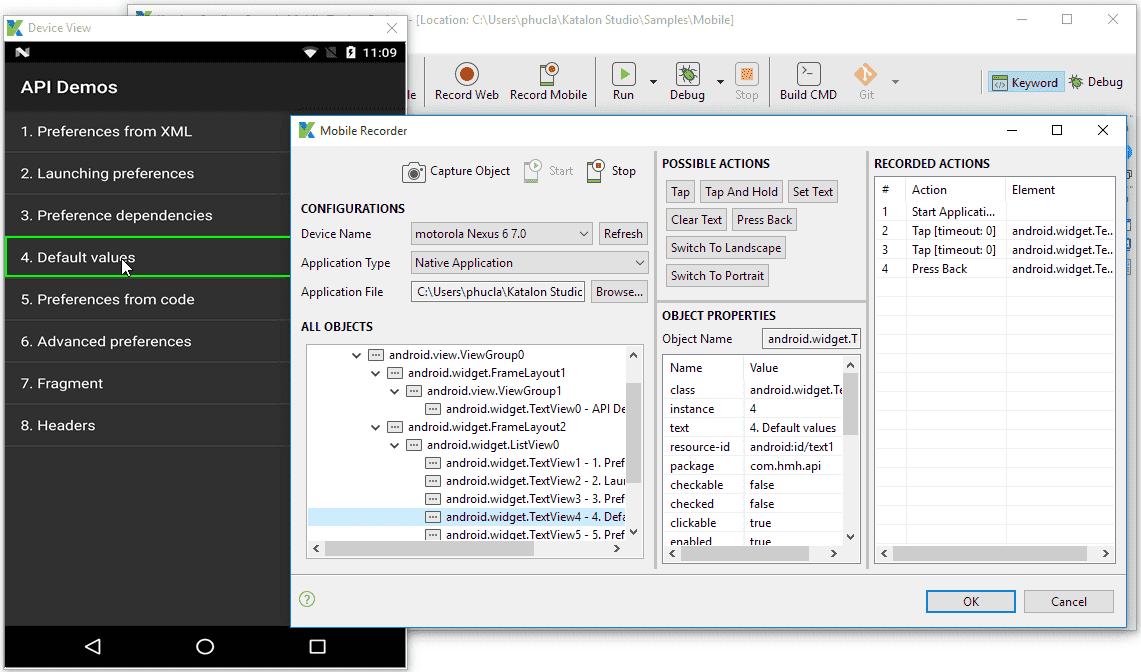

Katalon Studio

This low-code automation tool can be adeptly employed for functional and regression testing of IoT app dashboards and user interfaces across web, desktop, mobile, and API layers.

Features:

Pros:

Cons:

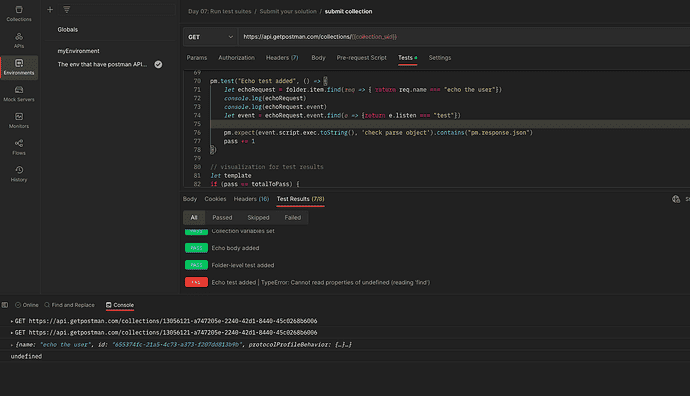

Postman

Postman streamlines IoT API testing by validating MQTT messages and RESTful API interactions, helping ensure reliable communication between IoT systems. For gRPC API performance testing, a dedicated gRPC testing tool is worth considering. REST Assured is another option for code-based REST API testing.
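
Postman test scripts are written in JavaScript. The Python sketch below is not Postman's API, but it shows the same kind of contract check you would attach to an MQTT message or REST response: parse the payload, confirm required fields and types, and range-check values. The field names and the -40 to 125 °C range are hypothetical, chosen only for illustration.

```python
import json

# Hypothetical telemetry contract: field name -> accepted Python types after json.loads
REQUIRED_FIELDS = {"device_id": (str,), "ts": (int,), "temperature_c": (int, float)}


def validate_telemetry(raw: bytes) -> list:
    """Return a list of contract violations for one payload (an empty list means valid)."""
    try:
        msg = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg}"]
    if not isinstance(msg, dict):
        return ["payload is not a JSON object"]
    errors = []
    for name, types in REQUIRED_FIELDS.items():
        if name not in msg:
            errors.append(f"missing field: {name}")
        # bool is a subclass of int in Python, so reject it explicitly
        elif isinstance(msg[name], bool) or not isinstance(msg[name], types):
            errors.append(f"wrong type for {name}: {type(msg[name]).__name__}")
    temp = msg.get("temperature_c")
    if isinstance(temp, (int, float)) and not isinstance(temp, bool):
        if not -40 <= temp <= 125:  # assumed sensor operating range
            errors.append(f"temperature_c out of range: {temp}")
    return errors


if __name__ == "__main__":
    print(validate_telemetry(b'{"device_id": "d1", "ts": 1700000000, "temperature_c": 21.5}'))
```

Returning a list of violations rather than raising on the first one makes failed test reports more informative when a firmware change breaks several fields at once.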

Features:

Pros:

Cons:

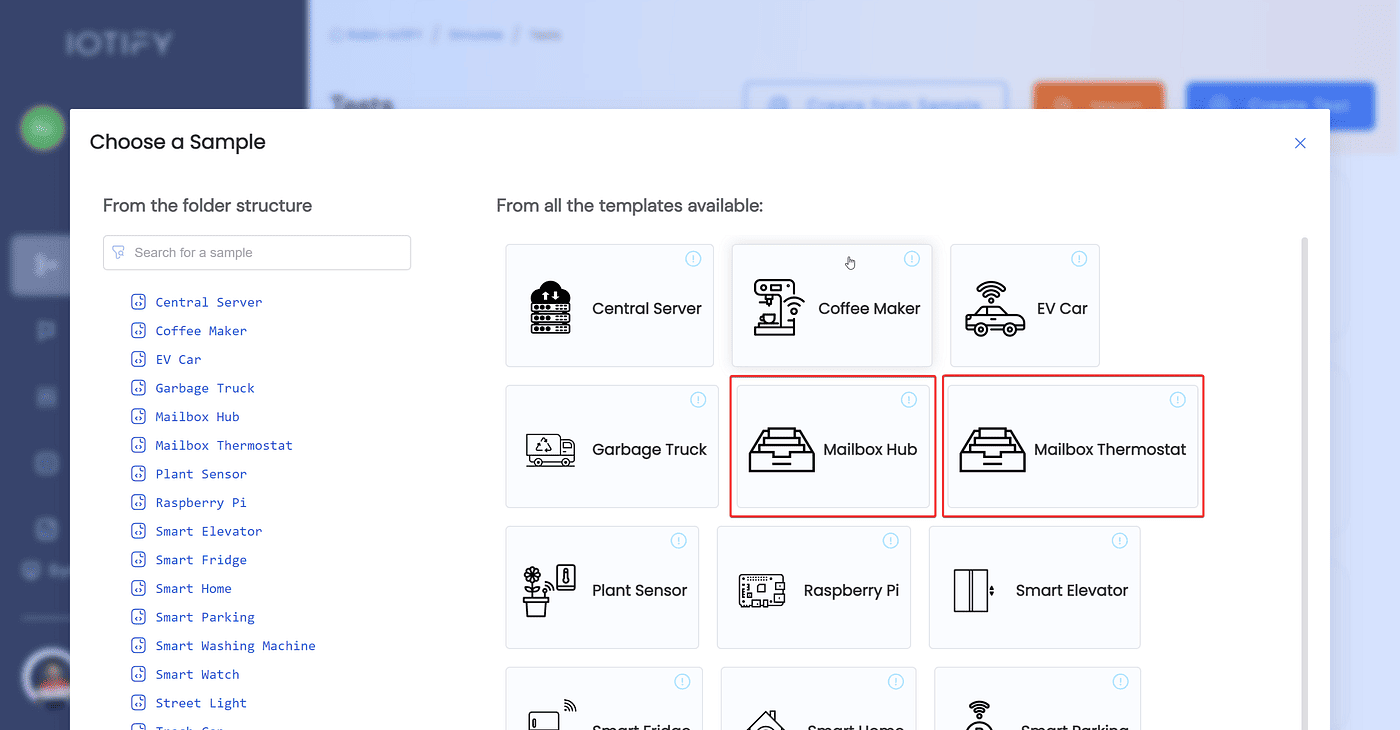

IoTIFY

IoTIFY is a sensible choice if you need to simulate device behavior, communication protocols, and network connections, or to run cloud-based IoT tests under heavy load. The Bevywise IoT Simulator can serve as a complementary option for local or protocol-specific simulation.
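
To illustrate what "simulating device behavior" involves, here is a small, hypothetical model of a temperature sensor in plain Python. It is not IoTIFY's or Bevywise's API. Readings follow a baseline plus linear drift plus Gaussian noise, and a configurable fraction of readings is dropped to mimic a lossy link. All parameter defaults are assumptions. Seeding the random generator keeps test runs reproducible.

```python
import random
from dataclasses import dataclass, field


@dataclass
class SimulatedSensor:
    """Hypothetical temperature sensor model: baseline + linear drift + Gaussian noise."""
    device_id: str
    baseline: float = 22.0
    drift_per_step: float = 0.01
    noise_sd: float = 0.2
    drop_rate: float = 0.05  # probability a reading is lost on the simulated link
    rng: random.Random = field(default_factory=random.Random)
    step: int = 0

    def next_reading(self):
        """Return (seq, value), or None if the simulated network dropped this reading."""
        self.step += 1
        value = self.baseline + self.drift_per_step * self.step + self.rng.gauss(0, self.noise_sd)
        if self.rng.random() < self.drop_rate:
            return None
        return (self.step, round(value, 2))


if __name__ == "__main__":
    sensor = SimulatedSensor("dev-1", rng=random.Random(42))
    print([sensor.next_reading() for _ in range(5)])
```

Because the sequence number advances even when a reading is dropped, downstream tests can tell lost messages apart from a device that simply stopped reporting.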

Features:

Pros:

Cons:

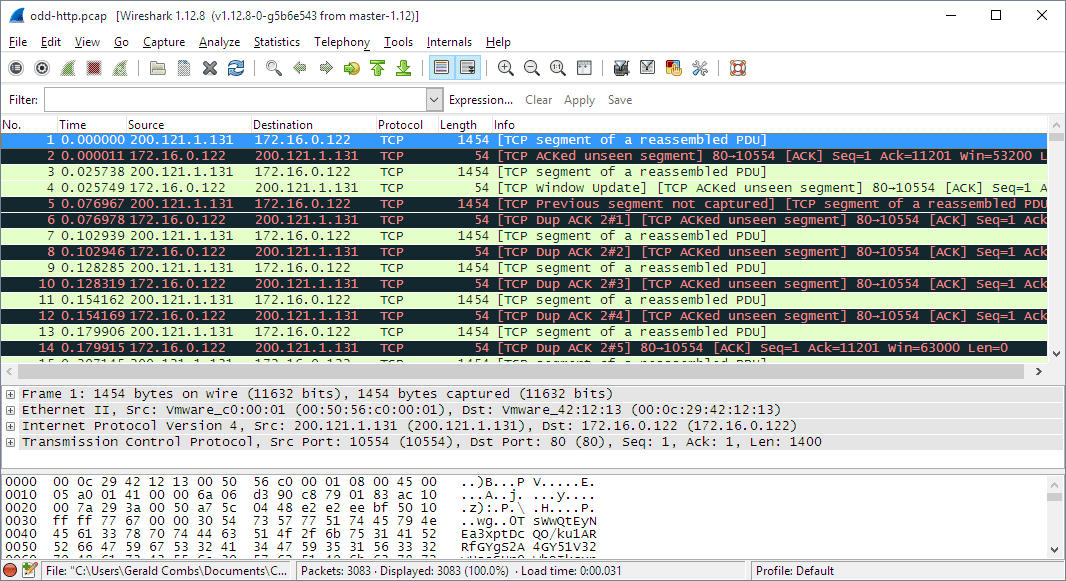

Wireshark

QA teams can use Wireshark to capture and analyze network packets from IoT apps; verify protocols; troubleshoot connectivity issues; and detect packet loss between devices, gateways, and cloud services.
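
Packet captures are most useful for loss detection when each message carries an application-level sequence number. The sketch below assumes your payloads include one (a hypothetical design choice, not something Wireshark adds) and that you have exported the observed values from a capture. With those in hand, a few lines of Python can locate the gaps and compute a loss ratio. The function names are illustrative.

```python
def find_gaps(seqs):
    """Return missing sequence ranges as (first, last) tuples.

    Duplicates and reordering are tolerated by de-duplicating and sorting first.
    """
    observed = sorted(set(seqs))
    gaps = []
    for prev, cur in zip(observed, observed[1:]):
        if cur - prev > 1:
            gaps.append((prev + 1, cur - 1))
    return gaps


def loss_ratio(seqs, expected_first, expected_last):
    """Fraction of expected messages in [expected_first, expected_last] never observed."""
    expected = expected_last - expected_first + 1
    seen = {s for s in seqs if expected_first <= s <= expected_last}
    return 1 - len(seen) / expected


if __name__ == "__main__":
    captured = [1, 2, 3, 6, 7, 7, 10]
    print(find_gaps(captured), loss_ratio(captured, 1, 10))
```

Comparing gap positions captured at the device, the gateway, and the cloud ingress helps pinpoint which hop is losing traffic.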

Features:

Pros:

Cons:

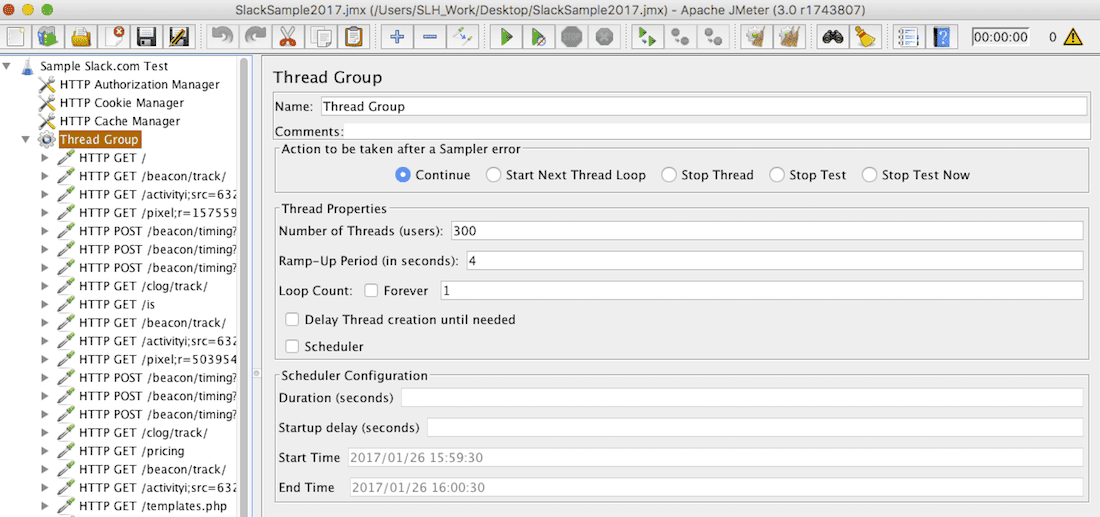

JMeter

Built for performance testing, JMeter supports HTTP out of the box, and MQTT and WebSocket through community plugins. This enables load, latency, and throughput evaluation across IoT environments. Locust and Gatling are more code-driven alternatives.
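
Whichever tool generates the load, the raw samples usually need to be reduced to percentiles and throughput. The sketch below assumes the per-message latencies (in milliseconds) have already been extracted from the tool's results file into a list. It uses the nearest-rank percentile definition, which is one of several common conventions, so numbers may differ slightly from a given tool's own report. The function names are hypothetical.

```python
import math


def percentile(samples, p):
    """Nearest-rank percentile (0 < p <= 100) of a non-empty list of samples."""
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def summarize(latencies_ms, duration_s):
    """Summarize a load-test run: p50/p95/p99 latency and throughput in messages per second."""
    return {
        "p50_ms": percentile(latencies_ms, 50),
        "p95_ms": percentile(latencies_ms, 95),
        "p99_ms": percentile(latencies_ms, 99),
        "throughput_msg_s": len(latencies_ms) / duration_s,
    }


if __name__ == "__main__":
    print(summarize([12, 15, 11, 40, 13, 14, 90, 12], duration_s=2.0))
```

Tail percentiles (p95/p99) matter more than averages for IoT, because a small share of slow acknowledgments is exactly what triggers device-side retries.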

Features:

Pros:

Cons:

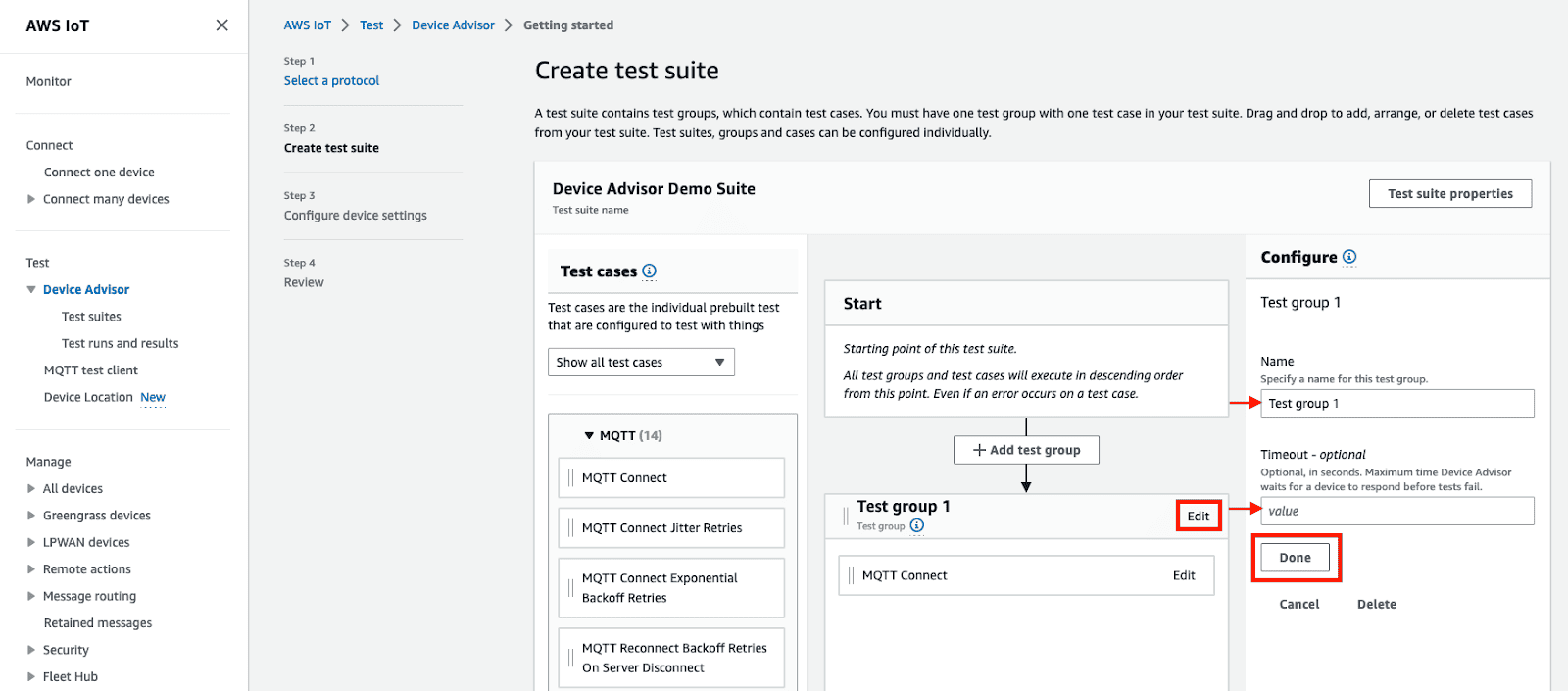

AWS IoT Core Device Advisor

Turn to AWS IoT Core Device Advisor if you plan to validate firmware updates, OTA deployments, and cloud integrations while checking security and compliance across IoT systems. Mender is an alternative for managing OTA updates in your IoT ecosystem.
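
Whatever service runs the OTA tests, two device-side checks come up repeatedly. First, the downloaded image must match the digest published in the update manifest. Second, the device must refuse to install an older version (anti-rollback). The sketch below assumes the manifest provides a hex SHA-256 digest and that versions follow a `MAJOR.MINOR.PATCH` scheme. It is not Device Advisor's or Mender's API. Real deployments should also verify a cryptographic signature, which this sketch omits.

```python
import hashlib
import hmac


def parse_version(version):
    """Parse 'MAJOR.MINOR.PATCH' into a comparable tuple of ints (assumed scheme)."""
    return tuple(int(part) for part in version.split("."))


def check_ota_candidate(image: bytes, expected_sha256: str,
                        current_version: str, candidate_version: str):
    """Return a list of reasons to reject an OTA image (an empty list means acceptable)."""
    problems = []
    digest = hashlib.sha256(image).hexdigest()
    # Constant-time comparison; the manifest digest is assumed to be hex (case-insensitive)
    if not hmac.compare_digest(digest, expected_sha256.lower()):
        problems.append("sha256 mismatch")
    if parse_version(candidate_version) <= parse_version(current_version):
        problems.append("version is not newer (possible rollback)")
    return problems


if __name__ == "__main__":
    image = b"firmware-v1.4.0"
    manifest_digest = hashlib.sha256(image).hexdigest()
    print(check_ota_candidate(image, manifest_digest, "1.3.9", "1.4.0"))
```

Negative cases matter as much as the happy path. A good OTA test suite deliberately serves a corrupted image and a downgraded version, then asserts that the device rejects both and stays on working firmware.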

Features:

Pros:

Cons:

How to Choose the Right Stack

Choosing the right tech stack for smart device testing can be daunting, but getting it right is half the battle. Here are the key criteria to consider:

Why Does a Reliable IoT Testing Partner Matter?

Poor IoT interoperability, system crashes, cyberthreats, long release cycles, and non-compliance are just a few of the consequences of insufficient IoT testing. If you need high-quality IoT validation services, consider a reliable partner who can handle the infrastructure, scalability, security, and performance testing strategy for your interconnected devices.

With 15+ years of experience, PFLB adeptly handles cross-domain IoT projects of varying sizes and complexities, delivering measurable business outcomes, such as faster response times and near-zero system downtime. Check out our case study portfolio to see how we’ve assisted our global clients — and learn how we can help you.

Final Thoughts

According to the latest Statista report, the number of IoT devices is projected to grow from 19.8 billion in 2025 to over 40.6 billion by 2034, so connected device testing will remain important for years to come. As the number of IoT apps grows, so will the demand for high-quality testing services focused on end-to-end security, compliance, and system reliability. Explore PFLB's services to build a healthy, thriving IoT ecosystem for many years to come.