Testing software without the right data is like rehearsing a play with no script; you can’t see the full picture, and mistakes slip by unnoticed. Synthetic test data offers a practical way to solve this problem.

In this article, we’ll explore what it means, how it’s generated, and the situations where it proves most valuable. You’ll also find examples of the main types, their benefits, and a step-by-step look at the process. If real data is limited, too sensitive, or simply unavailable, synthetic test data gives teams the freedom to test thoroughly without compromise.

What is Synthetic Test Data?

Synthetic test data is artificially created information that imitates the structure and characteristics of real-world data without exposing any sensitive details. In simple terms, it’s a safe stand-in for actual production data, designed specifically for testing.

The purpose of synthetic test data is to give QA and development teams a reliable way to validate applications, simulate different scenarios, and check system performance, all without relying on restricted or incomplete real data.

By using synthetic test data, organizations can overcome challenges like data privacy regulations, limited access to production environments, or inconsistent datasets. It ensures testers always have the right input available, whether they’re debugging a new feature, running performance checks, or preparing for compliance audits.

Types of Synthetic Test Data

Different testing needs call for different forms of synthetic test data. Below are the most common types and their applications.

| Type | Purpose | Strengths | Limitations | Example Use Case |

| Sample Data | Provide small, simplified datasets to validate system structure and logic. | Quick to create, easy to use, effective for early-stage testing. | Lacks complexity, not suitable for performance or large-scale tests. | A developer creates 50 fake user accounts to check login and profile modules. |

| Rule-Based Data | Ensure data meets specific patterns, formats, or constraints. | Highly customizable, reflects real-world rules (dates, IDs, currency). | Requires clear rule definitions; may miss unexpected edge cases. | Testing input validation for forms requiring phone numbers or tax IDs. |

| Anonymized Data | Protect sensitive information by masking or replacing identifiers. | Maintains realistic structure; essential for compliance (GDPR, HIPAA, PCI DSS). | Still linked to real data, so anonymization quality must be strong. | Healthcare provider tests appointment booking software with anonymized patient records. |

| Subset Data | Use a representative slice of a larger dataset while reducing size. | Saves time and resources, mirrors production trends, scalable for regression tests. | May omit rare scenarios if not carefully sampled. | An e-commerce site extracts 5% of order history to test a new recommendation engine. |

| Large Volume Data | Stress-test systems under production-like or extreme loads. | Reveals performance bottlenecks, validates scalability, supports capacity planning. | Expensive to generate and maintain; requires strong infrastructure. | A bank simulates 10M synthetic transactions to test payment system resilience. |

Sample Data

Sample data is a simplified set of records generated to reflect the basic structure of production data. It’s often used during early development stages to verify that systems can handle data fields correctly. For example, a developer building a customer portal may use a few dozen fake customer accounts with names, emails, and IDs to check login and profile functions.

Purpose in the testing lifecycle

Sample data is most valuable during prototyping, unit testing, and smoke testing. It helps teams confirm that an application can process expected inputs before moving into more complex testing phases.

Best practices

Rule-Based Data

Rule-based data is generated using predefined logic or constraints that mirror real-world requirements. For instance, a rule might state that “phone numbers must contain 10 digits” or “dates must fall within the last five years.” By following these rules, the generated data matches the formats and ranges an application will process in production.

Purpose in the testing lifecycle

Rule-based data is especially useful for functional testing, where systems need to be validated against strict business logic. It ensures inputs are realistic, making it easier to identify whether the application can handle both valid and invalid cases.

Best practices

Anonymized Data

Anonymized data starts with real production datasets but removes or replaces any sensitive or personally identifiable information (PII). It keeps the overall structure, relationships, and patterns intact while ensuring privacy is protected. For example, real customer names might be swapped with randomized ones, but purchase histories remain realistic.

Purpose in the testing lifecycle

Anonymized data is essential for organizations in regulated industries such as finance, healthcare, or telecom. It allows testers to work with lifelike data without breaching compliance rules like GDPR, HIPAA, or PCI DSS.

Best practices

Subset Data

Subset data is a smaller, representative slice of a larger dataset. It mirrors the structure and diversity of production data but in reduced volume, making it easier to manage during testing. For instance, instead of working with millions of e-commerce transactions, testers might generate a synthetic subset that reflects seasonal shopping trends with just a few thousand records.

Purpose in the testing lifecycle

Subset data is most valuable in regression, integration, and user acceptance testing. It enables teams to test application functions efficiently without the overhead of full-scale production datasets.

Best practices

Large Volume Test Data

Large volume test data consists of massive datasets designed to push systems to their limits. Unlike sample or subset data, this type aims to mimic production-scale or even peak load conditions. For example, testers might generate millions of banking transactions or IoT sensor readings to evaluate how an application performs under heavy demand.

Purpose in the testing lifecycle

Large volume data is critical for performance, stress, and scalability testing. It helps teams uncover bottlenecks, validate infrastructure capacity, and ensure applications can scale reliably before going live.

Best practices

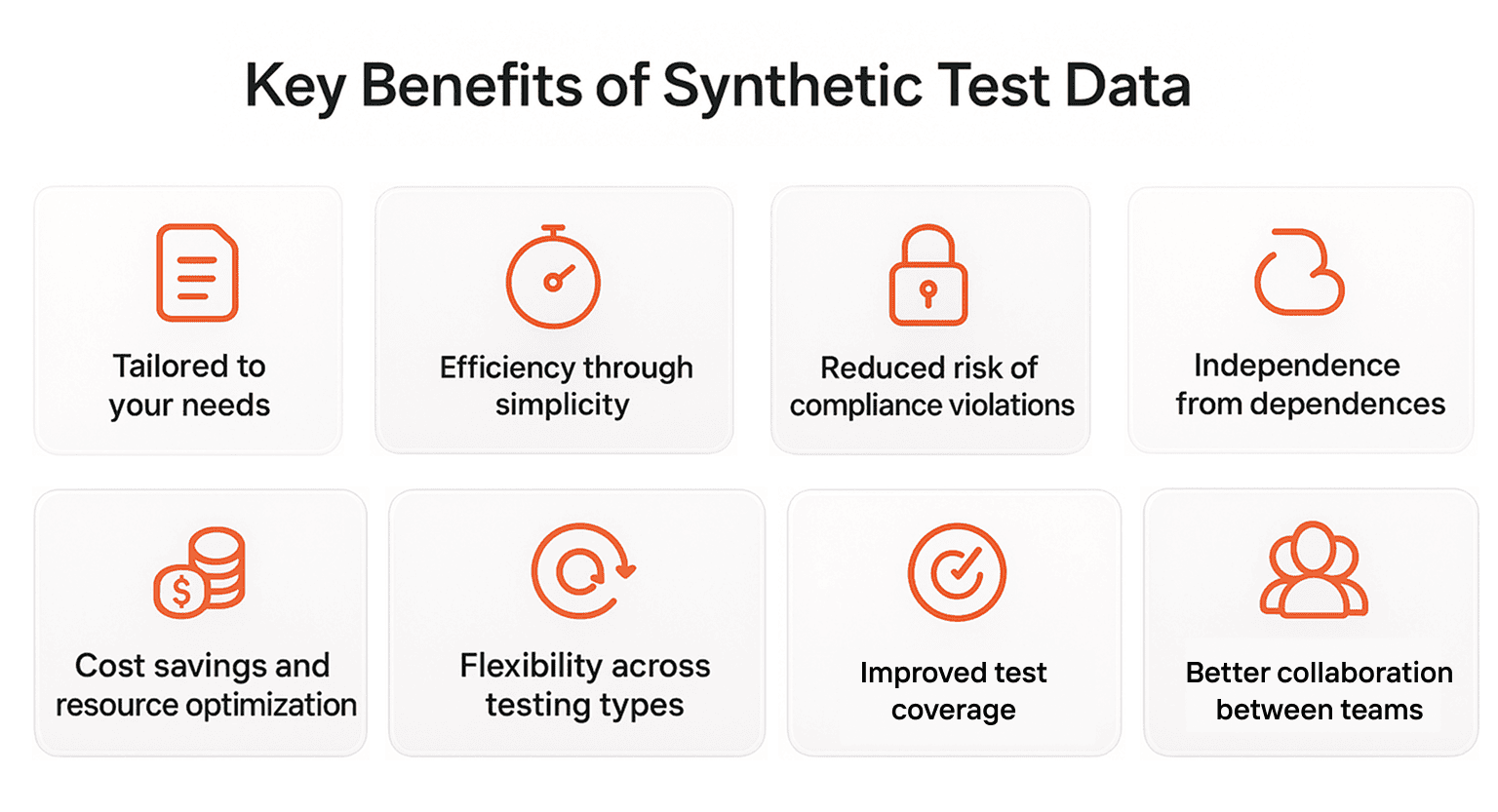

Key Benefits of Synthetic Test Data

Synthetic test data is more than just a workaround for unavailable or sensitive datasets. It plays a strategic role in modern QA and performance testing, helping teams deliver higher-quality software faster and at lower risk:

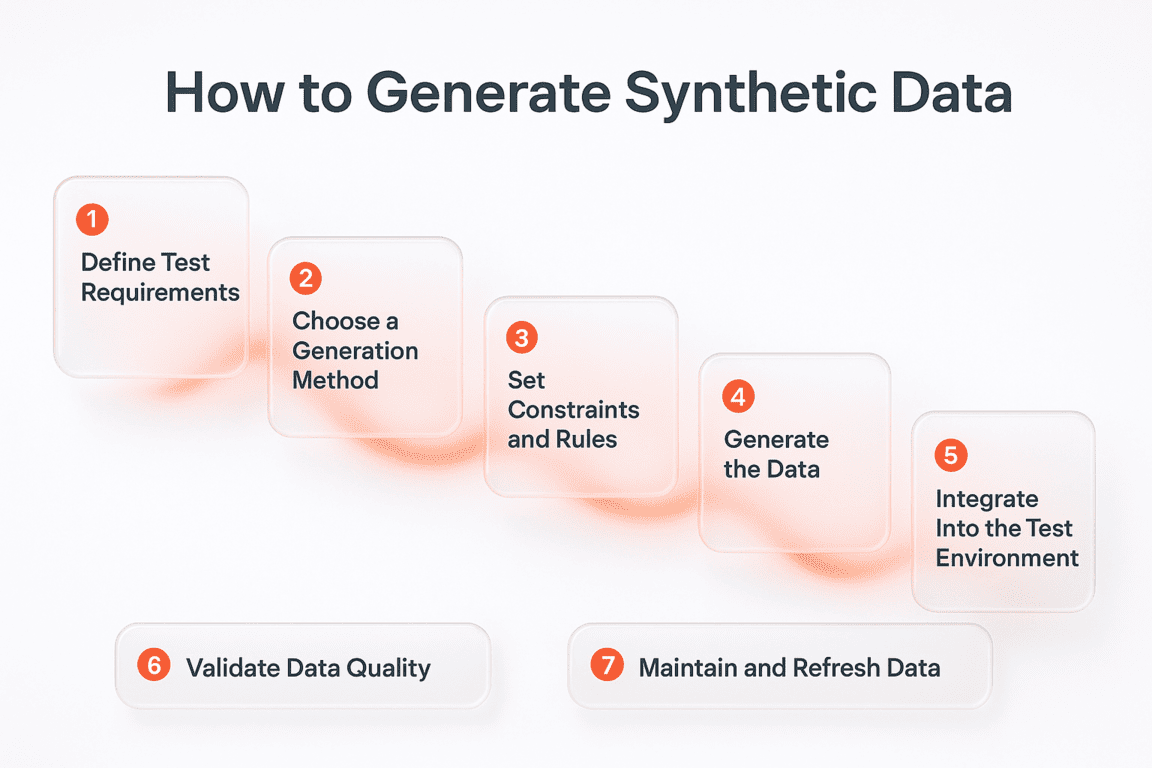

How to Generate Synthetic Data

Creating synthetic test data requires a clear process to make sure the generated data is both realistic and valuable. Below are the key steps involved in synthetic test data generation.

1. Define Test Requirements

Start by identifying the purpose of your test. Are you validating input forms, running a performance benchmark, or simulating compliance checks? Defining requirements ensures that the synthetic data you generate aligns with the specific functions and applications being tested.

2. Choose a Generation Method

There are several ways to generate synthetic test data. Rule-based generation uses constraints such as formats or ranges. Statistical methods create data based on probabilities and distributions. More advanced approaches use AI or machine learning to produce highly realistic datasets that mimic complex real-world patterns. The right method depends on your testing goals.

3. Set Constraints and Rules

Once the method is chosen, apply the rules. For example, dates must fall within a valid range, transaction amounts should reflect realistic limits, or user IDs should follow a specific alphanumeric pattern. Adding constraints makes the synthetic data more reliable and relevant.

4. Generate the Data

With requirements and rules defined, you can generate synthetic test data using dedicated tools or custom scripts. Data masking tool often allow bulk creation of records, randomized values, or integration with other test data management systems. This is where large datasets for performance testing or smaller, targeted datasets for functional testing are created.

5. Validate Data Quality

Even synthetic data needs quality checks. Validation involves ensuring the generated data is consistent, usable, and matches the rules you defined earlier. For example, checking that dates are in the correct format or that all customer IDs are unique. Poor-quality synthetic data can lead to misleading test results.

6. Integrate Into the Test Environment

The generated datasets should now be loaded into your QA or staging environment. This step ensures that developers and testers can interact with synthetic data in the same way they would with production data, without security or compliance risks.

7. Maintain and Refresh Data

Synthetic test data should evolve along with your systems. As applications change, rules may need updating, and datasets may need refreshing to remain relevant. Regular maintenance keeps the process efficient and avoids outdated or irrelevant test inputs.

Final Thoughts

Synthetic test data is a necessity for teams that want to test thoroughly without privacy risks or delays. By creating realistic datasets tailored to business needs, organizations improve test coverage, cut costs, and ensure compliance while keeping projects on schedule.

👉 If you’re ready to see how synthetic data can transform your QA process, connect with PFLB today and start building safer, smarter, and more efficient testing environments.