When an application starts to slow down, users notice it immediately. Pages hesitate to load, buttons lag, animations freeze for a split second, and that’s often enough to make someone close the tab. These issues rarely come from the backend alone. In most cases, the real strain appears in the browser, where scripts, styles, and third-party tags all compete for resources.

UI load testing helps uncover those weak points before they reach production. By simulating how real people interact with your interface, from login and navigation to form submissions and checkout flows, it shows how your front end performs under heavy traffic and different network conditions.

In this guide, we’ll explore the essentials of UI load testing: what it is, how it differs from API and protocol-level testing, why it’s critical for user experience and business metrics, and which UI load testing tools can help you evaluate browser performance more accurately.

What Is UI Load Testing?

UI load testing is the process of simulating how multiple users interact with a web application’s interface at the same time, inside real browsers or browser engines. It recreates real actions like logging in, filling out forms, navigating between pages, or completing transactions, and measures how the user interface behaves when those actions happen concurrently.

Unlike traditional API or protocol-level load testing, which focuses on backend throughput and response times, UI load testing observes performance at the presentation layer, where users actually experience your product. It helps identify front-end slowdowns, rendering delays, JavaScript errors, and layout shifts that wouldn’t appear in backend tests alone.

It’s sometimes referred to as browser-based load testing or client-side performance testing, since it measures how the browser handles traffic and interactions, not just how fast the server responds. You might also see it described as “GUI load testing,” but it’s important to distinguish this from general GUI testing: the latter checks functionality and design accuracy, while UI load testing evaluates performance under load: how stable and responsive the interface remains when user activity scales up.

By testing at the browser level, teams gain a complete view of the end-user experience under real conditions, including scripting complexity, rendering time, and third-party integrations that affect performance.

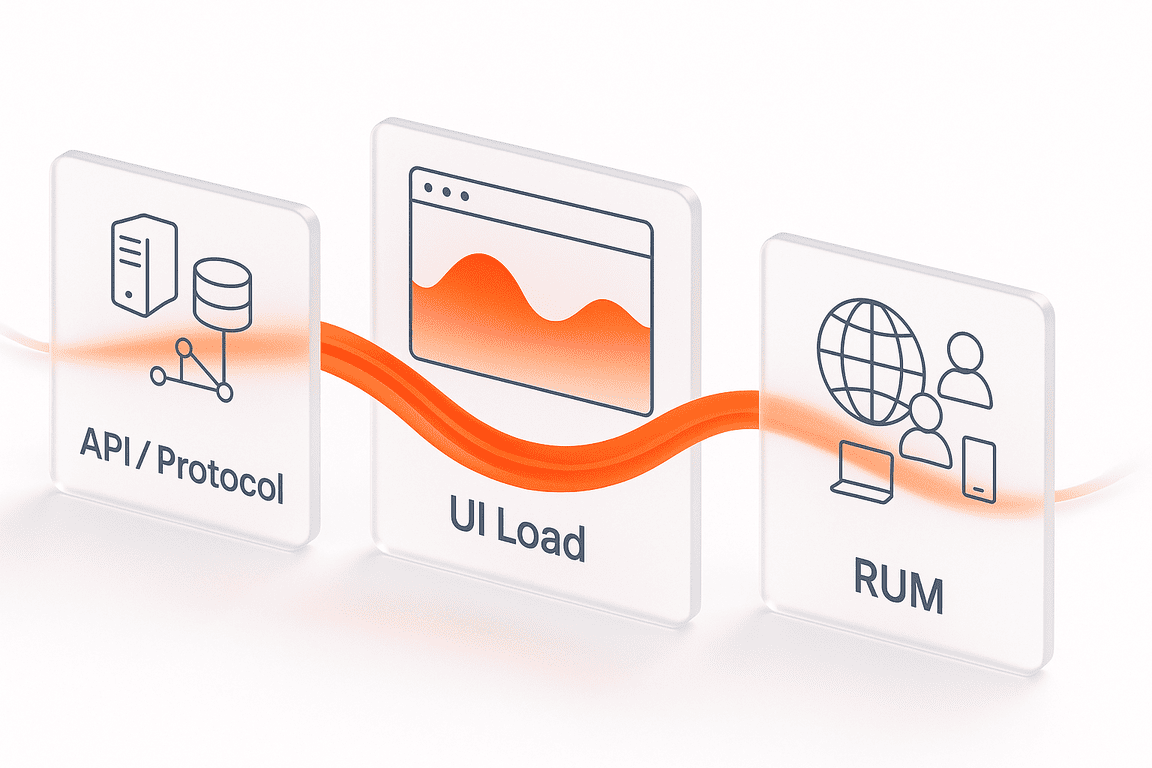

UI Load vs API/Protocol Load vs RUM

Performance testing isn’t a one-size-fits-all practice. Applications are complex systems where browsers, servers, and real users all shape how performance feels. To understand that experience fully, teams usually combine three complementary approaches: UI load testing, API or protocol-level load testing, and Real User Monitoring (RUM).

UI load testing focuses on the front end: what happens inside the browser when hundreds or thousands of users interact at once. It captures real rendering behavior, JavaScript execution, layout shifts, and other elements that shape how the interface responds under pressure. This is where you see issues like slow page transitions or broken UI components that wouldn’t appear in backend-only tests.

API or protocol load testing, on the other hand, isolates the backend. It measures how efficiently servers process requests, manage concurrency, and return responses. These tests help teams validate system scalability, database performance, and network throughput without involving a browser.

RUM (Real User Monitoring) adds the final layer: real-world observation. It collects performance data directly from end users’ browsers and devices in production. Instead of simulations, RUM provides continuous feedback about how your application behaves under actual conditions: different locations, devices, and network speeds.

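Each of these methods answers a different question: UI load testing asks how the interface holds up when many users act at once, API or protocol load testing asks whether the backend can handle the traffic, and RUM asks how real users experience the application in production.

Together, they create a complete performance picture, from infrastructure to user perception.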

| Aspect | UI Load Testing | API/Protocol Load Testing | RUM (Real User Monitoring) |
| --- | --- | --- | --- |
| Focus | End-user interactions in the browser | Server-side performance and scalability | Real user behavior in production |
| Layer | Presentation layer (UI) | Application and network layers | Live environment (client + network) |
| Typical Tools | Browser-based frameworks or headless engines | Protocol simulators and load generators | Analytics and monitoring platforms |
| Metrics | LCP, INP, CLS, JS errors, DOM load time, UI response | Response time, throughput, latency, error rate | Real-world load time, device and network data |
| Strengths | Captures rendering and interactivity issues under load | Validates backend performance and capacity | Reflects actual user experience post-deployment |
| Limitations | Higher resource cost, slower to scale | Doesn’t reflect browser or rendering behavior | Reactive; identifies issues after users are affected |

When combined, these three methods provide full visibility into both system performance and user-perceived performance.

When & Why UI Load Testing Matters

Modern web applications rely on increasingly complex front ends, built with dynamic frameworks, third-party integrations, and personalized content. While backend performance remains important, most performance issues that users actually feel happen in the browser. UI load testing ensures that your product continues to deliver fast, stable, and consistent experiences, even under peak traffic or real-world conditions.

Below are the key reasons why this type of testing matters, both technically and commercially.

1. User Experience & Revenue

When the interface slows, users don’t wait; they leave. Even a one-second delay in page response can measurably reduce conversions. In e-commerce, that’s the difference between a completed order and an abandoned cart.

UI load testing helps prevent those losses by validating the stability of critical journeys such as login, search, add-to-cart, and checkout.

By using browser load testing tools to simulate realistic sessions with hundreds or thousands of concurrent users, testers can measure how these flows perform at the 95th and 99th percentiles (p95/p99): the response times that only 5% or 1% of sessions exceed, which capture the slow tail your customers actually encounter. Keeping those values stable protects both your reputation and your revenue.
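To make the percentile idea concrete, here is a minimal Python sketch of the nearest-rank method applied to hypothetical checkout-step durations. It is one common definition. Many load testing tools interpolate between samples instead, so their p95/p99 values can differ slightly.

```python
import math


def percentile(samples: list[float], p: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least p% of samples at or below it."""
    if not samples:
        raise ValueError("need at least one sample")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank into the sorted list
    return ordered[rank - 1]


# Hypothetical checkout-step durations (ms) from 20 virtual users.
durations_ms = [820, 790, 860, 910, 780, 805, 840, 1200, 870, 815,
                830, 795, 880, 2400, 850, 810, 825, 900, 845, 3100]

print("median:", percentile(durations_ms, 50))  # 840
print("p95:", percentile(durations_ms, 95))     # 2400
print("p99:", percentile(durations_ms, 99))     # 3100
```

The median looks healthy, while p95 and p99 expose the few sessions that were roughly three to four times slower. An average would largely hide that tail.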

2. Front-End Performance Assurance

Core Web Vitals have become key indicators of front-end health. They reflect what users see and feel as they interact with your application.

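Under high load, these metrics can easily drift: images load more slowly, animations freeze, and layouts jump as asynchronous scripts finish. UI performance testing reproduces this stress and helps keep each vital within Google’s “good” thresholds: Largest Contentful Paint (LCP) at or below 2.5 seconds, Interaction to Next Paint (INP) at or below 200 milliseconds, and Cumulative Layout Shift (CLS) at or below 0.1.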

By continuously tracking these metrics during simulated traffic, teams can identify degradation patterns early and adjust rendering logic, caching, or resource prioritization before users notice.
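The classification below is a minimal sketch of how to check measured values against Google’s published Core Web Vitals bands (good, needs improvement, poor). Google assesses these metrics at the 75th percentile of page loads. The baseline and peak-load numbers are hypothetical.

```python
# Google's Core Web Vitals boundaries: (upper bound of "good", upper bound of "needs improvement").
THRESHOLDS = {
    "LCP": (2500.0, 4000.0),  # milliseconds
    "INP": (200.0, 500.0),    # milliseconds
    "CLS": (0.1, 0.25),       # unitless layout-shift score
}


def rate(metric: str, value: float) -> str:
    """Classify a measured value (ideally the p75 of a run) into Google's three bands."""
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"


# Hypothetical p75 values from a baseline run and from a run at peak load.
baseline = {"LCP": 2100.0, "INP": 150.0, "CLS": 0.05}
peak = {"LCP": 3300.0, "INP": 240.0, "CLS": 0.08}

for name in THRESHOLDS:
    print(f"{name}: baseline {rate(name, baseline[name])}, peak {rate(name, peak[name])}")
```

In this made-up example, LCP and INP slip from “good” to “needs improvement” under peak load while CLS holds. That is the kind of drift worth catching before release.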

3. Risk & Cost Reduction

Many production incidents traced to the UI layer come from client-side regressions that backend load tests never reveal, such as JavaScript errors, rendering delays, and layout shifts.

Finding these issues after release is expensive, often involving rollbacks, hotfixes, or customer support overhead. UI load testing reduces that risk by catching them in controlled, reproducible conditions, helping teams fix bottlenecks long before they affect users or revenue.

4. Compliance, Accessibility & SEO Context

Search platforms increasingly emphasize user-centric performance. While Core Web Vitals alone don’t guarantee rankings, they are part of Google’s page experience signals, which reward consistent responsiveness and visual stability.

UI load testing helps you keep up with these evolving expectations by validating that Core Web Vitals stay within their target thresholds even during peak traffic.

This not only improves usability and brand trust but also contributes indirectly to SEO and conversion goals by ensuring your site delivers a stable experience at scale.

5. Strategic Value Across Teams

Finally, UI load testing aligns development, QA, and business teams around a common metric: perceived user performance.

It bridges the gap between backend metrics and real customer impact, making performance optimization measurable, predictable, and business-relevant.

Test Scope & Workflows

A strong UI load testing strategy starts with realistic coverage: testing the right flows, under the right conditions, across the environments where users actually experience your product.

The goal is not to overwhelm the test plan with every possible scenario, but to focus on representative, high-impact workflows and user states that reveal true performance behavior.

1. Representative User Flows

Your tests should reflect how real users move through your application, not synthetic paths that only exist in QA scripts.

The most valuable flows to test are those that affect conversion, engagement, or retention: the core journeys that must remain smooth under pressure.

Common examples include login, search, add-to-cart, form submissions, and checkout.

Each of these workflows should be scripted end-to-end to capture time to interactive, render stability, and visual completeness during concurrent use.
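One way to keep flows modular and measurable is to model each journey as an ordered list of named steps and time every step. The sketch below is framework-agnostic. `run_flow` and `fake_action` are hypothetical helpers, and in a real test each action would drive a browser (for example, through Playwright or Selenium) instead of sleeping.

```python
import time
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class StepResult:
    name: str
    duration_ms: float
    ok: bool
    error: Optional[str] = None


def run_flow(steps: List[Tuple[str, Callable[[], None]]]) -> List[StepResult]:
    """Run named steps in order, timing each; stop at the first failure, as a real user would."""
    results = []
    for name, action in steps:
        start = time.perf_counter()
        try:
            action()
            ok, error = True, None
        except Exception as exc:
            ok, error = False, repr(exc)
        elapsed_ms = (time.perf_counter() - start) * 1000
        results.append(StepResult(name, elapsed_ms, ok, error))
        if not ok:
            break
    return results


def fake_action(delay_s: float, fail: bool = False) -> Callable[[], None]:
    """Hypothetical stand-in for a browser action such as clicking or filling a form."""
    def action() -> None:
        time.sleep(delay_s)
        if fail:
            raise RuntimeError("checkout button not found")
    return action


checkout_flow = [
    ("login", fake_action(0.01)),
    ("search", fake_action(0.02)),
    ("add_to_cart", fake_action(0.01)),
    ("checkout", fake_action(0.01, fail=True)),
    ("confirmation", fake_action(0.01)),
]

for result in run_flow(checkout_flow):
    print(f"{result.name:<12} {result.duration_ms:7.1f} ms  ok={result.ok}")
```

Because each step is just a named callable, the same `login` or `search` step can be reused across many journeys. Per-step timings then feed directly into percentile and SLO checks.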

2. Surfaces and States

User interfaces rarely behave identically across sessions; what a new visitor sees can differ dramatically from what a returning user experiences.

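To reflect that variability, include tests for both first-time visitors (cold cache, no cookies or stored session) and returning users (warm cache, existing session).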

3. Cross-Browser and Device Coverage

No two browser engines render pages exactly alike. Even well-optimized front ends can show different results across browsers due to differences in rendering pipelines, JavaScript engines, and resource scheduling.

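Your testing matrix should include the major browser engines (Chromium-based browsers, Firefox, and Safari/WebKit) on both desktop and mobile devices.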

Testing across browsers and devices helps catch layout shifts, script bottlenecks, and inconsistent UI responsiveness that would otherwise slip through API-level testing.
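A test matrix is easier to generate than to maintain by hand. This sketch assumes the engine names `chromium`, `firefox`, and `webkit` (the browser types Playwright uses). The device list, flow list, and exclusion set are hypothetical placeholders for combinations your tooling or product does not support.

```python
from itertools import product

# Engine names follow Playwright's browser types; devices and flows are hypothetical.
ENGINES = ["chromium", "firefox", "webkit"]
DEVICES = ["desktop", "mobile"]
FLOWS = ["login", "search", "checkout"]

# Hypothetical exclusions: combinations your tooling or product does not support.
EXCLUDED = {("firefox", "mobile")}


def build_matrix() -> list[dict]:
    """Every engine x device x flow combination, minus the excluded engine/device pairs."""
    return [
        {"engine": engine, "device": device, "flow": flow}
        for engine, device, flow in product(ENGINES, DEVICES, FLOWS)
        if (engine, device) not in EXCLUDED
    ]


matrix = build_matrix()
print(len(matrix), "test configurations")
for config in matrix[:3]:
    print(config)
```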

4. Network Conditions

A web application that feels fast on a developer’s gigabit connection might struggle on a mobile network. Network simulation is therefore a key part of UI load testing workflows.

Include throttling profiles that range from fast broadband to typical and slow mobile connections, plus high-latency or lossy links.

Combining browser-level load with controlled network conditions exposes how scripts, images, and third-party tags behave when bandwidth drops, ensuring that even under real-world constraints, the UI remains functional and user-friendly.
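Chromium-based tools can apply throttling through the Chrome DevTools Protocol (CDP) command `Network.emulateNetworkConditions`. It takes latency in milliseconds and throughput in bytes per second. The profile values below are hypothetical placeholders, and the sketch only builds the parameter dictionary, so it runs without a browser.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NetworkProfile:
    name: str
    latency_ms: float  # added round-trip latency
    down_kbps: float   # download bandwidth, kilobits per second
    up_kbps: float     # upload bandwidth, kilobits per second


# Hypothetical values; calibrate them against what your real users experience.
PROFILES = {
    "broadband": NetworkProfile("broadband", 20, 50_000, 10_000),
    "4g": NetworkProfile("4g", 80, 9_000, 1_500),
    "slow-3g": NetworkProfile("slow-3g", 400, 400, 400),
}


def to_cdp_params(profile: NetworkProfile) -> dict:
    """Parameters for CDP's Network.emulateNetworkConditions.

    CDP expects throughput in bytes per second, so kilobits are converted
    assuming 1 kbit = 1000 bits: kbps * 1000 / 8.
    """
    return {
        "offline": False,
        "latency": profile.latency_ms,
        "downloadThroughput": profile.down_kbps * 1000 / 8,
        "uploadThroughput": profile.up_kbps * 1000 / 8,
    }


for profile in PROFILES.values():
    print(profile.name, to_cdp_params(profile))
```

With Playwright’s Python API, for example, you could pass this dictionary to a CDP session created with `context.new_cdp_session(page)` by calling `session.send("Network.emulateNetworkConditions", params)`. CDP sessions are only available in Chromium, so Firefox and WebKit runs need a different throttling mechanism, such as a proxy.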

5. Building the Workflow

For maintainability, define each user flow as a modular script built from reusable components such as login, search, and add-to-cart. Parameterize credentials and datasets, and integrate the tests into your CI/CD pipeline so UI load validation runs automatically with every major release.

A well-structured workflow design allows teams to run short smoke tests daily and longer endurance tests weekly, ensuring that regressions are caught early and fixes can be validated quickly.
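Parameterization can be as simple as giving each virtual user its own credentials and a varied input. In this sketch, the inline CSV, the dummy account names, and `assign_sessions` are all hypothetical. The random generator is seeded so you can replay a failing run with the same data.

```python
import csv
import io
import random
from itertools import cycle

# Hypothetical dummy accounts; real runs would load these from a file or secret store.
ACCOUNTS_CSV = """username,password
load_user_001,dummy-pass-1
load_user_002,dummy-pass-2
load_user_003,dummy-pass-3
"""
SEARCH_TERMS = ["running shoes", "usb-c cable", "desk lamp", "winter jacket", "coffee grinder"]


def assign_sessions(vu_count: int, seed: int = 42) -> list[dict]:
    """Give each virtual user credentials (round-robin) and a varied search term."""
    accounts = list(csv.DictReader(io.StringIO(ACCOUNTS_CSV)))
    rng = random.Random(seed)  # seeded so the same data can be replayed
    account_iter = cycle(accounts)
    sessions = []
    for vu in range(vu_count):
        account = next(account_iter)
        sessions.append({
            "vu": vu,
            "username": account["username"],
            "password": account["password"],
            "search": rng.choice(SEARCH_TERMS),
        })
    return sessions


for session in assign_sessions(5):
    print(session["vu"], session["username"], session["search"])
```

Round-robin assignment reuses accounts once virtual users outnumber them. If your application limits concurrent sessions per account, provision at least one account per concurrent user.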

Types of UI Load Tests

Once you know which workflows to test, the next step is defining how to load them. Different load patterns reveal different aspects of front-end behavior, from sustained reliability to recovery from sudden stress. A good testing plan mixes several types of UI load tests, each uncovering distinct performance characteristics that backend metrics alone can’t capture.
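Load patterns are usually described as a target number of concurrent virtual users over time. The functions below sketch three common shapes. The parameter values are hypothetical, and most load testing tools let you declare equivalent stages in their own configuration instead.

```python
def steady(t: float, users: int) -> int:
    """Constant load for the whole run."""
    return users


def ramp_hold_down(t: float, peak: int, ramp_s: float, hold_s: float) -> int:
    """Ramp linearly to peak, hold it, then ramp back down to zero over ramp_s."""
    if t < 0:
        return 0
    if t < ramp_s:
        return round(peak * t / ramp_s)
    if t < ramp_s + hold_s:
        return peak
    down_elapsed = t - ramp_s - hold_s
    if down_elapsed < ramp_s:
        return round(peak * (1 - down_elapsed / ramp_s))
    return 0


def spike(t: float, base: int, peak: int, start_s: float, length_s: float) -> int:
    """Baseline load with a sudden jump to peak for length_s seconds."""
    return peak if start_s <= t < start_s + length_s else base


# Hypothetical soak: 5-minute ramp to 500 users, 30-minute hold, 5-minute ramp down.
for t in range(0, 2401, 300):
    print(f"t={t:>4}s  soak={ramp_hold_down(t, 500, 300, 1800):>3}  "
          f"spike={spike(t, 100, 1000, 600, 60):>4}  steady={steady(t, 200)}")
```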

Steady-State Tests: Measuring Consistency Under Normal Traffic

Steady-state testing is the foundation of every performance plan. It maintains a constant level of user activity to observe how the interface behaves during routine, expected load: the kind of traffic your site handles every day.

It’s particularly effective for validating user-facing KPIs, such as p95 step duration, error rate, and Core Web Vitals, across consecutive time windows.

Because it uses realistic pacing rather than stress conditions, steady-state testing helps distinguish random outliers from genuine bottlenecks in rendering or event handling.
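Evaluating KPIs per time window, rather than over the whole run, shows whether a slow moment was a single outlier or a sustained problem. This minimal sketch groups hypothetical `(seconds, duration_ms)` samples into fixed 60-second windows. It then flags windows whose p95 exceeds an assumed 1-second target.

```python
import math
from collections import defaultdict


def p95(values: list[float]) -> float:
    """Nearest-rank 95th percentile."""
    ordered = sorted(values)
    return ordered[math.ceil(95 * len(ordered) / 100) - 1]


def windowed_p95(samples: list[tuple[float, float]], window_s: float) -> dict[int, float]:
    """Bucket (seconds_since_start, duration_ms) samples into fixed windows; p95 per window."""
    buckets: dict[int, list[float]] = defaultdict(list)
    for ts, duration in samples:
        buckets[int(ts // window_s)].append(duration)
    return {window: p95(values) for window, values in sorted(buckets.items())}


# Hypothetical steady-state samples for a search step: (seconds since start, duration in ms).
samples = [(5, 640), (20, 700), (48, 690), (65, 720), (90, 1450),
           (110, 760), (130, 680), (170, 710)]

per_window = windowed_p95(samples, window_s=60)
slow_windows = [w for w, value in per_window.items() if value > 1000]  # assumed 1 s target
print(per_window)    # {0: 700, 1: 1450, 2: 710}
print(slow_windows)  # [1]
```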

Ramp, Soak, and Endurance Tests: Uncovering Slow Degradation

Some issues don’t appear immediately but build up over time. Ramp and soak tests increase load step by step, sustain it for hours, and then taper it down to detect performance drift.

In browser environments, that drift often shows up as steadily growing memory and CPU usage, more long tasks, and response times that creep upward as sessions age.

These tests give teams a realistic picture of how a user interface holds up over long sessions, such as dashboards or apps that stay open for hours, a scenario common in SaaS, fintech, and analytics tools.
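Drift is easier to judge as a trend than by comparing start and end values by eye. This sketch fits an ordinary least-squares line to hypothetical JavaScript heap readings taken every 30 minutes and reports the growth rate per hour. The 5 MB/hour budget is an assumption, not a standard.

```python
def slope_per_hour(samples: list[tuple[float, float]]) -> float:
    """Ordinary least-squares slope of (minutes, value) samples, converted to units per hour."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_x = sum(x for x, _ in samples) / n
    mean_y = sum(y for _, y in samples) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in samples)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in samples)
    if sxx == 0:
        raise ValueError("samples must span more than one timestamp")
    return sxy / sxx * 60


# Hypothetical JS heap readings (minutes into the soak test, MB) taken every 30 minutes.
heap_mb = [(0, 120), (30, 128), (60, 135), (90, 144), (120, 151)]
growth = slope_per_hour(heap_mb)
LEAK_BUDGET_MB_PER_HOUR = 5.0  # assumed budget, not a standard value

print(f"heap growth: {growth:.1f} MB/hour")
if growth > LEAK_BUDGET_MB_PER_HOUR:
    print("steady growth above budget: investigate possible memory leaks")
```

A fitted slope is less sensitive to a single noisy reading than a simple last-minus-first difference. That matters when garbage collection makes heap readings jump around.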

Spike and Stress Tests: Testing Resilience and Recovery

While ramp tests show endurance, spike tests reveal agility: how quickly the UI responds to sudden bursts of traffic or user interaction. Stress tests go further, deliberately exceeding capacity limits to observe recovery behavior once pressure is reduced.

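Key insights from these tests include how far response times and error rates degrade during the surge, which UI components break first, and how long the interface takes to return to its baseline once load drops.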

This data is critical for applications expecting event-driven surges, such as ticketing systems, sales campaigns, or media portals.
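You can quantify recovery as the time, after the spike ends, until a metric settles back within a tolerance of its baseline and stays there. The sketch below assumes a 10% tolerance and uses hypothetical p95 samples. Both are placeholders to tune for your application.

```python
from typing import List, Optional, Tuple


def recovery_time(samples: List[Tuple[float, float]], baseline: float,
                  spike_end_s: float, tolerance: float = 0.10) -> Optional[float]:
    """Seconds after spike_end_s until the metric re-enters baseline*(1+tolerance) and stays there.

    Returns None if the metric never settles within the observed samples.
    """
    limit = baseline * (1 + tolerance)
    after = sorted((t, v) for t, v in samples if t >= spike_end_s)
    for i, (t, _) in enumerate(after):
        if all(v <= limit for _, v in after[i:]):
            return t - spike_end_s
    return None


# Hypothetical p95 page-transition times (ms) sampled every 15 s after a spike ending at t=600 s.
baseline_p95 = 800.0
after_spike = [(600, 2600), (615, 1900), (630, 1100), (645, 860), (660, 840), (675, 790)]

print("recovered after", recovery_time(after_spike, baseline_p95, spike_end_s=600), "s")
```

Requiring the metric to *stay* within bounds avoids declaring recovery on a single lucky sample while the system is still oscillating.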

Geo-Distributed Tests: Seeing Performance from a Global View

Users experience your application differently depending on where they are. A smooth page in New York might feel sluggish in Singapore due to latency, caching gaps, or script delivery delays.

Running geo-distributed UI load tests helps pinpoint those differences. Testing from multiple regions lets you compare latency, cache effectiveness, and script delivery times across locations.

This insight helps optimize content delivery, adjust caching policies, and maintain a consistent experience for a global user base, something backend-only tests can’t measure.
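Comparing regions is simplest as a ratio against a reference location. This sketch computes each region’s p95 LCP relative to a chosen reference. The region names and sample values are hypothetical.

```python
import math


def p95(values: list[float]) -> float:
    """Nearest-rank 95th percentile."""
    ordered = sorted(values)
    return ordered[math.ceil(95 * len(ordered) / 100) - 1]


def regional_ratios(by_region: dict[str, list[float]], reference: str) -> dict[str, float]:
    """Each region's p95 divided by the reference region's p95 (1.0 means parity)."""
    ref = p95(by_region[reference])
    return {region: round(p95(values) / ref, 2) for region, values in by_region.items()}


# Hypothetical LCP samples (ms) collected from load generators in three regions.
lcp_by_region = {
    "us-east": [1800, 1900, 2000, 2100],
    "eu-west": [2000, 2100, 2300, 2400],
    "ap-southeast": [2900, 3100, 3400, 3800],
}

print(regional_ratios(lcp_by_region, reference="us-east"))
# {'us-east': 1.0, 'eu-west': 1.14, 'ap-southeast': 1.81}
```

A ratio near 1.0 means parity. Here the hypothetical `ap-southeast` region is roughly 80% slower than the reference, which would point to CDN coverage or asset delivery as the first thing to investigate.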

Cross-Browser and Device Tests: Ensuring Parity Across Environments

Modern front ends depend heavily on browser behavior. Each rendering engine interprets code slightly differently, and performance can shift dramatically between desktop and mobile hardware.

Cross-browser and device testing validates whether your UI performs equally well across all supported platforms by comparing Core Web Vitals, step durations, and error rates for the same flows on each browser and device.

These tests expose inconsistencies that often hide in production; a layout that shifts only in Safari, a script that lags on Android, or a form that fails under mobile input throttling.

When combined, these test types give teams a 360° view of UI resilience. Steady-state testing defines the baseline; endurance testing shows how that baseline evolves; stress testing reveals limits; and geo-distributed and cross-browser testing confirm that performance remains reliable across users, regions, and devices. Together, they form the backbone of a realistic front-end performance strategy.

Metrics & SLOs for UI Load

UX Timing: These metrics capture how users actually experience performance. Core Web Vitals (LCP, INP, and CLS) show visual load speed, interactivity, and stability, while TTFB, TTI, and step or transaction duration measure how quickly pages respond and become usable under load.

Reliability: Reliability metrics focus on whether the interface stays functional and visually correct during testing. Tracking UI error rates, JavaScript exceptions, failed requests, and DOM or screenshot differences helps confirm that elements render properly and workflows don’t break under concurrency.

Resource Health: A smooth experience depends on how efficiently the browser handles your application. Monitoring CPU and memory usage, long tasks, and network errors highlights whether scripts, animations, or assets consume excessive resources or block interactivity.

SLO Targets: Service Level Objectives (SLOs) define what “good performance” means in measurable terms. Typical benchmarks include maintaining p95/p99 response times within target limits, keeping error rates within the allowed budget, and minimizing performance variance near the end of a test run.
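SLOs are easiest to enforce when you write them down as data and check them automatically after each run. This minimal sketch compares a run summary against hypothetical p95/p99 limits and a 1% error budget. The class and field names are illustrative and not taken from any specific tool.

```python
from dataclasses import dataclass


@dataclass
class RunSummary:
    p95_ms: float
    p99_ms: float
    requests: int
    errors: int


@dataclass
class Slo:
    p95_ms: float
    p99_ms: float
    error_budget: float  # maximum allowed error ratio, e.g. 0.01 for 1%


def evaluate(run: RunSummary, slo: Slo) -> list[str]:
    """Return human-readable SLO violations; an empty list means the run passed."""
    violations = []
    if run.p95_ms > slo.p95_ms:
        violations.append(f"p95 {run.p95_ms:.0f} ms > {slo.p95_ms:.0f} ms")
    if run.p99_ms > slo.p99_ms:
        violations.append(f"p99 {run.p99_ms:.0f} ms > {slo.p99_ms:.0f} ms")
    # A run with no requests is treated as a failure rather than a pass.
    error_rate = run.errors / run.requests if run.requests else 1.0
    if error_rate > slo.error_budget:
        violations.append(f"error rate {error_rate:.2%} > budget {slo.error_budget:.2%}")
    return violations


# Hypothetical targets and results for a checkout journey.
slo = Slo(p95_ms=2500, p99_ms=4000, error_budget=0.01)
run = RunSummary(p95_ms=2300, p99_ms=4400, requests=12_000, errors=90)
print(evaluate(run, slo))  # ['p99 4400 ms > 4000 ms']
```

In a CI pipeline, a non-empty violation list would typically become a non-zero exit code (for example, `sys.exit(1)`) so the build fails.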

Reporting: Once tests complete, structured reporting turns data into insight. Comparing results across runs, visualizing trends, and reviewing exportable artifacts like HAR files, traces, or screenshots help teams detect regressions early and track long-term performance improvements.
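Run-to-run comparison can start with a simple relative check against a stored baseline. The sketch below assumes lower is better for every metric and treats changes within ±10% as noise. The tolerance and the metric names are hypothetical and should reflect how much your own runs normally vary.

```python
def compare_runs(baseline: dict[str, float], current: dict[str, float],
                 tolerance: float = 0.10) -> dict[str, str]:
    """Label each baseline metric as regressed, improved, stable, or missing.

    Assumes lower is better for every metric (durations, error counts, CLS).
    """
    verdicts = {}
    for metric, base in baseline.items():
        if metric not in current:
            verdicts[metric] = "missing"
            continue
        cur = current[metric]
        if base == 0:
            change = 0.0 if cur == 0 else float("inf")
        else:
            change = (cur - base) / base
        if change > tolerance:
            verdicts[metric] = "regressed"
        elif change < -tolerance:
            verdicts[metric] = "improved"
        else:
            verdicts[metric] = "stable"
    return verdicts


# Hypothetical summaries from last week's baseline and today's run.
baseline = {"lcp_p95_ms": 2200, "inp_p95_ms": 180, "js_errors": 4, "cls_p95": 0.05}
current = {"lcp_p95_ms": 2650, "inp_p95_ms": 175, "js_errors": 2}
print(compare_runs(baseline, current))
```

Flagging a metric as `missing` matters as much as flagging a regression, because a silently dropped measurement can hide the very problem the run was meant to catch.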

Test Data & Environment

Data realism matters more than scale. Use realistic user accounts, different payload sizes, and varied search or catalog inputs rather than the same repeated query. This diversity mimics real traffic and prevents cache bias. Keep tests idempotent so they can run repeatedly without changing data or polluting the system.

Environment parity ensures your results match what users actually experience. Run tests in conditions that mirror production: the same CDN settings, caching rules, compression methods, and image formats. Don’t forget smaller but important details like consent banners, analytics tags, and feature flags: they all affect front-end load time and stability.

Isolation keeps tests clean. Each run should use its own namespace, cookies, and dataset. Automate setup and teardown so previous sessions don’t interfere with the next. That way, every result reflects the application’s behavior, not leftover state from a previous test.
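A per-run namespace makes isolation and teardown mechanical. Every entity a run creates carries the run’s ID, and cleanup deletes only what matches it. `LoadRun` and its naming scheme are hypothetical.

```python
import uuid
from dataclasses import dataclass, field


@dataclass
class LoadRun:
    """Hypothetical per-run namespace: everything a run creates carries its run ID."""
    run_id: str = field(default_factory=lambda: uuid.uuid4().hex[:8])

    @property
    def prefix(self) -> str:
        return f"lt-{self.run_id}"

    def name(self, kind: str, vu: int) -> str:
        """Namespaced identifier for a test entity, e.g. a user account or cart."""
        return f"{self.prefix}-{kind}-{vu}"

    def owns(self, identifier: str) -> bool:
        """Teardown guard: only entities created by this run may be deleted."""
        return identifier.startswith(self.prefix + "-")


run = LoadRun()
test_users = [run.name("user", vu) for vu in range(3)]
print(test_users)
print(all(run.owns(u) for u in test_users))  # True
```

For cookies and storage, browser frameworks typically provide isolated contexts. In Playwright, each `browser.new_context()` starts with its own cookies and local storage, so giving every virtual user a fresh context complements the data namespace.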

Best Practices for UI Load Testing

Establishing strong practices ensures that UI load testing produces reliable, repeatable, and meaningful results. Beyond the tools themselves, success depends on when tests are run, how scripts are written, and how data is observed and secured. The following UI load testing best practices help teams build mature testing workflows that scale with product complexity.

Shift-Left & CI

Performance testing shouldn’t be a late-stage activity. Integrating it early in the development process, especially in CI/CD pipelines, helps detect issues before they reach production. Regular runs, automated gates, and standardized reports turn UI load testing into a continuous performance monitor rather than an occasional check.

Stable & Maintainable Scripts

Unstable or brittle scripts waste more time than they save. Well-structured, maintainable test scripts allow teams to expand coverage without constant rework. Consistent locators, page-object models, and clear timeout strategies make the testing framework easier to scale and debug.
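Fixed sleeps either waste time or fail intermittently. An explicit wait instead polls for a condition and fails with a clear error once a timeout expires. Browser frameworks ship their own versions (Playwright’s auto-waiting locators, Selenium’s `WebDriverWait`), so this framework-agnostic `wait_until` helper is only a sketch of the idea.

```python
import time
from typing import Callable


def wait_until(condition: Callable[[], bool], timeout_s: float = 10.0,
               poll_s: float = 0.1) -> float:
    """Poll condition until it returns True; return elapsed seconds or raise TimeoutError."""
    start = time.monotonic()
    while True:
        if condition():
            return time.monotonic() - start
        if time.monotonic() - start >= timeout_s:
            raise TimeoutError(f"condition not met within {timeout_s} s")
        time.sleep(poll_s)


# Simulated element that becomes visible 0.3 s from now (a stand-in for a real DOM check).
visible_at = time.monotonic() + 0.3
elapsed = wait_until(lambda: time.monotonic() >= visible_at, timeout_s=2.0, poll_s=0.05)
print(f"element ready after {elapsed:.2f} s")
```

Under load, rendering naturally slows. A generous timeout with short polling keeps scripts fast when the page is quick and avoids false failures when it is merely slow.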

Network Emulation & Resilience

Real-world users operate under diverse network conditions, from stable Wi-Fi to unstable mobile connections. Emulating these conditions during UI load testing reveals how the interface handles slow, lossy, or fluctuating links, and whether it can recover gracefully.

Security & Privacy Hygiene

Load testing should never compromise data integrity or user privacy. Maintaining secure handling of credentials and masking sensitive data ensures compliance and builds trust. Security also extends to verifying that authentication and session management behave correctly under stress.
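Recorded artifacts such as HAR files can capture tokens, cookies, and form data. This sketch masks a HAR-style entry before it is stored. The header list covers common credential headers, while the JSON field names in `SENSITIVE_FIELDS` are hypothetical and should match your own payloads.

```python
import copy
import json

# Common credential headers (compared case-insensitively).
SENSITIVE_HEADERS = {"authorization", "cookie", "set-cookie", "x-api-key"}
# Hypothetical JSON field names; replace with the ones your payloads actually use.
SENSITIVE_FIELDS = {"password", "card_number", "cvv", "token"}


def mask_headers(headers: list[dict]) -> list[dict]:
    """Replace the value of sensitive HAR-style {"name", "value"} headers."""
    return [{**h, "value": "***"} if h["name"].lower() in SENSITIVE_HEADERS else h
            for h in headers]


def mask_fields(data):
    """Recursively replace values of sensitive keys in parsed JSON."""
    if isinstance(data, dict):
        return {k: "***" if k.lower() in SENSITIVE_FIELDS else mask_fields(v)
                for k, v in data.items()}
    if isinstance(data, list):
        return [mask_fields(item) for item in data]
    return data


def mask_entry(entry: dict) -> dict:
    """Return a masked copy of a HAR-style entry; the original is left untouched."""
    masked = copy.deepcopy(entry)
    for part in ("request", "response"):
        if part in masked:
            masked[part]["headers"] = mask_headers(masked[part].get("headers", []))
    post = masked.get("request", {}).get("postData")
    if post and post.get("mimeType", "").startswith("application/json"):
        post["text"] = json.dumps(mask_fields(json.loads(post["text"])))
    return masked


entry = {
    "request": {
        "headers": [{"name": "Authorization", "value": "Bearer abc123"},
                    {"name": "Accept", "value": "text/html"}],
        "postData": {"mimeType": "application/json",
                     "text": '{"user": "load_user_001", "password": "dummy"}'},
    },
    "response": {"headers": [{"name": "Set-Cookie", "value": "sid=42"}]},
}
print(json.dumps(mask_entry(entry), indent=2))
```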

Observability First

Visibility is key to understanding why performance changes. Comprehensive observability connects metrics, logs, and traces, enabling quick root-cause analysis when results deviate from expectations. By retaining artifacts and correlating data across systems, teams gain a complete picture of front-end behavior under load.
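One way to connect a slow browser step to backend logs and traces is to send a W3C Trace Context `traceparent` header with the test’s requests. You then record its trace ID next to the step timing. This assumes the backend is instrumented to honor the header (OpenTelemetry, for instance, propagates W3C Trace Context by default). `new_traceparent` is a hypothetical helper.

```python
import re
import secrets

# traceparent = version-traceid-parentid-flags (W3C Trace Context, version 00).
TRACEPARENT_RE = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def new_traceparent(sampled: bool = True) -> str:
    """Build a random traceparent header value; all-zero IDs are invalid, so reject them."""
    trace_id = secrets.token_hex(16)   # 16 bytes -> 32 lowercase hex chars
    parent_id = secrets.token_hex(8)   # 8 bytes -> 16 lowercase hex chars
    while int(trace_id, 16) == 0:
        trace_id = secrets.token_hex(16)
    while int(parent_id, 16) == 0:
        parent_id = secrets.token_hex(8)
    flags = "01" if sampled else "00"
    return f"00-{trace_id}-{parent_id}-{flags}"


header = new_traceparent()
trace_id = TRACEPARENT_RE.match(header).group(1)
# Store trace_id next to the step timing so a slow step can be looked up in the tracing backend.
print(header, trace_id)
```

With Playwright, for example, `context.set_extra_http_headers({"traceparent": header})` would attach the header to every request from that context. Note that this also sends it to third-party domains.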

Final Thoughts

UI load testing fills the gap that traditional API or protocol-level testing can’t cover. It validates what users actually experience: how pages render, respond, and stay stable under concurrent use, across browsers, devices, and real-world network conditions. When combined with backend testing, it forms a complete view of performance from infrastructure to interface.

Teams that invest in the right foundations (reliable selectors, realistic network simulations, and strong observability) gain far more than performance metrics. They gain confidence that their product will stay smooth, responsive, and resilient no matter how traffic fluctuates.

For organizations looking to plan, automate, or scale browser-level load testing, PFLB provides the tools and expertise needed to turn these practices into measurable results, helping teams keep performance consistent where it matters most: in the user’s experience.