Chainguard specializes in highly secure Docker container images that are regularly updated, tightly managed, and designed to minimize vulnerabilities. While their reputation for security is well established, Chainguard wanted to make sure their containers also deliver competitive performance. After all, strong security is crucial, but it shouldn’t slow you down.

Client Goal

Chainguard needed clear, objective proof that their Docker containers don’t compromise on speed or efficiency. They wanted transparent benchmarks against popular, widely used images to validate performance, not just security. To make the results credible, Chainguard looked for an independent, unaffiliated expert to carry out detailed, unbiased testing.

Why Chainguard Chose PFLB

Chainguard needed a partner they could trust completely, one that is neutral, thorough, and dedicated to rigorous testing. That’s exactly where PFLB shines:

- Completely Impartial: At PFLB, we don’t play favorites. Our testing is transparent, neutral, and never influenced by vendor interests. When we say something performs well, that claim is backed by measured data.

- Robust and Repeatable Testing: We take benchmark testing seriously, using documented methods that anyone can reproduce. Every test is run the same way, so the results are consistent, credible, and dependable.

- Clear, Actionable Insights: Numbers alone don’t tell the full story. Our reports highlight strengths, pinpoint weaknesses, and offer straightforward insights, so clients can quickly see how their technology stacks up.

Scope of Work

To give Chainguard clear, trustworthy answers, PFLB benchmarked Chainguard’s database container images against their counterparts on Docker Hub. We selected databases representing different real-world workloads and usage scenarios:

- PostgreSQL (tested on both ARM and x86 architectures)

- CockroachDB

- MariaDB

- MySQL

- Redis

- Memcached

- ClickHouse

- MongoDB

Testing PostgreSQL on both ARM and x86 showed whether the results hold across different CPU architectures, making them relevant to a wider range of infrastructure setups and use cases.

Our Benchmark Testing Methodology

At PFLB, we pride ourselves on precise, structured, and repeatable testing. For Chainguard, we designed the benchmarks to be both comprehensive and fair:

Testing Environment Setup

We started by deploying a consistent, cloud-based environment on AWS virtual machines. Standardizing the environment meant every Docker container ran under the same conditions and had the same opportunity to show its true performance, with no hidden variables.

Benchmarking Tools We Used

To accurately reflect real-world database use cases, we selected reliable, industry-recognized benchmarking tools tailored to each database type:

- BenchBase: For the SQL databases (PostgreSQL, CockroachDB, MariaDB, MySQL, and ClickHouse), running the widely recognized TPC benchmarks:

  - TPC-C: A transactional (OLTP) workload modeled on order-entry operations, measuring throughput and responsiveness.

  - TPC-H: An analytical (decision-support) workload, evaluating performance on complex queries.

- memtier_benchmark: Used for Redis and Memcached to run read-heavy scenarios that mirror typical caching workloads in production.

Rigorous Test Execution

We ran each scenario four to five times to confirm that the results were consistent and statistically reliable. By varying workloads and scenarios, we covered a wide spectrum of realistic performance demands:

- Transactional workloads (TPC-C): Simulating everyday business operations to evaluate responsiveness under load.

- Analytical workloads (TPC-H): Complex, resource-intensive queries to test sustained analytical performance.

- Read-heavy scenarios (Redis, Memcached): Assessing high-volume read performance typical of caching scenarios.
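Running four to five iterations only pays off if the runs are compared with each other. The Python sketch below shows one common way to do that, assumed here for illustration rather than taken from PFLB’s tooling: report the mean and sample standard deviation per scenario, and flag scenarios whose coefficient of variation is high. The function name, the 5% threshold, and the sample values are all hypothetical.

```python
from statistics import mean, stdev

# Hypothetical threshold: flag a scenario if its runs vary by more than 5%.
CV_THRESHOLD_PCT = 5.0

def summarize_runs(values):
    """Summarize one scenario's repeated runs.

    Returns the mean, the sample standard deviation, and the coefficient of
    variation (standard deviation as a percentage of the mean).
    """
    if len(values) < 2:
        raise ValueError("need at least two runs to estimate spread")
    avg = mean(values)
    sd = stdev(values)  # sample standard deviation (n - 1 in the denominator)
    cv_pct = 100 * sd / avg
    return {
        "runs": len(values),
        "mean": avg,
        "stdev": sd,
        "cv_pct": cv_pct,
        "stable": cv_pct <= CV_THRESHOLD_PCT,
    }

# Hypothetical TPC-C throughput (requests/second) from five runs of one scenario.
runs = [1510.0, 1495.0, 1502.0, 1488.0, 1505.0]
summary = summarize_runs(runs)
print(f"mean={summary['mean']:.1f} req/s, cv={summary['cv_pct']:.2f}%, stable={summary['stable']}")
```

A low coefficient of variation suggests the mean is a fair summary of the scenario; a high one is a signal to investigate noise in the environment before comparing images.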

Comprehensive Performance Monitoring

Beyond headline numbers, we monitored multiple dimensions of system performance, giving Chainguard a clear view of how each container behaved across several critical metrics:

- System-level metrics: CPU usage, RAM consumption, disk I/O performance, network throughput.

- Docker-specific metrics: Per-container CPU and memory utilization, showing how much of the host’s resources each image consumed.

- Latency and throughput measurements: Raw throughput (operations per second), “goodput” (the rate of successfully completed transactions), and detailed latency distributions.
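To make the difference between throughput and goodput concrete, here is a minimal Python sketch that derives both, plus latency percentiles, from a list of per-transaction records. The `Txn` record, the function names, and the sample data are hypothetical; nearest-rank is only one of several percentile conventions, and the benchmarking tools themselves may compute these figures with their own definitions.

```python
import math
from dataclasses import dataclass

@dataclass
class Txn:
    latency_ms: float
    ok: bool  # False for aborted or failed transactions

def percentile(sorted_vals, p):
    """Nearest-rank percentile (p between 0 and 100) of an ascending list."""
    if not sorted_vals:
        raise ValueError("no samples")
    rank = max(1, math.ceil(p * len(sorted_vals) / 100))
    return sorted_vals[rank - 1]

def summarize(txns, duration_s):
    """Throughput counts every attempt; goodput counts only successes."""
    latencies = sorted(t.latency_ms for t in txns)  # latency of all attempts
    return {
        "throughput_per_s": len(txns) / duration_s,
        "goodput_per_s": sum(t.ok for t in txns) / duration_s,
        "p50_ms": percentile(latencies, 50),
        "p95_ms": percentile(latencies, 95),
        "p99_ms": percentile(latencies, 99),
    }

# Hypothetical sample: 10 attempts in a 2-second window, 2 of which failed.
sample = [
    Txn(5.0, True), Txn(3.0, True), Txn(8.0, False), Txn(40.0, True), Txn(4.0, True),
    Txn(6.0, True), Txn(9.0, True), Txn(7.0, False), Txn(10.0, True), Txn(20.0, True),
]
print(summarize(sample, duration_s=2.0))
```

A run with many aborted transactions can post high throughput but much lower goodput, which is why tracking both, along with tail latencies rather than just averages, gives a more honest comparison.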

Reporting & Conclusions

Dedicated Reports

Each database received its own detailed performance report highlighting key metrics. Comparison tables and visualizations helped Chainguard quickly understand the strengths and weaknesses of their containers compared to the corresponding Docker Hub images.

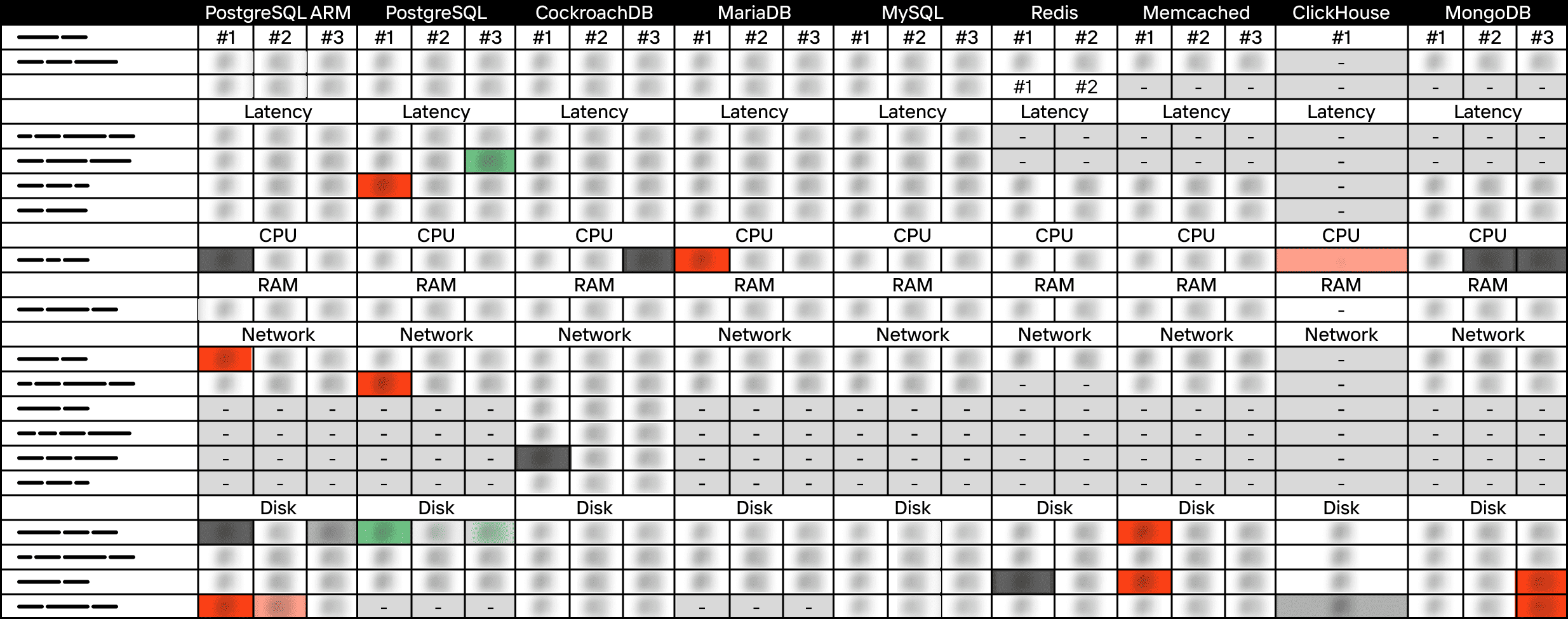

Unified Heatmap

We complemented the per-database reports with a unified heatmap that summarized results across all tested databases and scenarios in a single view, making strengths, weaknesses, and opportunities for improvement easy to spot.
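The case study does not say how heatmap cells were scored. One common approach, assumed here purely for illustration, is to color each cell by the percentage difference between the Chainguard image and its Docker Hub counterpart, flipping the sign for lower-is-better metrics such as latency so that positive always means "Chainguard did better". The function name and every number below are hypothetical and are not results from this engagement.

```python
def relative_diff_pct(chainguard, dockerhub, higher_is_better=True):
    """Percent difference of the Chainguard result vs. the Docker Hub baseline,
    signed so that a positive value always means Chainguard performed better."""
    if dockerhub == 0:
        raise ValueError("baseline must be non-zero")
    diff = 100 * (chainguard - dockerhub) / dockerhub
    return diff if higher_is_better else -diff

# Hypothetical inputs: (database, scenario) -> (chainguard, dockerhub, higher_is_better)
results = {
    ("PostgreSQL x86", "TPC-C throughput"): (1530.0, 1500.0, True),
    ("PostgreSQL ARM", "TPC-C p95 latency ms"): (12.0, 12.5, False),
    ("Redis", "GET ops/sec"): (98000.0, 100000.0, True),
}

# Each value becomes one heatmap cell; a plotting library would map it to a color.
heatmap = {key: relative_diff_pct(*vals) for key, vals in results.items()}
for (db, scenario), pct in sorted(heatmap.items()):
    print(f"{db:16} {scenario:22} {pct:+6.1f}%")
```

Normalizing every cell to a signed percentage is what allows throughput and latency metrics from different databases to share one color scale.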

Consistent Methodology

Because every scenario used the same methodology, results were directly comparable across databases. Chainguard could confidently compare them and pinpoint areas requiring further optimization or resource allocation.

Client Benefits & the PFLB Advantage

Chainguard received clear, unbiased benchmarking data to confidently inform their product decisions and optimizations. With our rigorous testing and transparent reporting, they gained reliable proof points to support their performance claims.

By partnering with PFLB, Chainguard demonstrated their commitment to credible, neutral validation. Our detailed, actionable insights empowered their teams to optimize effectively, without any vendor bias or promotional influence.