Performance testing is the backbone of delivering seamless software experiences. With a widely cited Google study reporting that 53% of mobile site visits are abandoned when a page takes longer than three seconds to load, ensuring your system can handle real-world demands is non-negotiable.

This guide dives into the fundamentals of performance testing, explores its critical types and metrics, and reveals how it uncovers bottlenecks before they impact users.

Whether you’re a developer, QA specialist, or business leader, you’ll learn actionable strategies to validate scalability, optimize resources, and build customer trust.

Let’s begin.

What Is Performance Testing?

Performance testing is a method for evaluating how a software system performs under specific workloads, network conditions, and usage patterns. The main objective is to identify bottlenecks and confirm that the application meets predetermined performance objectives, such as responsiveness and stability.

Performance tests measure crucial factors like response times, throughput, and resource usage. They help you decide whether your application can handle real-world demands, such as large numbers of simultaneous users or frequent data requests, without slowing down or crashing.
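As a rough illustration of how these factors can be computed from raw test data, the sketch below summarizes a list of recorded response times. The function names (`percentile`, `summarize`) and the sample numbers are assumptions made for this example, not part of any particular tool.

```python
import math
from statistics import mean


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of values at or below it."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(latencies_s, wall_clock_s):
    """Summarize one test run: request count, mean and p95 latency, and throughput."""
    return {
        "requests": len(latencies_s),
        "mean_s": mean(latencies_s),
        "p95_s": percentile(latencies_s, 95),
        "throughput_rps": len(latencies_s) / wall_clock_s,
    }


# Hypothetical run: 19 fast responses and one slow outlier, collected over 10 seconds.
print(summarize([0.1] * 19 + [2.0], wall_clock_s=10.0))
```

A percentile such as p95 is often reported alongside the mean because a single slow outlier can shift the average while most requests stay fast.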

In a broader sense, performance testing means understanding how your software behaves under stress. By pinpointing areas that cause slowdowns or resource drains, organizations can ensure that end users get consistent service—even when the workload spikes. This proactive approach reduces the risk of system failures after deployment and improves overall customer satisfaction.

Purpose:

For example, an e-commerce site uses performance testing to confirm it can handle 10,000 simultaneous users during a Black Friday sale without lagging.

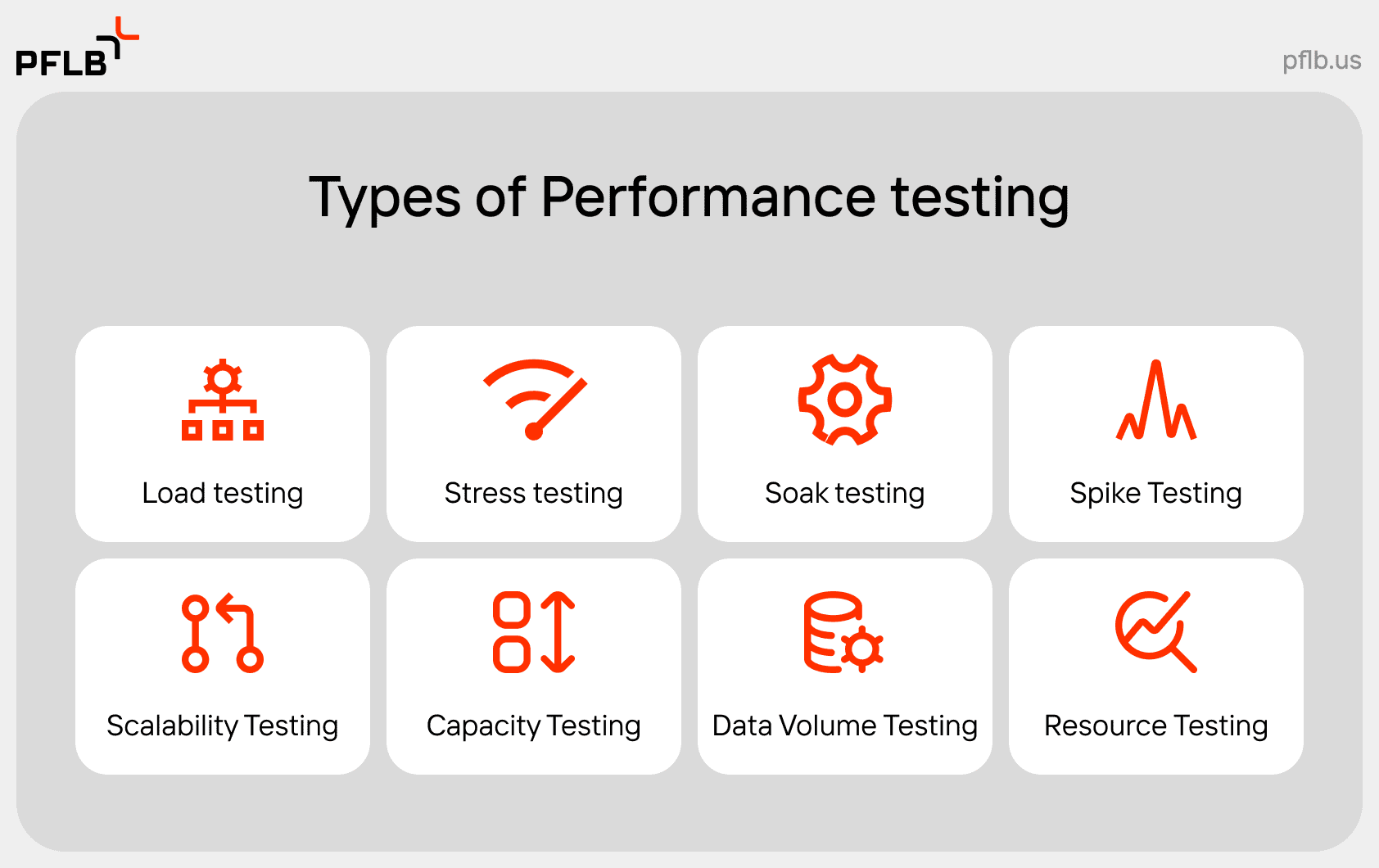

Types of Performance Testing

Performance testing encompasses several approaches, each designed to uncover specific insights about system behavior. Load testing forms a broad category, and its subtypes (stress testing, soak testing, spike testing, data volume testing, and resource testing) each focus on a distinct aspect of how a system handles varying workloads.

Below are the major types of performance tests, broken down into individual categories to explain how they work and what they aim to accomplish.

Load Testing

Load testing checks the system’s performance under expected, regular user load. It involves simulating a typical number of simultaneous requests or users and observing metrics such as throughput, response times, and resource usage.

By performing load testing, development teams can confirm that the software meets performance testing requirements and determine if any problems emerge under everyday usage.

Key points:

For instance, testing a banking app with 5,000 concurrent users to ensure transaction speeds remain under 2 seconds.
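A minimal sketch of the idea, assuming a stand-in function in place of a real banking transaction: each simulated user runs in its own thread, every request is timed, and the slowest response is compared with a 2-second target. `fake_transaction`, `run_load_test`, and `meets_target` are hypothetical names, and the user counts are kept small so the example runs quickly. A real test at thousands of users would normally rely on a dedicated load testing tool.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_transaction():
    """Hypothetical stand-in for one request to the system under test."""
    time.sleep(0.01)


def timed_call(action):
    """Return how long a single call to `action` takes, in seconds."""
    start = time.perf_counter()
    action()
    return time.perf_counter() - start


def run_load_test(action, concurrent_users, requests_per_user):
    """Run `action` from `concurrent_users` threads and collect per-request latencies."""
    def user_session(_user_id):
        return [timed_call(action) for _ in range(requests_per_user)]

    started = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        sessions = list(pool.map(user_session, range(concurrent_users)))
    elapsed = time.perf_counter() - started
    latencies = [latency for session in sessions for latency in session]
    return latencies, elapsed


def meets_target(latencies, max_latency_s):
    """True when every recorded request finished within the target."""
    return max(latencies) <= max_latency_s


latencies, elapsed = run_load_test(fake_transaction, concurrent_users=20, requests_per_user=5)
print(f"{len(latencies)} requests in {elapsed:.2f}s, slowest {max(latencies):.3f}s")
print("within 2 s target:", meets_target(latencies, max_latency_s=2.0))
```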

Load testing, in turn, has several subtypes. The main ones are described below.

Stress Testing

Stress testing pushes the system beyond its normal operational capacity. The goal is to see how the application behaves when user load or data requests reach peak levels, sometimes exceeding planned usage.

By identifying the breakpoints, teams gain knowledge about system stability and can plan for graceful failure or resource allocation during extreme events.

Key points:

A streaming platform might test how many users it can handle before video buffering increases by 300%.
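One way to picture the search for a breakpoint is a stepped ramp: raise the load in fixed increments and stop at the first level where the error rate crosses a threshold. The sketch below uses a made-up system model (`simulated_error_rate`, with an assumed capacity of 800 users) instead of a real application, so the numbers are purely illustrative.

```python
def simulated_error_rate(users, capacity=800):
    """Hypothetical system model: no errors up to `capacity`, then errors grow linearly."""
    if users <= capacity:
        return 0.0
    return min(1.0, (users - capacity) / capacity)


def find_breaking_point(probe, start, step, limit, max_error_rate):
    """Raise load in steps; return the first load whose error rate exceeds the threshold.

    Returns None when the threshold is never exceeded up to `limit`.
    """
    users = start
    while users <= limit:
        if probe(users) > max_error_rate:
            return users
        users += step
    return None


# With the assumed model, errors first exceed 5% at the 900-user step.
print(find_breaking_point(simulated_error_rate, start=100, step=100, limit=2000, max_error_rate=0.05))
```

In a real stress test, `probe` would run an actual load step against the application and return the observed error rate.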

Soak Testing

Soak testing, sometimes called endurance testing, involves running the system at a typical or higher-than-usual load for a prolonged period. This approach can help identify issues like gradual memory leaks, resource exhaustion, or other problems that appear over time.

Key points:

For instance, a streaming service runs a soak test by simulating thousands of viewers over an entire weekend. During this extended test, the team tracks memory usage, CPU load, and error rates. This approach can reveal slow-building issues (like memory leaks) that wouldn’t appear in shorter tests.
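A slow-building leak typically shows up as a steady upward trend in memory samples. As a sketch, assuming memory is sampled once per hour during the soak test, a least-squares slope can flag sustained growth. The function names, the synthetic samples, and the 1 MB per hour threshold are all assumptions for illustration.

```python
def slope_per_hour(samples):
    """Least-squares slope of (hours_elapsed, memory_mb) samples, in MB per hour."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_t = sum(t for t, _ in samples) / n
    mean_m = sum(m for _, m in samples) / n
    covariance = sum((t - mean_t) * (m - mean_m) for t, m in samples)
    variance = sum((t - mean_t) ** 2 for t, _ in samples)
    if variance == 0:
        raise ValueError("samples must cover more than one point in time")
    return covariance / variance


def looks_like_leak(samples, max_growth_mb_per_hour):
    """Flag a run whose memory grows faster than the allowed rate."""
    return slope_per_hour(samples) > max_growth_mb_per_hour


# Synthetic 48-hour runs: one grows 4 MB every hour, the other only fluctuates by 1 MB.
leaking = [(hour, 500 + 4 * hour) for hour in range(48)]
steady = [(hour, 500 + hour % 2) for hour in range(48)]
print(looks_like_leak(leaking, max_growth_mb_per_hour=1.0))
print(looks_like_leak(steady, max_growth_mb_per_hour=1.0))
```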

Spike Testing

Spike testing focuses on the system’s reaction to sudden, dramatic spikes in user activity or data volume. For instance, you might simulate a scenario where a marketing campaign or unexpected event floods the application with new visitors.

Key points:

For example, a news website might test how it responds to a 500% traffic spike during a major event.
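The shape of a spike test can be sketched as a per-second load schedule plus a check of how quickly latency recovers afterwards. In the example below, the multiplier of 5 is one reading of the "500%" figure, and the function names and latency values are hypothetical.

```python
def spike_profile(baseline_rps, spike_multiplier, total_s, spike_start_s, spike_duration_s):
    """Target requests per second for each second of the test, with one rectangular spike."""
    spike_end_s = spike_start_s + spike_duration_s
    return [
        baseline_rps * spike_multiplier if spike_start_s <= second < spike_end_s else baseline_rps
        for second in range(total_s)
    ]


def recovery_seconds(latency_by_second, spike_end_s, healthy_latency_s):
    """Seconds after the spike ends until latency is back at or below the healthy level.

    Returns None if latency never recovers within the recorded window.
    """
    for second in range(spike_end_s, len(latency_by_second)):
        if latency_by_second[second] <= healthy_latency_s:
            return second - spike_end_s
    return None


# A 10-second schedule: 100 requests/s baseline, 5x spike during seconds 3 and 4.
print(spike_profile(100, 5, total_s=10, spike_start_s=3, spike_duration_s=2))

# Made-up latencies (seconds) observed during that schedule.
observed = [0.2, 0.2, 0.2, 1.5, 2.0, 1.2, 0.6, 0.3, 0.2, 0.2]
print(recovery_seconds(observed, spike_end_s=5, healthy_latency_s=0.3))
```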

Scalability Testing

Scalability testing checks whether the system can scale vertically (by adding more power to existing servers) or horizontally (by adding more servers) without performance drops. This method is critical for applications that anticipate growth or seasonal surges.

Key points:

A cloud service provider might test how adding servers affects response times during load spikes.
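One common way to express the result of such a test is scaling efficiency: measured throughput divided by what perfectly linear scaling would deliver. The sketch below assumes throughput has already been measured at several server counts. The numbers and the `scaling_efficiency` name are illustrative only.

```python
def scaling_efficiency(throughput_by_servers):
    """Map server count to efficiency relative to linear scaling from the smallest setup.

    1.0 means throughput grew in proportion to the servers added; lower values mean
    each extra server contributed less than the first ones did.
    """
    base_servers = min(throughput_by_servers)
    per_server_base = throughput_by_servers[base_servers] / base_servers
    return {
        servers: throughput / (servers * per_server_base)
        for servers, throughput in sorted(throughput_by_servers.items())
    }


# Hypothetical measurements: requests per second at 1, 2, and 4 servers.
measured = {1: 1000, 2: 1900, 4: 3200}
for servers, efficiency in scaling_efficiency(measured).items():
    print(f"{servers} server(s): {efficiency:.0%} of linear scaling")
```

A falling efficiency curve usually points to a shared bottleneck, such as a database or a load balancer, that extra application servers cannot relieve.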

Capacity Testing

Capacity testing determines the maximum amount of work a system can handle while still meeting its defined performance benchmarks. Unlike stress testing—which seeks a breaking point—capacity testing aims to define an optimal throughput threshold.

Key points:

A social media app could use this to plan server requirements for viral content scenarios.
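The difference from stress testing can be sketched in code: rather than looking for the point of failure, a capacity test looks for the highest load that still meets the benchmark. The example below runs a binary search against a made-up latency model (`simulated_p95`). It assumes latency never decreases as load grows, and all names and numbers are hypothetical.

```python
def simulated_p95(users):
    """Hypothetical latency model: p95 in seconds grows with the square of the load."""
    return 0.2 + (users / 1000) ** 2


def max_capacity(measure_p95, low, high, target_p95_s):
    """Binary search for the largest load in [low, high] whose p95 stays within target.

    Assumes latency does not decrease as load grows.
    Returns None if even the lowest load misses the target.
    """
    if measure_p95(low) > target_p95_s:
        return None
    while low < high:
        mid = (low + high + 1) // 2
        if measure_p95(mid) <= target_p95_s:
            low = mid  # target still met, so capacity is at least `mid`
        else:
            high = mid - 1  # target missed, so capacity is below `mid`
    return low


# With the assumed model and a 2-second p95 target, the search settles on 1341 users.
print(max_capacity(simulated_p95, low=1, high=5000, target_p95_s=2.0))
```

In practice each `measure_p95` call would be a full test run, so a binary search keeps the number of runs small compared with stepping through every load level.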

Data Volume Testing

Data volume testing zeroes in on how the system manages large sets of information. When databases grow in size—often due to heavy usage over time—queries can slow down, and resource usage can spike. By conducting data volume testing, teams can gauge whether the application remains responsive and stable even when databases expand significantly.

Key points:

For example, a hospital management platform imports massive patient records into its database. Testers then measure how swiftly the system processes queries. If response times spike under heavy data, indexing or storage optimization might be necessary.
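To illustrate why indexing matters as data grows, the sketch below loads synthetic rows into an in-memory SQLite database and compares the query plan before and after adding an index. The table layout, the `idx_patients_last_name` index name, and the row count are assumptions for this example. A production system would run on its own database engine with far larger volumes.

```python
import sqlite3

QUERY = "SELECT id, notes FROM patients WHERE last_name = ?"


def build_database(row_count):
    """Create an in-memory table filled with synthetic patient rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE patients (id INTEGER PRIMARY KEY, last_name TEXT, notes TEXT)")
    conn.executemany(
        "INSERT INTO patients (last_name, notes) VALUES (?, ?)",
        ((f"name{i % 1000}", "synthetic record") for i in range(row_count)),
    )
    conn.commit()
    return conn


def query_plan(conn, sql, params=()):
    """Return SQLite's description of how it would execute `sql`."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[-1] for row in rows)


conn = build_database(5000)
print("before index:", query_plan(conn, QUERY, ("name7",)))

conn.execute("CREATE INDEX idx_patients_last_name ON patients (last_name)")
print("after index: ", query_plan(conn, QUERY, ("name7",)))
```

Before the index exists, the plan reports a scan of the whole table. Afterwards it reports a search that uses the new index, which is the kind of change that keeps query times stable as the dataset grows.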

Resource Testing

Resource testing inspects how well the software uses specific resources like CPU, memory, and disk I/O under varying workloads. It zooms in on whether these assets can handle peak usage without causing bottlenecks or crashes.

Key points:

For instance, an e-commerce site simulates heavy traffic while tracking CPU, memory, and disk usage. If memory spikes too high or the CPU becomes overloaded, developers know to optimize resources before the real holiday rush.
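As a small-scale sketch of the same idea, Python's standard library can record CPU time and peak memory for a single workload. `measure_resources` and `sample_workload` are hypothetical names. `tracemalloc` only sees memory allocated by Python itself, so a real resource test would also collect operating-system level CPU, memory, and disk metrics.

```python
import time
import tracemalloc


def measure_resources(workload):
    """Run `workload` once and report CPU time, wall time, and peak Python memory."""
    tracemalloc.start()
    cpu_start = time.process_time()
    wall_start = time.perf_counter()
    try:
        result = workload()
        cpu_s = time.process_time() - cpu_start
        wall_s = time.perf_counter() - wall_start
        _, peak_bytes = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return {
        "result": result,
        "cpu_s": cpu_s,
        "wall_s": wall_s,
        "peak_python_mb": peak_bytes / (1024 * 1024),
    }


def sample_workload():
    """Hypothetical workload: build a large list and sum the squares of its items."""
    values = list(range(500_000))
    return sum(value * value for value in values)


stats = measure_resources(sample_workload)
print(f"cpu {stats['cpu_s']:.3f}s, wall {stats['wall_s']:.3f}s, peak {stats['peak_python_mb']:.1f} MB")
```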

| Performance Test | Main Focus | Key Metrics | When to Use | Example Use Case |
| --- | --- | --- | --- | --- |
| Load Testing | Normal user load and baseline performance | Response times, Throughput, Resource usage | Early in development or before major releases | Testing a banking app with 5,000 concurrent users |
| Stress Testing | Behavior under extreme conditions | Peak response times, Error rates, CPU/memory | Preparing for peak events or traffic surges | Streaming site tested at its concurrency breaking point |
| Soak Testing | Long-duration stability and resource leaks | Memory usage over time, Error rates, CPU load | Ensuring stability over extended periods | Running a healthcare platform for 72 hours to detect memory leaks |
| Spike Testing | Reaction to abrupt traffic surges | Spike response times, Recovery speed | Preparing for viral/marketing-driven traffic | News site handling a 500% traffic spike during a major event |
| Scalability Testing | Growth without performance loss | Throughput increase, Latency under scaling | Planning infrastructure expansions | Cloud service scaling servers during holiday sales |
| Capacity Testing | Maximum throughput within performance goals | Max transactions/sec, Utilization thresholds | Determining operational limits | Social media app testing user limits for viral content |
| Data Volume Testing | Performance with large datasets | Query speed, Database I/O, Memory | Anticipating production data growth | Testing query speeds in a system with 10M+ patient records |
| Resource Testing | CPU, memory, and disk usage under load | CPU/memory load, Disk I/O, Interrupts/sec | Optimizing resource allocation | Simulating peak loads to check for memory spikes in a gaming app |

Performance Testing Metrics

When organizations conduct performance tests, they track several metrics that highlight system health and responsiveness. You can explore the most important ones in our detailed guide on Key Performance Test Metrics.

Why Use Performance Testing?

Organizations from startups to global enterprises rely on performance testing to confirm their software delivers consistent service—even when usage spikes or systems come under stress. Here are some notable advantages:

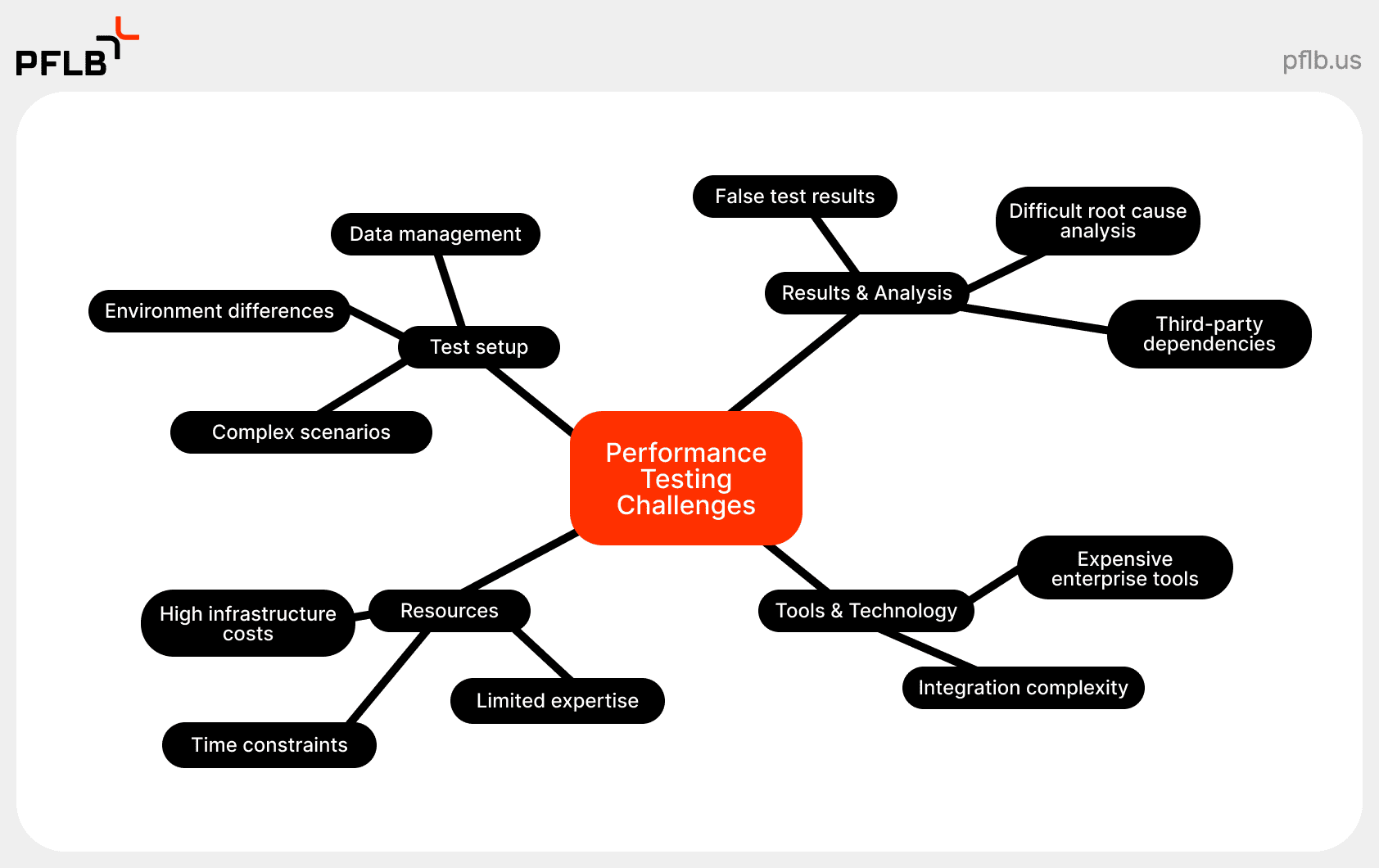

Performance Testing Challenges

Although performance testing is vital, teams often encounter obstacles that make the process more complex than anticipated. Some of the most common include:

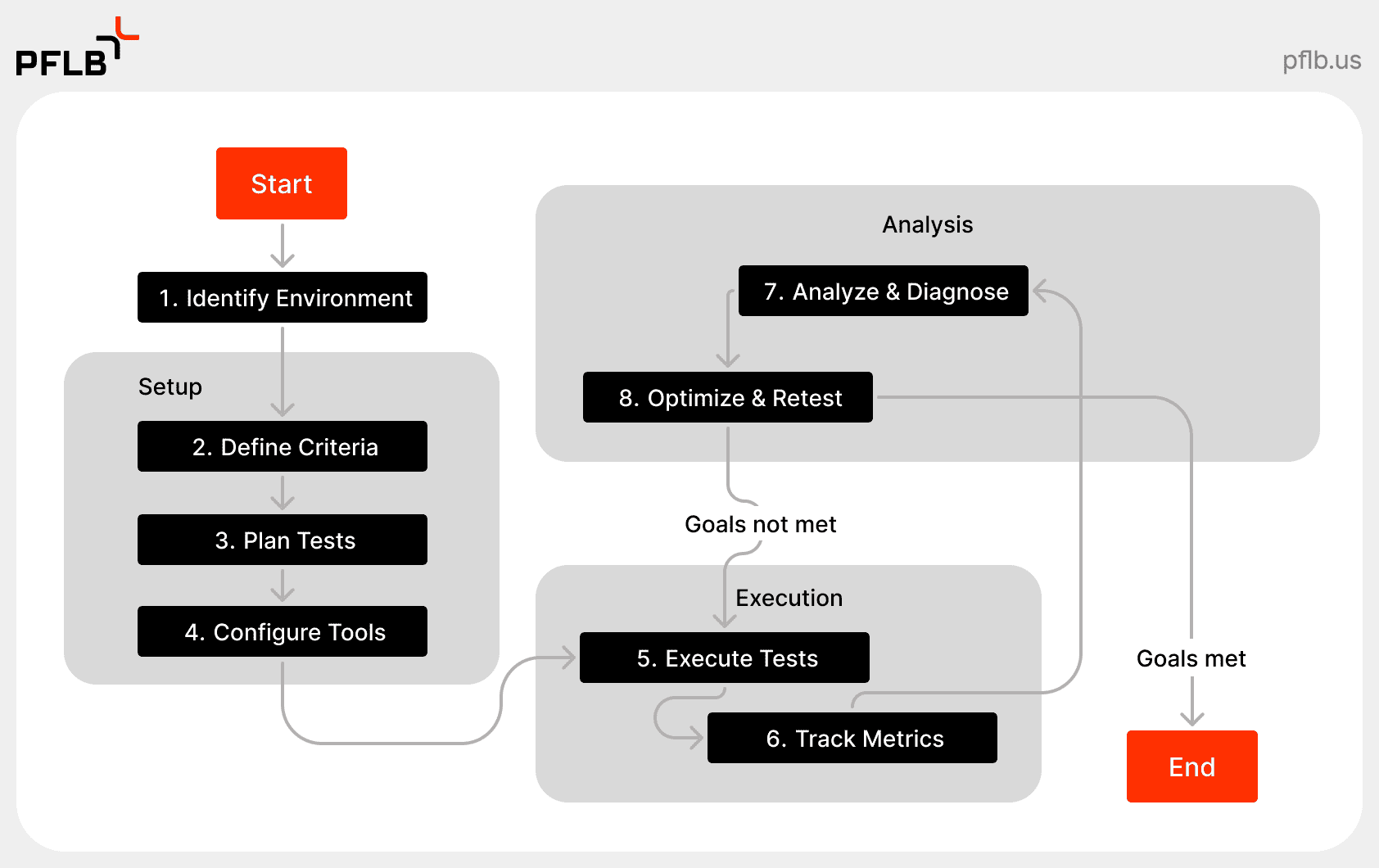

How to Conduct Performance Testing

The steps below offer a clear roadmap for your performance testing process. Follow them in order, but remain adaptable, as project requirements may shift.

By following this sequence, you establish a disciplined performance testing process that clarifies the system’s limits, stability, and growth potential.

Top Performance Testing Tools

How PFLB Can Help with Performance Testing

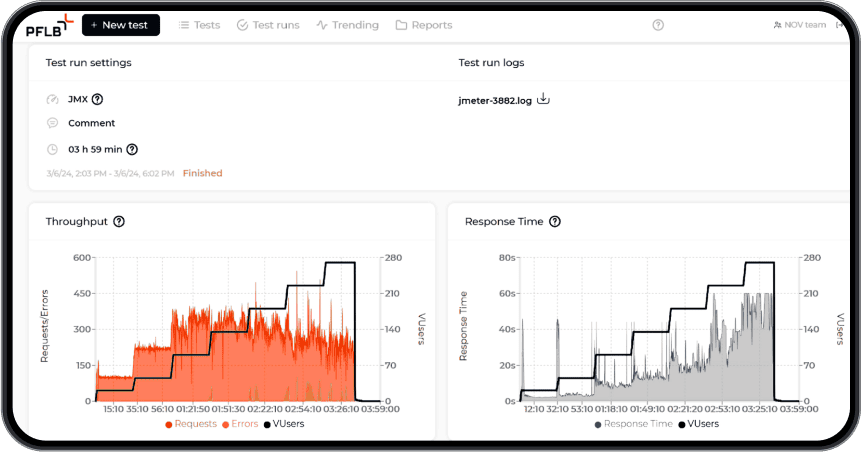

PFLB is a leading provider of performance testing services, delivering solutions that ensure reliability, scalability, and strong user experiences. Our team has spent years refining methods and tools for performance tests, making us adept at solving challenging demands in various industries.

✅ Cloud-Native Testing at Scale

PFLB leverages cloud infrastructure to simulate 1M+ virtual users globally. Test from AWS, Azure, or Google Cloud regions to mirror real-world traffic patterns—without upfront hardware costs.

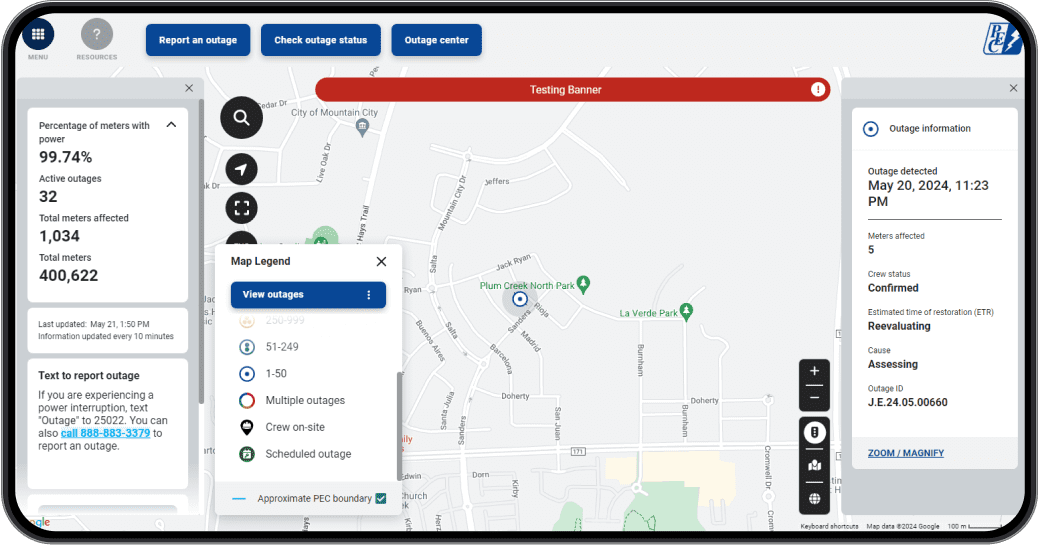

✅ Real-Time Monitoring

Our platform offers live dashboards tracking 50+ metrics (response times, error rates, CPU spikes). AI pinpoints bottlenecks (e.g., slow SQL queries) in minutes, not days.

✅ Compliance-Ready Reporting

Generate audit-ready reports for industries like healthcare (HIPAA) or finance (PCI DSS). Prove compliance with granular performance logs.

✅ Expert-Led Strategy

Our certified engineers design tests tailored to your goals—whether it’s Black Friday readiness or IoT scalability. No more “copy-paste” test scripts.

Our Case Study

Check out our full portfolio and success stories to see how we’ve delivered tangible results for clients across multiple sectors. We address resource shortfalls, tune software configurations, and help refine your performance testing process so that everything runs smoothly.

Conclusion

Performance testing is more than a technical procedure; it’s a strategic investment in your software’s future. By evaluating how your application handles typical and extreme workloads, you avoid launching unprepared solutions into production. Tests that measure response times, resource usage, and system behavior under peak conditions can reveal hidden problems and ensure user satisfaction.

Whether you are exploring types of performance testing for the first time or optimizing a well-known system, understanding why performance testing matters helps you create a robust plan that supports both current operations and future growth.

When you’re ready to elevate your performance testing to the next level, consider partnering with PFLB. Our specialized services can help you craft a sustainable, resilient application that meets the evolving demands of your business.