Your core applications carry the real weight of your business. They run the order flows, power partner integrations, handle accounts, and keep internal workflows moving. When these systems slow down under pressure, everything that depends on them starts to shake.

So, when a big release, a migration, or a scaling project comes up, every team hits the same crossroads: should we build application load testing in-house, or should we bring in specialists who can handle it with confidence?

Let’s take a closer look at both paths and see how each one affects cost, tooling, expertise, and delivery speed. By the end, you’ll know which direction gives your team the strongest footing.

What Do We Mean by “Application Load Testing”?

When people hear “load testing,” they often think about web pages or landing screens. But in most modern companies, the real action happens behind the scenes. Application load testing focuses on the backend: the APIs, microservices, and internal systems that quietly power everything your business relies on.

These are the components that process orders, handle account updates, run billing rules, move messages through queues, or support partner integrations. If any of them slows down under pressure, the impact ripples outward. Transactions take longer, workflows stall, and dependent services start to fail in ways that are hard to predict.

That’s why application load testing looks at how your core systems behave when they’re under real stress. It checks how much throughput they can handle, whether latency stays within SLA expectations, and how stable the architecture remains when multiple services and data stores are working at the same time. It helps teams spot weak points that don’t show up during day-to-day usage but surface immediately once traffic or internal load increases.
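To make those checks concrete, here is a minimal sketch of how a finished test run might be summarized. The function names, the 95th-percentile target, and the sample numbers are illustrative assumptions, not part of any specific tool.

```python
import math

def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of latency samples."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]

def summarize_run(latencies_ms, duration_s, sla_p95_ms):
    """Report throughput and whether p95 latency stays within the SLA target."""
    p95 = percentile(latencies_ms, 95)
    return {
        "throughput_rps": len(latencies_ms) / duration_s,
        "p95_ms": p95,
        "within_sla": p95 <= sla_p95_ms,
    }

# Hypothetical run: 100 requests completed in 10 seconds, SLA target of 100 ms at p95.
summary = summarize_run(list(range(1, 101)), duration_s=10, sla_p95_ms=100)
print(summary)
```

In practice the latency samples would come from your load tool's results file, and the SLA target from your own contracts or internal objectives.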

Think of it as testing the engine, not the dashboard. The website might be the doorway users see, but the backend decides whether your business actually keeps moving.

Pros of In-House Application Load Testing

Handling performance testing internally comes with several natural advantages, especially for teams that already live and breathe the product. Before looking at outsourcing, it’s worth understanding the strengths you automatically gain when your own engineers run these tests.

Deep Knowledge of Your Business Logic and Edge Cases

Internal teams understand your application in ways an outside provider never will. They know the quirks of your workflows, the transactions that matter most, the integrations that tend to misbehave, and the incidents that have caused trouble in the past. This knowledge helps them create load scenarios that reflect real usage instead of generic patterns. They can model unusual edge cases, stress specific business flows, and focus on the areas that drive the most value or carry the most risk for your organization.

Tight Integration With CI/CD and Engineering Workflows

An in-house team can embed performance testing directly into your build and release process. They can run smaller load checks on every major backend change, schedule nightly performance suites, and connect results to your existing observability stack. When something slows down, the same engineers who built the system can investigate it immediately. This creates a continuous feedback loop where performance stays visible throughout development, not only during big pre-release events.
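One way such a pipeline check could look is a simple regression gate that compares the latest run against a stored baseline. This is a sketch under assumptions: the endpoint names, the 10% tolerance, and the idea of keeping baselines as a plain mapping are all hypothetical choices.

```python
def regression_gate(baseline_ms, current_ms, tolerance=0.10):
    """Return endpoints whose latency grew more than `tolerance` over the baseline."""
    failures = {}
    for endpoint, base in baseline_ms.items():
        current = current_ms.get(endpoint)
        if current is None:
            continue  # endpoint not exercised in this run; nothing to compare
        if current > base * (1 + tolerance):
            failures[endpoint] = (base, current)
    return failures

# Hypothetical p95 latencies (ms) from the last good build and the current build.
baseline = {"/orders": 100, "/billing": 200}
current = {"/orders": 125, "/billing": 205}

failures = regression_gate(baseline, current)
print(failures)
```

A CI job could fail the build whenever the returned mapping is non-empty, which keeps small regressions visible long before a large pre-release test.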

Long-Term Ownership of Performance Knowledge

Over time, an internal team builds a deep understanding of how your system behaves under different conditions. They accumulate insights on historical bottlenecks, past scaling issues, and recurring weak spots. This knowledge becomes useful during planning sessions, architecture discussions, and capacity forecasting. Instead of relying on outside experts to rediscover old problems, your team knows exactly what to watch for as the application evolves.

Cons of In-House Application Load Testing

Managing load testing internally has real advantages, but it also comes with challenges that can slow teams down or stretch resources thin. These limitations tend to show up as systems grow more complex or as deadlines get tighter.

Building True Application Performance Expertise Is Expensive

Hiring people who truly understand application-level performance is harder than it sounds. These engineers need to be comfortable with distributed systems, databases, queues, caching, cloud architecture, and the load-testing tools themselves. It’s a rare combination of skills, and salaries reflect that.

For many companies, performance work isn’t constant enough to justify a full team, so the cost of recruiting, training, and keeping experienced specialists becomes a long-term burden. Internal teams often end up stretched thin, and critical tests get delayed simply because there aren’t enough hands with the right knowledge.

Complex Tooling and Environment Overhead

Enterprise application load testing requires an entire ecosystem to function correctly. You need test data that resembles production, environments that mimic real integrations, and coordination with teams across security, DevOps, SRE, and databases.

Maintaining this setup takes time and attention. Even small configuration gaps between staging and production can lead to misleading test results. For organizations with limited environment support or fragmented systems, building and maintaining realistic performance environments becomes its own major project.

Steep Learning Curve for Realistic Load Models

The biggest challenge internal teams face is building the right scenarios. Real application traffic is complex: one business action can trigger dozens of internal calls, background jobs, retries, and cross-service workflows.

Teams that are new to performance testing often start with simplified scripts that don’t reflect true user behavior. Everything looks stable in a test run, but once real users arrive, the system struggles because the scenarios never pushed the right layers. Without experience modeling concurrency patterns, failure conditions, and end-to-end flows, it’s easy to develop a false sense of confidence before a major release.
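A quick back-of-the-envelope calculation shows why simplified scripts miss the mark. The sketch below estimates how many internal calls per second one business action generates. The service names, fan-out counts, and retry rate are made-up illustrations.

```python
def internal_call_rate(actions_per_s, calls_per_action, retry_rate=0.0):
    """Estimate calls/s per service for one business action.

    calls_per_action maps a service name to the number of calls a single
    action makes to it. retry_rate is the assumed fraction of calls that
    are retried once.
    """
    return {
        service: actions_per_s * calls * (1 + retry_rate)
        for service, calls in calls_per_action.items()
    }

# Hypothetical fan-out of a single "place order" action.
fan_out = {"inventory": 3, "payments": 1, "notifications": 2}

rates = internal_call_rate(actions_per_s=50, calls_per_action=fan_out, retry_rate=0.5)
print(rates)
```

A script that only hits the order endpoint at 50 requests per second would look fine on paper while the inventory service quietly absorbs several times that load.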

In-House Application Load Testing: Pros & Cons

| Pros | Cons |
|---|---|
| Deep knowledge of business logic | Expensive to build and maintain expertise |
| Full control over test design and priorities | High environment and tooling overhead |
| Seamless CI/CD and engineering integration | Steep learning curve for realistic scenarios |
| Immediate access to code and observability | Limited bandwidth during large or urgent tests |
| Long-term internal performance knowledge | Not cost-effective for infrequent large tests |

Outsourced Application Load Testing: How It Works

The shift toward outsourcing performance testing is no longer a trend but a market reality: the global outsourced software testing market reached about $51.6 billion in 2025 and is projected to triple by 2034, showing how widely companies now rely on external testing expertise.

When teams bring in an external provider for application load testing, the goal is simple: let specialists handle the heavy lifting so your engineers can focus on building and improving the product. Instead of learning tools, creating data sets, or assembling complex test environments, the provider manages the full process from start to finish.

Most outsourced engagements follow a predictable structure:

Discovery and risk mapping: The provider works with your team to understand critical transactions, architectural risks, dependencies, and past incidents. This step shapes all test scenarios.

Workload and scenario modeling: They translate real business flows into accurate load models: API calls, message queue traffic, multi-step transactions, batch operations, partner integrations, and more.

Script development and environment preparation: Specialists build scripts for REST, gRPC, GraphQL, MQ protocols, and any internal API formats your system uses. They coordinate test data, user profiles, and endpoint access with your team.

Running the tests: Large-scale load, stress, and spike tests are executed using distributed load generators. These can run across regions or clouds if needed, something most companies don’t maintain in-house.

Deep analysis and reporting: After the tests, the provider breaks down performance behavior: latency patterns, bottlenecks, slow database queries, cache misses, queue delays, thread pool saturation, memory pressure, and resilience issues.

Collaboration on improvements: Most teams use the results to prioritize fixes, redesign flows, tune configurations, or plan capacity increases. Providers often stay involved for follow-up tests until the system reaches the expected level of stability.
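As an illustration of the workload-modeling step, a common sizing rule is Little's Law for closed systems: the number of concurrent virtual users equals target throughput multiplied by the sum of response time and think time. The sketch below applies it to two scenarios. The scenario names and all numbers are hypothetical.

```python
import math

def virtual_users(target_rps, response_time_s, think_time_s):
    """Little's Law for closed systems: N = X * (R + Z), rounded up."""
    return math.ceil(target_rps * (response_time_s + think_time_s))

# Hypothetical scenarios derived from business flows.
scenarios = {
    "place_order": {"target_rps": 200, "response_time_s": 0.5, "think_time_s": 4.5},
    "update_account": {"target_rps": 40, "response_time_s": 0.25, "think_time_s": 1.75},
}

plan = {name: virtual_users(**params) for name, params in scenarios.items()}
print(plan)
```

The resulting user counts are only a starting point. Real engagements adjust them once measured response times under load replace the initial guesses.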

What makes outsourced testing different is the level of experience external engineers bring. They’ve seen many architectures across industries, so they know what “normal” looks like, and what’s a serious red flag. This outside perspective helps teams catch issues they may have never tested for internally.

Pros of Outsourcing Application Load Testing

When deadlines are tight and systems are complex, bringing in a dedicated performance team can make a major difference. Outsourced application load testing gives you access to people who’ve already seen similar systems under pressure and know exactly what to test, how to test it, and what red flags to watch for.

Immediate Access to Senior Performance Engineers

When you outsource application load testing, you’re not starting from scratch. You’re tapping into a team that’s done this dozens, maybe hundreds of times. These aren’t generalists learning on the job. They’ve tested banking cores, logistics systems, real-time APIs, high-traffic SaaS platforms, and more.

This experience is also reinforced by tooling maturity. By 2025, around 68% of outsourced testing engagements rely on automated frameworks, allowing providers to deliver faster, more consistent, and more scalable load validation than most internal teams can achieve on their own.

Faster Ramp-Up for High-Stakes Releases and Migrations

Big changes don’t wait for your team to master new tools or build load scripts from scratch. When you’re launching a new module, moving to microservices, or preparing for a traffic surge, time is short and the pressure’s high.

An external team can get to work immediately, defining real-world traffic patterns, building out tests, and running multiple scenarios while your engineers stay focused on core tasks. By the time you hit your release window, you’ve already tested the system under load, fixed weak spots, and avoided the “hope for the best” approach that many teams fall into.

No Need to Maintain Heavy Load Infrastructure

Application-level load tests aren’t light. They often need distributed generators, support for multiple protocols (HTTP, gRPC, message queues), and coordination across environments.

Keeping all that running in-house is a lot, especially if you only need it a few times a year. With outsourcing, the infrastructure is already in place. You don’t have to build it, monitor it, or pay to keep it warm between projects. It’s ready when you need it, and invisible when you don’t.

Independent, Unbiased View of Your Application Risks

When you’ve worked on a system for months or years, it’s easy to make assumptions. You know how it should behave. You trust certain flows. You might skip edge cases that “never happen.”

An external team doesn’t have those blind spots. They’re more likely to push the system in uncomfortable ways: simulating degraded dependencies, testing failure recovery, running noisy neighbors alongside your service.

And because they’re on the outside, their reports tend to be more business-ready. They connect the dots between a slow transaction and its impact on SLAs, customer experience, or revenue, so the conversation with stakeholders is actionable as well as technical.

Cons of Outsourcing Application Load Testing

Outsourcing isn’t a silver bullet. While it solves a lot of problems, it also introduces new challenges, especially if your team isn’t prepared to collaborate closely. From scoping the right test cases to following up on results, your engineers still need to be actively involved. For fast-moving teams shipping frequent updates, outsourcing every performance test just doesn’t scale.

Requires Strong Knowledge Transfer and Clear Scope

To run meaningful application-level load tests, an external team needs real insight into how your business works. That includes which transactions matter most, which integrations are fragile, and what “failure” actually looks like in your context.

Getting them up to speed takes time. If the scope isn’t clearly defined or the handoff is too shallow, you risk getting tests that miss edge cases or overlook critical paths. Good providers will ask smart questions, but your team still needs to bring the details.

Can Be Less Suitable for Very Frequent, Small Changes

Outsourcing shines during big events: major releases, architecture shifts, cloud migrations. But if you’re pushing changes every few days, especially in a microservices setup, it’s harder to rely on an external team for every tweak.

In these cases, it’s often more efficient to build lightweight, in-house load tests into your CI/CD pipeline. External help can support the big-picture planning, but for day-to-day development, you need internal coverage that moves as fast as your builds.

Internal Teams Still Need to Own Fixes

Outsourcing gives you a clear view of where the system struggles, but fixing those issues still lands on your engineering team. Whether it’s a blocking DB call, poor caching behavior, or thread pool saturation, the actual code and infrastructure changes stay in-house.

This means your team needs at least a baseline understanding of performance bottlenecks and how to resolve them. Without that, even the best test report ends up sitting on a shelf. External engineers can guide, but they can’t patch your codebase.

Outsourced Application Load Testing: Pros & Cons

| Pros | Cons |
|---|---|
| Quick access to senior performance experts | Needs time for proper knowledge transfer |
| Fast ramp-up for big releases or migrations | Not ideal for frequent small changes |
| No internal infra or tool maintenance | Internal team still owns the fixes |
| Ready-to-use advanced tools and test setups | May miss edge cases without full context |
| Fresh, unbiased view of system risks | Harder to embed in daily dev cycles |

Cost and Risk Comparison for Application Load Testing

There’s no one-size-fits-all answer when it comes to the cost of application load testing. It depends on how often you test, how complex your system is, and what kind of performance experience your team already has.

Here’s how the two approaches compare on cost:

In-House Costs Can Add Up Fast

Running performance tests internally requires people, tools, and infrastructure.

- Hiring or training engineers with deep knowledge of APIs, queues, databases, and performance tooling

- Maintaining dedicated, realistic staging environments that mirror production

- Managing test data, monitoring, and analysis pipelines internally

- Paying for tooling licenses or cloud usage to run high-load scenarios

- Time investment: onboarding, test development, environment setup, etc.

Outsourcing Focuses Spending Where It Matters

Outsourced testing is typically project-based or done on a flexible retainer. You pay for a full-service engagement, not just tooling or headcount.

- Includes infrastructure, test scripts, tools, and senior-level expertise

- Costs are limited to when you actually need testing (e.g. before a major release or migration)

- Faster start time compared to building from scratch

- No ongoing maintenance or infrastructure to worry about

So Which One Costs Less?

If your team runs performance tests regularly and has a strong engineering culture, in-house can pay off in the long run, especially if you integrate it into CI/CD and own performance as a discipline.

But if performance testing happens once a quarter (or once a year), outsourcing is usually more cost-effective. You avoid building tools and infra from scratch, and you’re only paying for what you need, when you need it.
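To reason about where that tipping point sits for your own team, a rough break-even calculation can help. The sketch below is a simplified model with invented figures: it assumes in-house testing has a fixed yearly cost plus a small cost per test, while outsourcing is priced per engagement.

```python
def annual_cost_in_house(fixed_per_year, cost_per_test, tests_per_year):
    """Fixed yearly cost (people, environments, licenses) plus per-test cost."""
    return fixed_per_year + cost_per_test * tests_per_year

def annual_cost_outsourced(price_per_engagement, tests_per_year):
    """Outsourced cost modeled as a flat price per engagement."""
    return price_per_engagement * tests_per_year

def break_even_tests(fixed_per_year, cost_per_test, price_per_engagement):
    """Tests per year at which both models cost the same; None if in-house never catches up."""
    saving_per_test = price_per_engagement - cost_per_test
    if saving_per_test <= 0:
        return None
    return fixed_per_year / saving_per_test

# Hypothetical figures, for illustration only.
tests = break_even_tests(fixed_per_year=200_000, cost_per_test=5_000, price_per_engagement=30_000)
print(tests)
```

With these invented numbers, in-house only becomes cheaper beyond eight large tests a year. Plug in your own salaries, infrastructure costs, and vendor quotes before drawing conclusions.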

In-House vs. Outsourced Load Testing

| Aspect | In-House | Outsourced |
|---|---|---|
| Expertise | Built over time | Ready from day one |
| Setup Speed | Slower ramp-up | Fast to launch |
| Tools & Infra | You build and manage | Provided by vendor |
| Cost | Ongoing internal costs | Pay per project |
| Team Bandwidth | Often limited | Scales on demand |
| System Knowledge | Deep understanding | Needs onboarding |
| Risk Coverage | May miss blind spots | Brings fresh perspective |
| Best for | Frequent updates | Big releases or migrations |

How to Decide: In-House, Outsourced, or Hybrid?

Here’s a breakdown of key factors to help you decide which approach fits your situation best.

1. Application Criticality

If your application powers essential business operations, like financial transactions, patient records, logistics, or order processing, the margin for error is small. Performance issues here can directly impact revenue, compliance, or even customer safety.

In this case, outsourcing or hybrid testing is often the safer route.

Specialist providers bring proven methods, reusable scripts, and risk-mapping expertise that help ensure your core systems perform under pressure. Internal teams can then take over lighter testing later, once the foundational work is done.

2. Architecture Complexity

Modern applications rarely run as single, monolithic units. Instead, they’re made up of microservices, message queues, APIs, caching layers, distributed databases, and often operate across regions or cloud environments.

If your architecture looks like this, outsourced teams may offer a big advantage.

They’ve worked across hundreds of similar systems and can spot patterns, edge cases, and failure points your team might miss. A hybrid model also works well here: external experts handle initial test design and implementation, and internal teams maintain and evolve them going forward.

3. Internal Expertise

Does your team include someone who has led full-scale application load tests before? Not just web tests or frontend performance checks, but full backend load simulation, scripting for internal APIs, interpreting memory leaks, latency spikes, and bottlenecks?

If yes, going in-house makes sense.

Your team knows the workflows, the system behavior, and how to embed testing into CI/CD. If not, consider outsourcing the first few rounds or hiring support to avoid false positives, missed signals, and wasted test cycles.

4. Frequency of Major Performance Events

Think about how often your system goes through significant change.

- If you’re deploying every few days, a full outsourcing model can quickly become inefficient.

- If your performance risks come from quarterly releases, infrastructure changes, or platform migrations, outsourced testing can be a great fit.

Use internal processes for small, frequent updates. Bring in external teams for high-stakes events.

5. SLA or Regulatory Pressure

In some industries, uptime and latency are contractual obligations. Financial services, healthcare, insurance, and government platforms often operate under strict SLAs or compliance frameworks.

This is especially visible in regulated industries, where up to 67% of financial-services companies now rely on outsourced testing providers to validate performance, stability, and compliance before major releases.

In these cases, having third-party performance validation can be important.

It adds objectivity, builds trust with stakeholders, and can help during audits or capacity planning sessions.

6. Time-to-Market Pressure

If your team is racing toward a release deadline or in the middle of a migration, building load-testing infrastructure from scratch might not be realistic.

Outsourcing is often the only viable option here.

You can skip the tooling setup, data generation, and environment prep, and go straight into performance validation, all while your team focuses on finishing features and fixing bugs.

This pressure is only increasing: by 2027, nearly 80% of enterprises will operate under DevOps-driven release cycles, which will push teams toward outsourcing performance testing simply to keep pace without slowing delivery.

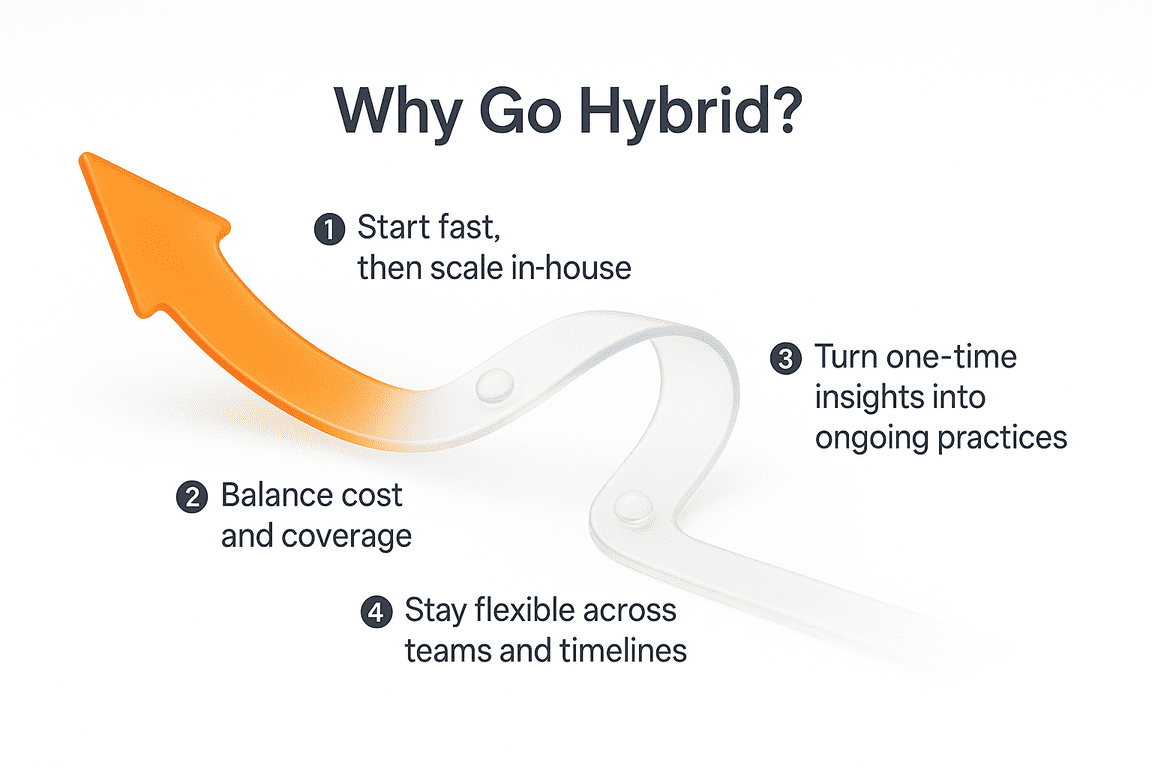

Hybrid Model: The Best of Both Worlds for Application Load Testing

For many teams, the choice between outsourcing and in-house load testing doesn’t have to be all or nothing. A hybrid model can offer the speed and expertise of external providers, combined with the long-term efficiency and control of an internal setup.

Why Go Hybrid?

- Start fast, then scale in-house: External experts help you get initial tests running quickly, especially for high-risk releases or unfamiliar architectures. Once the foundation is in place, your team can take over and build on what’s been learned.

- Balance cost and coverage: Use outsourced testing for critical or complex flows, like payment processing, partner APIs, or batch processing, while handling simpler or repeatable tests internally. This keeps budgets in check without sacrificing depth.

- Turn one-time insights into ongoing practices: A good provider will show you how to structure tests, model real usage, and tune for performance. These lessons make it easier to train your team and improve your internal process going forward.

- Stay flexible across teams and timelines: If your development cycles vary by product line or business unit, a hybrid model lets you assign resources accordingly. Fast-moving teams can test in CI/CD. Others can tap into vendor expertise when needed.

What It Looks Like in Practice

- You outsource a major performance test before a public launch or migration.

- The vendor builds and executes the scenarios, shares detailed reports, and walks your team through key findings.

- Your team takes that knowledge, adapts it into lighter CI/CD checks, and maintains ongoing performance visibility between big events.

- For the next major release, you already have a starting point, plus the option to bring the external team back if needed.

The hybrid model works because it’s practical. It doesn’t require building everything from scratch or depending fully on outsiders. It’s built for teams who want to move fast, test smarter, and grow internal capabilities over time.

How PFLB Fits Into Application Load Testing Strategies

If your backend powers orders, customer data, transactions, or partner integrations, performance is critical. That’s exactly where PFLB can help.

The PFLB team specializes in application-level load testing, helping engineering teams validate and improve the systems behind the UI: APIs, microservices, queues, batch processors, and complex internal flows that keep your business running.

Why Teams Use PFLB for Application Load Testing

Full Backend Coverage

PFLB supports realistic testing of REST APIs, gRPC, message queues, GraphQL endpoints, and complex multi-step flows, helping teams simulate real user and system behavior across the stack.

Designed for High-Stakes Environments

PFLB is trusted by companies in industries where performance isn’t optional (finance, healthcare, logistics, telecom) and where delays impact SLAs, compliance, and customer trust.

Flexible, Hybrid-Ready Engagements

Some teams use PFLB during high-pressure events like releases or migrations. Others keep the team involved long-term while gradually building internal capabilities.

Compatible with Leading Tools

PFLB works with a wide range of performance tools, including JMeter, Gatling, LoadRunner, and k6, ensuring compatibility with existing architectures and workflows.

Clear, Business-Focused Reporting

Test results go beyond technical metrics. Reports highlight which transactions are affected, how performance ties into SLAs, and what’s at risk if bottlenecks aren’t addressed.

Final Thoughts

For any business that depends on complex digital systems, load testing plays a key role in keeping things stable and reliable, especially when pressure builds up.

But the question isn’t simply whether to build everything in-house or outsource completely. The real value often comes from combining both. The teams that treat performance as an ongoing discipline are the ones that spot issues early, maintain a strong user experience, and deliver on business goals, even under pressure.

Take time to assess your current capabilities honestly. If you’re missing tools, expertise, or time, bringing in external specialists for key tests can be a smart move. At the same time, investing in internal processes ensures long-term resilience.

In the end, it’s about keeping your applications healthy, reliable, and ready for whatever real-world demand comes next.