Testing APIs without proper documentation can feel like walking through fog: every endpoint is a guess, every parameter a risk. Swagger UI API testing removes much of that guesswork.

Swagger turns static API definitions into a live, interactive interface where developers and QA teams can validate endpoints, check request/response schemas, and explore the system in real time — all from a browser. It connects documentation, testing, and collaboration in one place.

For teams scaling microservices or adopting CI/CD, Swagger makes early defect detection and contract verification part of the daily workflow. And when functional testing needs to evolve into performance validation, an API load testing tool can confirm that your APIs hold up under stress as well as they do on paper.

What Is Swagger API Testing?

At its core, Swagger API testing revolves around the OpenAPI Specification (OAS) — a standard format that describes every detail of an API, from endpoints and parameters to authentication and expected responses.
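
To make the idea concrete, here is a minimal sketch of what such a definition contains, written as a Python dictionary rather than YAML or JSON. The "Orders" service, its URL, and the `list_operations` helper are hypothetical and exist only for illustration; the field names (`openapi`, `info`, `servers`, `paths`, `components`) follow OpenAPI 3.0.

```python
# Hypothetical minimal OpenAPI 3.0 document for an imaginary "Orders" service.
SPEC = {
    "openapi": "3.0.3",
    "info": {"title": "Orders API (example)", "version": "1.0.0"},
    "servers": [{"url": "https://api.example.com/v1"}],
    "paths": {
        "/orders/{orderId}": {
            "get": {
                "operationId": "getOrder",
                "parameters": [
                    {"name": "orderId", "in": "path", "required": True,
                     "schema": {"type": "integer"}}
                ],
                "security": [{"bearerAuth": []}],
                "responses": {
                    "200": {"description": "The order"},
                    "404": {"description": "Order not found"},
                },
            }
        }
    },
    "components": {
        "securitySchemes": {"bearerAuth": {"type": "http", "scheme": "bearer"}}
    },
}

HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}


def list_operations(spec):
    """Return (METHOD, path, sorted response codes) for every operation."""
    operations = []
    for path, item in spec.get("paths", {}).items():
        for method, operation in item.items():
            if method in HTTP_METHODS:  # skip non-operation keys like "parameters"
                codes = sorted(operation.get("responses", {}))
                operations.append((method.upper(), path, codes))
    return operations


print(list_operations(SPEC))
# [('GET', '/orders/{orderId}', ['200', '404'])]
```

Everything Swagger UI renders (the endpoint list, the parameter form, the documented responses) is derived from a structure like this one.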

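Swagger is not a single tool but a suite that includes Swagger Editor for writing definitions, Swagger UI for interactive documentation, and Swagger Codegen for generating client and server code.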

When you open a service’s OpenAPI file in Swagger UI, you get an instant, browser-based interface that displays all endpoints and allows real-time requests to the API. This makes it ideal for:

From a QA perspective, this means documentation and testing no longer live in silos. Swagger UI is essentially an executable API documentation layer: the same contract, used by both developers and testers. That shared contract reduces miscommunication, shortens debugging cycles, and keeps front-end and back-end teams working from the same source of truth.

In short, Swagger API testing provides a structured overview of your endpoints, turning human-readable documentation into a machine-verifiable testing surface. It’s the first step toward continuous validation and smoother integration across complex microservice ecosystems.

Why Swagger Matters for API Testing

In most teams, API testing used to be a disconnected process — developers wrote code, QA engineers tried to reverse-engineer requests, and documentation quickly went stale. Swagger changed that rhythm.

By enforcing a single source of truth — the OpenAPI definition — Swagger ensures that every stakeholder interacts with the same data model, endpoint list, and validation rules. This shared visibility makes API testing faster, clearer, and less prone to errors.
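
As a rough illustration of what "the same validation rules" can mean in practice, the sketch below checks a response body against a schema taken from the definition. It is deliberately tiny: it understands only `type`, `required`, `properties`, and `items`, and the `ORDER_SCHEMA` and `validate` names are hypothetical. A real project would more likely rely on a full JSON Schema validator.

```python
# Type checks for the small subset of schema types handled here.
# bool is excluded from the numeric types because Python treats it as an int.
TYPE_CHECKS = {
    "string": lambda v: isinstance(v, str),
    "integer": lambda v: isinstance(v, int) and not isinstance(v, bool),
    "number": lambda v: isinstance(v, (int, float)) and not isinstance(v, bool),
    "boolean": lambda v: isinstance(v, bool),
    "array": lambda v: isinstance(v, list),
    "object": lambda v: isinstance(v, dict),
}


def validate(value, schema, where="body"):
    """Return a list of human-readable mismatches between value and schema."""
    expected = schema.get("type")
    check = TYPE_CHECKS.get(expected)
    if check and not check(value):
        return [f"{where}: expected {expected}, got {type(value).__name__}"]

    errors = []
    if expected == "object":
        for name in schema.get("required", []):
            if name not in value:
                errors.append(f"{where}.{name}: missing required property")
        for name, sub_schema in schema.get("properties", {}).items():
            if name in value:
                errors.extend(validate(value[name], sub_schema, f"{where}.{name}"))
    elif expected == "array" and "items" in schema:
        for index, item in enumerate(value):
            errors.extend(validate(item, schema["items"], f"{where}[{index}]"))
    return errors


# Hypothetical schema, as it might appear under components/schemas.
ORDER_SCHEMA = {
    "type": "object",
    "required": ["id", "status"],
    "properties": {"id": {"type": "integer"}, "status": {"type": "string"}},
}

print(validate({"id": 42, "status": "shipped"}, ORDER_SCHEMA))
# []
print(validate({"id": "42"}, ORDER_SCHEMA))
# ['body.status: missing required property', 'body.id: expected integer, got str']
```

Because developers and testers both read the schema from the same definition, a mismatch like the second one points at a disagreement between the contract and the implementation rather than between two people's assumptions.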

Here’s why Swagger has become essential for QA and development teams alike:

Before Swagger vs After Swagger

| Stage | Before Swagger | After Swagger |
| --- | --- | --- |
| Documentation | Static, outdated | Auto-generated, always synced |
| Testing workflow | Manual, tool-dependent | Unified, browser-based |
| Collaboration | QA/dev misalignment | Shared interactive spec |
| Defect discovery | Late in staging | Early, during design |
| Release cycle | Slower, reactive | Faster, continuous |

Ultimately, Swagger testing doesn’t replace existing QA workflows; it streamlines them. It brings clarity where there was fragmentation and bridges the communication gap between code, documentation, and real API behavior.

How to Use Swagger UI

Swagger UI turns an OpenAPI definition into a visual, interactive interface where you can test endpoints directly — no separate client required.
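
Conceptually, executing an endpoint from the UI means combining a server URL from the definition with a path template, the parameter values you typed in, and any auth header. The sketch below reproduces that assembly with Python's standard library. The `build_request` helper, the example URL, and the parameter names are assumptions made for illustration, and the request is only constructed here, not sent.

```python
from urllib.parse import quote, urlencode
from urllib.request import Request


def build_request(server_url, method, path_template,
                  path_params=None, query=None, headers=None):
    """Assemble an HTTP request from OpenAPI-style pieces without sending it."""
    path = path_template
    for name, value in (path_params or {}).items():
        # Substitute {name} placeholders with URL-encoded values.
        path = path.replace("{" + name + "}", quote(str(value), safe=""))
    if "{" in path:
        raise ValueError(f"unfilled path parameter in {path!r}")

    url = server_url.rstrip("/") + path
    if query:
        url += "?" + urlencode(query)
    return Request(url, method=method.upper(), headers=headers or {})


req = build_request(
    "https://api.example.com/v1",          # hypothetical server entry
    "get",
    "/orders/{orderId}",                   # hypothetical path template
    path_params={"orderId": 42},
    query={"expand": "items"},
    headers={"Authorization": "Bearer <token>"},
)
print(req.get_method(), req.full_url)
# GET https://api.example.com/v1/orders/42?expand=items
```

Passing the resulting object to `urllib.request.urlopen` would actually send it, which is roughly the step the UI performs when you execute a call.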

Access the UI

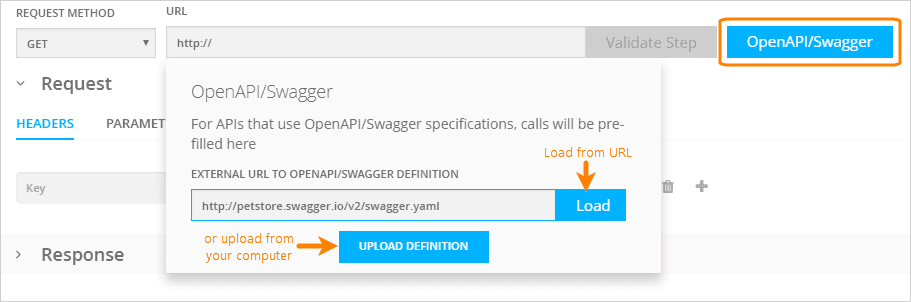

Load/Open an OpenAPI Definition

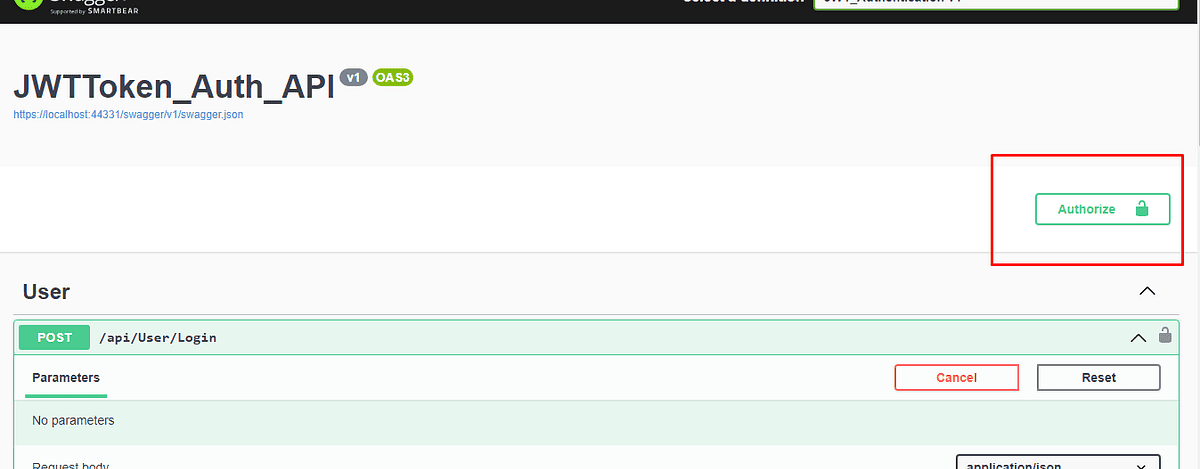

Authorize

Try It Out / Execute

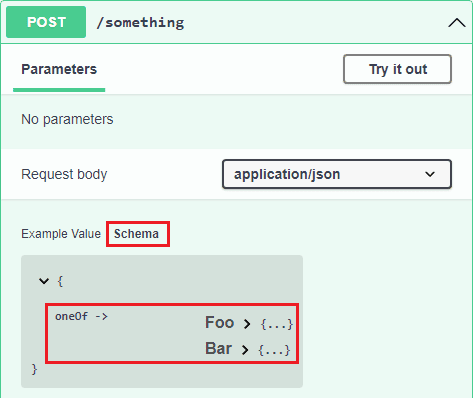

Schema & examples

Environments & versions

Local hosting / embedding

Swagger API Testing Best Practices

Keep your OpenAPI specs versioned and validated

Design for testability in the spec

Automate regression tests for every API update

Use realistic mock data and error cases

Combine Swagger with security checks

Integrate with CI/CD pipelines

Keep environments and versions in sync

Document auth flows completely

Know what Swagger UI is used for vs. what it isn’t

Keep your examples executable

Operationalize quality signals

Team rituals that prevent decay

Swagger vs Postman vs JMeter — Which Tool Should You Use?

Each of these tools — Swagger, Postman, and JMeter — plays a different role in the testing lifecycle. They’re not competitors so much as complementary layers in a mature QA pipeline. Understanding what each does best helps teams choose the right tool for the right stage of validation.

Common Challenges in Swagger API Testing

Even with its intuitive interface and strong alignment with OpenAPI standards, Swagger isn’t a silver bullet. Teams often run into recurring pain points when they start using it beyond basic documentation — particularly when scaling up or integrating with CI/CD. Understanding these challenges helps you anticipate and design around them early.

1. Outdated or Incomplete OpenAPI Specs

Swagger depends entirely on the accuracy of the API definition. If that definition isn’t maintained, the UI becomes misleading instead of helpful.
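
One way to notice this kind of drift early is to compare the operations listed in the definition with the routes the service actually exposes. The sketch below assumes you can obtain the implemented routes as `(METHOD, path)` pairs, for example from your web framework's router. The `spec_drift` helper and all of the sample data are hypothetical.

```python
HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}


def documented_operations(spec):
    """Collect (METHOD, path) pairs declared in an OpenAPI document."""
    return {
        (method.upper(), path)
        for path, item in spec.get("paths", {}).items()
        for method in item
        if method in HTTP_METHODS
    }


def spec_drift(spec, implemented_routes):
    """Report routes missing from the spec and spec entries with no route."""
    documented = documented_operations(spec)
    implemented = set(implemented_routes)
    return {
        "undocumented": sorted(implemented - documented),
        "stale": sorted(documented - implemented),
    }


# Hypothetical data: what the spec says versus what the service exposes.
spec = {
    "paths": {
        "/orders": {"get": {}, "post": {}},
        "/orders/{orderId}": {"get": {}},
    }
}
routes = [
    ("GET", "/orders"),
    ("GET", "/orders/{orderId}"),
    ("DELETE", "/orders/{orderId}"),
]

print(spec_drift(spec, routes))
# {'undocumented': [('DELETE', '/orders/{orderId}')], 'stale': [('POST', '/orders')]}
```

A check like this can fail a build whenever either list is non-empty, so the definition is corrected before anyone relies on the misleading UI.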

2. Environment Inconsistencies and Mock Servers

Swagger makes testing endpoints easy — but only if they point to stable, realistic environments.

3. Version Mismatches Between Development and Production APIs

Swagger UI can expose multiple API versions, but that flexibility can backfire if teams test the wrong one.
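
A lightweight guard is to confirm, before testing, that the definition you loaded matches the deployment you are about to call. The sketch below assumes the deployed version string is available from somewhere, such as a version or health endpoint. That source, the `check_target` helper, and the URLs are all hypothetical.

```python
def check_target(spec, base_url, deployed_version):
    """Return reasons why this spec may not describe the target deployment."""
    problems = []

    spec_version = spec.get("info", {}).get("version")
    if spec_version != deployed_version:
        problems.append(
            f"spec describes version {spec_version}, target reports {deployed_version}"
        )

    # Compare against the server URLs the spec itself declares.
    servers = [s.get("url", "").rstrip("/") for s in spec.get("servers", [])]
    if base_url.rstrip("/") not in servers:
        problems.append(f"{base_url} is not listed under servers in the spec")

    return problems


# Hypothetical spec for a staging deployment of version 2.1.0.
spec = {
    "info": {"title": "Orders API (example)", "version": "2.1.0"},
    "servers": [{"url": "https://staging.example.com/v2"}],
}

print(check_target(spec, "https://staging.example.com/v2", "2.1.0"))
# []
print(check_target(spec, "https://api.example.com/v1", "1.4.0"))
# ['spec describes version 2.1.0, target reports 1.4.0',
#  'https://api.example.com/v1 is not listed under servers in the spec']
```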

4. Limited Automation and Manual Validation

Swagger excels at exploratory testing, but it wasn’t designed as a full automation framework.
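
The manual checks done in the UI can be turned into a small scripted one that reuses the same definition. The sketch below flags any response status that the spec does not document for an operation. The `send` callable is injected so the example runs without a network; in a real suite it would wrap an HTTP client. The helper name, the test cases, and the stubbed responses are hypothetical.

```python
def undocumented_statuses(spec, cases, send):
    """Return (method, url, status) for responses the spec does not document.

    cases: iterable of (METHOD, path_template, concrete_url)
    send:  callable(method, url) -> integer HTTP status code
    """
    failures = []
    for method, path, url in cases:
        responses = spec["paths"][path][method.lower()]["responses"]
        status = str(send(method, url))
        status_range = status[0] + "XX"  # OpenAPI allows range keys such as "2XX"
        documented = (
            status in responses
            or status_range in responses
            or "default" in responses
        )
        if not documented:
            failures.append((method, url, status))
    return failures


# Hypothetical spec fragment and stubbed service behaviour.
spec = {
    "paths": {
        "/orders/{orderId}": {
            "get": {"responses": {"200": {"description": "The order"},
                                  "404": {"description": "Order not found"}}}
        }
    }
}
cases = [
    ("GET", "/orders/{orderId}", "https://api.example.com/v1/orders/42"),
    ("GET", "/orders/{orderId}", "https://api.example.com/v1/orders/0"),
]
stub_statuses = {cases[0][2]: 200, cases[1][2]: 500}


def fake_send(method, url):
    return stub_statuses[url]


print(undocumented_statuses(spec, cases, fake_send))
# [('GET', 'https://api.example.com/v1/orders/0', '500')]
```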

5. Security Gaps and Authentication Drift

Security schemes often change as APIs evolve, but the Swagger spec may lag behind.

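
One possible safeguard is a lint step that checks every operation for a security requirement and confirms that each referenced scheme is actually defined under `components.securitySchemes`. The sketch below is a minimal version of that idea. The `security_gaps` helper and the sample spec are hypothetical, and an operation flagged as having no requirement may be intentionally public, so treat the output as a review list rather than a verdict.

```python
HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}


def security_gaps(spec):
    """List operations with no security requirement or an undefined scheme."""
    defined = set(spec.get("components", {}).get("securitySchemes", {}))
    global_requirements = spec.get("security")
    gaps = []
    for path, item in spec.get("paths", {}).items():
        for method, operation in item.items():
            if method not in HTTP_METHODS:
                continue
            label = f"{method.upper()} {path}"
            # Operation-level security overrides the document-level default.
            requirements = operation.get("security", global_requirements)
            if not requirements:
                gaps.append(f"{label}: no security requirement")
                continue
            for requirement in requirements:
                for scheme_name in requirement:
                    if scheme_name not in defined:
                        gaps.append(f"{label}: unknown scheme {scheme_name!r}")
    return gaps


# Hypothetical spec where the auth section has fallen behind the API.
spec = {
    "components": {
        "securitySchemes": {"bearerAuth": {"type": "http", "scheme": "bearer"}}
    },
    "paths": {
        "/orders": {
            "get": {"security": [{"bearerAuth": []}]},
            "post": {"security": [{"apiKey": []}]},  # scheme was never defined
        },
        "/health": {"get": {}},                      # no requirement at all
    },
}

print(security_gaps(spec))
# ["POST /orders: unknown scheme 'apiKey'", 'GET /health: no security requirement']
```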

6. Overreliance on Swagger for All Testing Needs

Swagger is perfect for API visualization and contract validation, but it’s not meant for high-volume testing.
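
To show how different high-volume testing is from clicking through a UI, here is a deliberately small sketch that fires many calls concurrently and summarizes errors and latency. It is not a substitute for a dedicated load testing tool. The `run_load` helper is hypothetical, and `fake_send` stands in for a real HTTP call so the example runs offline.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def run_load(send, total_requests, workers):
    """Call send() total_requests times across a thread pool and summarize."""

    def timed_call(_):
        start = time.perf_counter()
        try:
            ok = bool(send())
        except Exception:
            ok = False  # count any exception as a failed request
        return ok, time.perf_counter() - start

    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(timed_call, range(total_requests)))

    latencies = sorted(elapsed for _, elapsed in results)
    return {
        "requests": total_requests,
        "errors": sum(1 for ok, _ in results if not ok),
        "max_latency_s": latencies[-1] if latencies else 0.0,
    }


def fake_send():
    """Stand-in for a real request: pretend the call takes about 10 ms."""
    time.sleep(0.01)
    return True


stats = run_load(fake_send, total_requests=20, workers=5)
print(stats["requests"], stats["errors"])
# 20 0
```

Even this toy version needs concurrency, timing, and aggregation, none of which Swagger UI is designed to provide.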

Final Thoughts

Swagger is best when used for what it was built to do: make API documentation testable and transparent. It bridges development and QA, turning static specs into something you can actually run, verify, and trust.

It’s not a load-testing or automation framework — and that’s fine. Swagger belongs at the stage where you need to check that endpoints behave as documented, that contracts are clear, and that teams speak the same language.