API performance testing is a critical component of any software development process. It verifies that your application responds quickly, reliably, and accurately to user requests. But with so many metrics to consider, which ones should you focus on? In this post, we’ll explore eight key metrics that are essential for evaluating the performance of your APIs.

You can also find some helpful information in our API mocking guide.

Grab a cup of coffee, and let’s dive into the world of API performance testing together!

1. Response Time: The Speed of Your API

When discussing API performance testing, response time is the first metric that comes to mind, and for good reason. It measures the duration from when a request is sent to when the response is received. Fast response times are crucial for user satisfaction, as they directly shape the speed and fluidity of the end-user experience. Ideally, your API should maintain consistent response times under varying load, not just a good average, so that users get a reliable experience. Regular monitoring helps identify fluctuations in response times, which could indicate underlying issues that need immediate attention. See also: How to improve API performance.
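One way to make "consistent response times" measurable is to look at percentiles instead of a single average. The sketch below is only an illustration: `summarize_latencies` is a hypothetical helper, and the sample values are made up rather than taken from a real test run.

```python
import statistics


def summarize_latencies(samples_ms):
    """Summarize response times (in milliseconds) as mean and percentiles."""
    # quantiles(n=100) returns 99 cut points; index 49 is the 50th percentile.
    cuts = statistics.quantiles(samples_ms, n=100, method="inclusive")
    return {
        "mean": statistics.mean(samples_ms),
        "p50": cuts[49],
        "p95": cuts[94],
        "p99": cuts[98],
        "max": max(samples_ms),
    }


# Made-up sample: most requests are fast, a handful are very slow.
samples = [20] * 95 + [900] * 5
summary = summarize_latencies(samples)
print(summary)
```

In a sample like this one, the mean lands well above the median because a few slow requests pull it up, which is why tail percentiles such as p95 and p99 are usually tracked alongside it.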

2. Success Rate: Measuring Reliability

A high success rate, measured as the percentage of requests that return a successful response, signals a healthy, reliable API. This metric shows how often API calls actually deliver the expected outcome and, by extension, how often they fail to. Monitoring the success rate helps in optimizing API performance and reliability, ensuring that users get a seamless experience. Applications in domains such as e-commerce, banking, and healthcare cannot afford frequent downtime or errors, making the success rate an indispensable metric to track.
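As a rough sketch, success rate can be computed from the status codes collected during a test run. The helper name `success_rate` is hypothetical, and treating only 2xx responses as successful is an assumption; some teams also count redirects or validate the response body.

```python
def success_rate(status_codes):
    """Return the percentage of responses with a 2xx status code."""
    if not status_codes:
        raise ValueError("no responses recorded")
    # Assumption: only 2xx counts as success.
    successes = sum(1 for code in status_codes if 200 <= code < 300)
    return 100.0 * successes / len(status_codes)


# Made-up results from a short test run.
codes = [200, 200, 201, 500]
print(f"Success rate: {success_rate(codes):.1f}%")
```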

3. Error Rate: Keeping Tabs on Faults

The error rate goes hand in hand with the success rate, providing insights into the frequency and nature of errors your API encounters. Whether it’s a 4xx client error or a 5xx server error, keeping the error rate low is paramount. Errors can stem from various factors, including API endpoint problems or issues with the server’s infrastructure. Using tools that alert you to unusual spikes in error rates can prevent significant disruptions. Pinpointing where errors come from also improves the user experience, because it lets developers address and rectify issues swiftly.
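The split between client and server errors, plus a very simple spike check, might look like the sketch below. Both function names and the thresholds (`factor`, `floor_pct`) are illustrative assumptions, not recommended values; real alerting tools usually apply more robust statistics.

```python
from collections import Counter


def error_breakdown(status_codes):
    """Return the percentage of 4xx and 5xx responses in a test run."""
    if not status_codes:
        raise ValueError("no responses recorded")
    # Group codes by their first digit: 4 -> client errors, 5 -> server errors.
    classes = Counter(code // 100 for code in status_codes)
    total = len(status_codes)
    return {
        "client_error_pct": 100.0 * classes[4] / total,
        "server_error_pct": 100.0 * classes[5] / total,
    }


def is_spike(current_pct, baseline_pct, factor=3.0, floor_pct=1.0):
    """Flag an error rate that is both non-trivial and well above baseline."""
    return current_pct >= floor_pct and current_pct > factor * baseline_pct


# Made-up run: eight successes, one 404, one 503.
breakdown = error_breakdown([200] * 8 + [404, 503])
print(breakdown)
print("Spike:", is_spike(breakdown["server_error_pct"], baseline_pct=1.0))
```

Separating the two classes matters because 4xx errors often point at callers or documentation, while 5xx errors point at the service itself.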

4. Concurrent Users: Understanding Load Capacity

APIs must cater to varying numbers of concurrent users without compromising on performance (learn more about concurrent users vs simultaneous users). This metric gives you an idea of the load your API can handle before performance starts to degrade. Stress testing or load testing, using an API load testing tool, helps assess how your API performs under peak conditions, ensuring smooth scalability. Preparing your API to handle sudden spikes in traffic, especially during key business events, ensures a stable experience for every user.
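To illustrate how latency can degrade once concurrency exceeds capacity, here is a small simulation. Everything in it is an assumption made for the demo: `fake_api_call` stands in for a real HTTP request, and the semaphore models a hypothetical backend that can serve only four requests at once. A dedicated load testing tool would replace all of this in practice.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor

# Hypothetical backend that can process only 4 requests at a time.
_capacity = threading.Semaphore(4)


def fake_api_call():
    """Simulate one request and return its latency in seconds."""
    start = time.perf_counter()
    with _capacity:        # wait for a free backend slot
        time.sleep(0.02)   # simulated processing time
    return time.perf_counter() - start


def run_step(users, requests_per_user=3):
    """Run `users` concurrent sessions and return the average latency."""
    def user_session(_):
        return [fake_api_call() for _ in range(requests_per_user)]

    with ThreadPoolExecutor(max_workers=users) as pool:
        sessions = list(pool.map(user_session, range(users)))
    latencies = [t for session in sessions for t in session]
    return sum(latencies) / len(latencies)


# Step up the number of concurrent users and watch the average latency.
results = {users: run_step(users) for users in (2, 4, 16)}
for users, avg in results.items():
    print(f"{users:>2} users: avg latency {avg * 1000:.1f} ms")
```

The point where average latency starts climbing is a rough indicator of the load the system can take before performance degrades.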

5. Throughput: The Flow of Data

Throughput measures the number of requests an API can handle within a given timeframe and is a direct indicator of its efficiency. High throughput under stress signifies a robust infrastructure that can deal with heavy traffic. It’s essential for APIs that support data-intensive operations, serving as a benchmark for optimizing system capacity and planning for growth. Employing product performance testing services can also help ensure your infrastructure remains stable under varying conditions. For a deeper understanding of how to test web applications effectively and improve performance, check out our guide on how to test web applications on the PFLB blog. Monitoring throughput alongside other metrics like response time and success rate offers a comprehensive view of your API’s performance.
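Throughput is commonly reported as requests per second. A minimal sketch, assuming you have recorded the completion time of each request in seconds since the start of the test (the helper name and the timestamps are made up):

```python
from collections import Counter


def throughput_stats(completion_times):
    """Return average and peak requests per second for a test run."""
    if not completion_times:
        raise ValueError("no requests recorded")
    # Bucket each completed request into the whole second it finished in.
    per_second = Counter(int(t) for t in completion_times)
    first, last = min(per_second), max(per_second)
    window_seconds = last - first + 1  # include seconds with zero requests
    return {
        "avg_rps": len(completion_times) / window_seconds,
        "peak_rps": max(per_second.values()),
    }


# Made-up completion timestamps (seconds since test start).
times = [0.1, 0.5, 0.9, 1.2, 2.5, 2.6]
stats = throughput_stats(times)
print(stats)
```

Comparing the average with the peak gives a first hint of how bursty the traffic was during the run.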

6. Server Utilization: Resource Efficiency

Server utilization tracks the resources consumed by your API, including CPU, memory, and disk I/O. Efficient use of server resources ensures that your API remains responsive and stable under varying loads. Sustained high utilization may indicate that your API needs optimization or that it’s time to scale your infrastructure. Using one of the best API load testing tools can help identify performance bottlenecks by simulating real-world conditions and assessing how efficiently your API manages resource allocation. Tools that monitor server-side performance provide insights into how well your API uses underlying hardware, enabling you to make informed decisions about optimization and scaling.
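Real utilization monitoring normally relies on OS-level or APM tooling, but the basic idea of CPU utilization can be shown with the standard library alone. The sketch below is a rough illustration, not a monitoring solution: `cpu_share` is a hypothetical helper that compares the CPU time used by the current process with the wall-clock time that passed.

```python
import time


def cpu_share(work):
    """Run `work()` and return CPU time used divided by wall-clock time.

    A value near 1.0 means roughly one core was kept busy; a value near
    0.0 means the process mostly waited (for example on I/O or sleep).
    Multi-threaded work can push the value above 1.0.
    """
    wall_start = time.perf_counter()
    cpu_start = time.process_time()
    work()
    cpu_used = time.process_time() - cpu_start
    wall_used = time.perf_counter() - wall_start
    return cpu_used / wall_used if wall_used > 0 else 0.0


# CPU-bound work versus idle waiting (both are stand-ins for request handling).
busy = cpu_share(lambda: sum(i * i for i in range(2_000_000)))
idle = cpu_share(lambda: time.sleep(0.1))
print(f"CPU-bound: {busy:.2f}, waiting: {idle:.2f}")
```

A handler that shows high latency with a low CPU share is likely waiting on something else, such as a database or a downstream service, which changes what kind of optimization will help.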

7. Time to First Byte (TTFB): The Initial Step

Time to First Byte (TTFB) measures the time from making a request to receiving the first byte of the response. It’s an important aspect of performance that affects the perceived responsiveness of your API. A delayed TTFB can be a sign of server-side bottlenecks, indicating problems such as slow database queries or inadequate server resources. By minimizing TTFB, you ensure faster data delivery, improving the overall speed of your application.
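A simple way to approximate TTFB from the client side is sketched below. It is a demo under stated assumptions: `SlowHandler` is a made-up local endpoint that sleeps for 50 ms to imitate a slow database query, and the measurement stops when `getresponse()` returns, which is when the status line and headers have arrived, so it is close to but not exactly the first byte. The timing here also includes connection setup.

```python
import http.client
import threading
import time
from http.server import BaseHTTPRequestHandler, HTTPServer


class SlowHandler(BaseHTTPRequestHandler):
    """Hypothetical endpoint that 'thinks' for 50 ms before answering."""

    def do_GET(self):
        time.sleep(0.05)  # stand-in for a slow database query
        body = b"x" * 1024
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the demo output quiet


def measure_ttfb(host, port, path="/"):
    """Return (ttfb_seconds, total_seconds) for a single GET request."""
    conn = http.client.HTTPConnection(host, port, timeout=5)
    try:
        start = time.perf_counter()
        conn.request("GET", path)       # connects, then sends the request
        response = conn.getresponse()   # returns once headers have arrived
        ttfb = time.perf_counter() - start
        response.read()                 # download the rest of the body
        total = time.perf_counter() - start
        return ttfb, total
    finally:
        conn.close()


# Start the demo server on a free local port and measure one request.
server = HTTPServer(("127.0.0.1", 0), SlowHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()
ttfb, total = measure_ttfb("127.0.0.1", server.server_port)
server.shutdown()
server.server_close()
print(f"TTFB: {ttfb * 1000:.1f} ms, total: {total * 1000:.1f} ms")
```

When TTFB accounts for most of the total time, as it would here, the delay sits in server-side processing rather than in transferring the payload.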

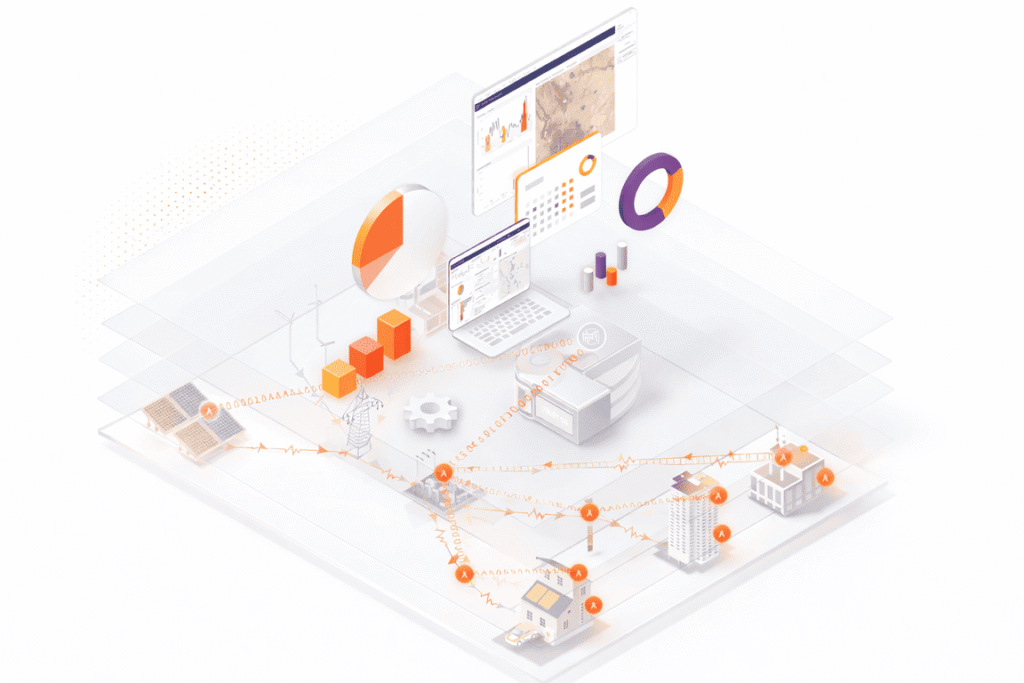

8. API Dependencies: The Impact on Performance

Most modern applications rely on multiple APIs, making the performance of one dependent on others. Tracking how these dependencies affect your API’s performance is crucial. Issues in a third-party API can adversely impact your app’s functionality, leading to slow response times or errors. Implementing redundancies and setting service level agreements (SLAs) with third parties can mitigate risks. This metric helps in understanding the broader ecosystem your API operates within, ensuring that external dependencies do not hinder your app’s overall performance.
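To see how much of a request is spent waiting on dependencies, you can time each outbound call separately. The sketch below is illustrative only: `track`, `handle_request`, and the dependency names `payments_api` and `geo_api` are hypothetical, and the `time.sleep` calls stand in for real third-party requests.

```python
import time
from collections import defaultdict
from contextlib import contextmanager

# Accumulated seconds spent waiting on each named dependency.
dependency_seconds = defaultdict(float)


@contextmanager
def track(name):
    """Record how long the wrapped block spends on dependency `name`."""
    start = time.perf_counter()
    try:
        yield
    finally:
        dependency_seconds[name] += time.perf_counter() - start


def handle_request():
    """Hypothetical handler that calls two third-party APIs."""
    with track("payments_api"):
        time.sleep(0.03)  # stand-in for a slow external call
    with track("geo_api"):
        time.sleep(0.01)  # stand-in for a faster external call


start = time.perf_counter()
handle_request()
total = time.perf_counter() - start

for name, seconds in sorted(dependency_seconds.items(), key=lambda kv: -kv[1]):
    print(f"{name}: {seconds * 1000:.1f} ms ({100 * seconds / total:.0f}% of request)")
```

A breakdown like this makes it easier to decide where a timeout, a cache, or a fallback would reduce the impact of a slow third party.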