One-of-a-kind AI tool cuts reporting time, boosts productivity, and makes performance data accessible to all.

PFLB is proud to introduce AI Reports, a first-of-its-kind feature that’s set to reshape how performance engineering teams handle reporting. Built to tackle one of the most time-consuming parts of the job, AI Reports automatically analyzes test results, identifies issues, and generates full, readable reports with no manual formatting needed. Internal data shows it can save teams up to 10% of their total work time, simply by taking report writing off their plate. It’s a smarter, clearer way to work with performance data.

What Makes It Unique

What sets AI Reports apart is that it’s not just another AI text generator wrapped around test results. It’s powered by PFLB’s own proprietary data model, built from the ground up for performance engineering. It’s a system that actually understands performance data.

The AI can identify issues on its own, without needing to be told where to look. It scans test results, spots anomalies, and pinpoints where things went wrong, then explains those findings clearly and in context. It also interprets performance graphs, highlighting key moments and describing what’s happening and why it matters.

And when the report is ready, it’s easy to share — no login required. Just send a link, and anyone can view the full interactive report, whether they’re part of the platform or not.

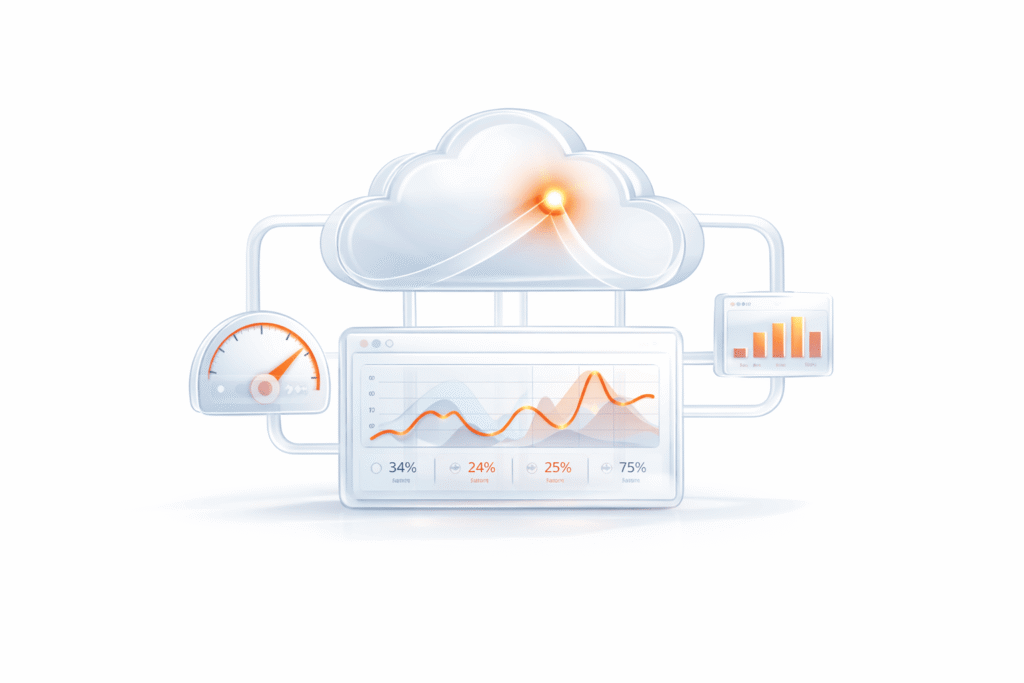

Interactive Visuals

AI Reports delivers fully interactive graphs that make digging into your performance data quicker and more intuitive. You can hover over data points to see exact values, explore trends, and adjust the view to focus on what matters most.

The visual tools are designed to help engineers move faster and with more precision. They offer a hands-on way to explore results and a major step up from the usual static reports.

Fully Automated Reports

With AI Reports, the entire reporting process is handled automatically — from analysis to full write-up. It builds complete reports that often match or even exceed the quality of what a junior engineer might produce manually.

Because the system is built on consistent logic and a deep understanding of performance metrics, you get reliable, high-quality reporting every time, no matter who ran the test. That means fewer discrepancies, fewer missed insights, and less reliance on someone’s writing skills to get the message across.

It’s reporting that works the same way, every time — clear, accurate, and consistent.

For Managers & Stakeholders

AI Reports was also built with managers and stakeholders in mind.

The reports are written in clear, natural language that makes complex performance data easy to understand, even for those without a technical background.

Instead of vague summaries or raw graphs, managers get a focused narrative: what happened during the test, where potential issues lie, and what needs attention. That makes it easier to connect performance metrics with business outcomes, whether it’s ensuring stability before a launch or tracking improvements over time.

By bridging the gap between engineers and decision-makers, AI Reports helps teams move faster and collaborate better — without needing someone to translate in between.

Efficiency and ROI

Writing reports has always been one of the most time-consuming parts of performance testing — and one of the easiest to overlook. With AI Reports, teams can save up to 10% of their total work time, based on internal studies. That’s time engineers can now spend analyzing deeper issues, optimizing systems, or simply moving on to the next task.

Beyond saving time, automated reports also reduce the mental load that comes with context-switching between testing and documentation. It’s a small shift that adds up: less manual effort, fewer delays, and more consistent output across the board.

Sharing and Accessibility

One of the most practical features is the ability to share reports via a simple link — no registration required. The shared report includes everything: the full write-up, interactive graphs, and all the underlying data. It’s a seamless way to bring others into the loop without exporting, emailing files, or setting up access rights.

Availability

AI Reports is currently available in beta for all registered PFLB users. If you’re already using the platform, you can start exploring the feature right away and see how it fits into your workflow. Want to see more innovations like this? Read our overview of AI in Load Testing.