How Granularity Influences the Load Testing Results with Grafana+InfluxDB & LoadRunner Analysis

PFLB has worked on load test analysis and test process consulting for many years, and during that time we’ve tried out many tools and technologies. In this article, we explain how different configurations of LoadRunner Analysis and Grafana+InfluxDB influence the results and account for the differences in the data.

When the operation intensity is high, around 4-5 million operations per hour, result analysis in LoadRunner Analysis takes a long time. To shorten the analysis, we decided to try alternative software and chose Grafana+InfluxDB. As it turned out, the results shown by the two tools differed significantly: LoadRunner Analysis reported response times several times lower and a maximum performance level up to 40 percentage points higher. As a result, the performance reserve appeared to be 80% instead of the actual 40%.

Using LoadRunner Analysis

LoadRunner Analysis is software for analyzing executed tests. It takes the dump created by the Controller during testing. The dump contains raw data, which LoadRunner Analysis processes to build tables and graphs.

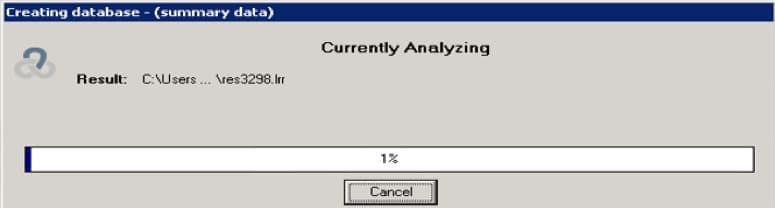

To create a new session in LoadRunner Analysis:

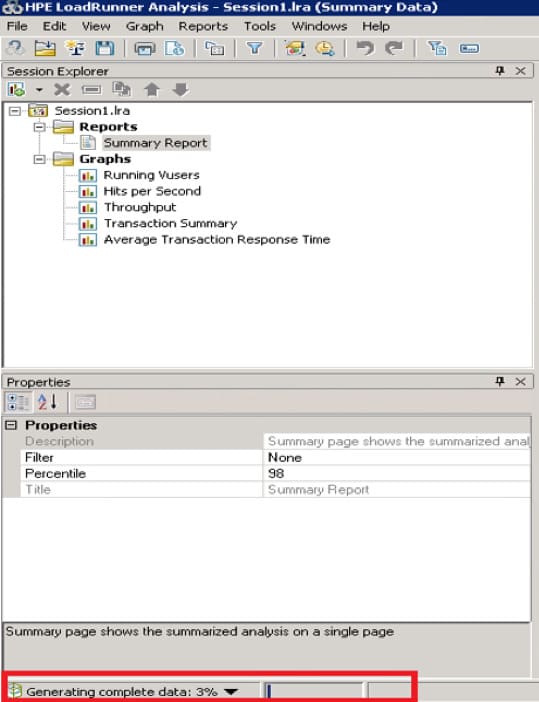

Now we have the complete data from LoadRunner Analysis and can interpret it. The program displays preliminary results first, but the final results are available only after “Generate complete data” has finished.

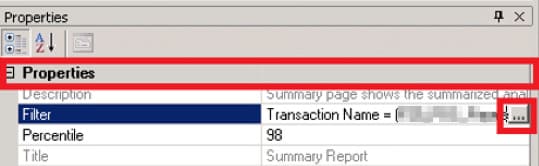

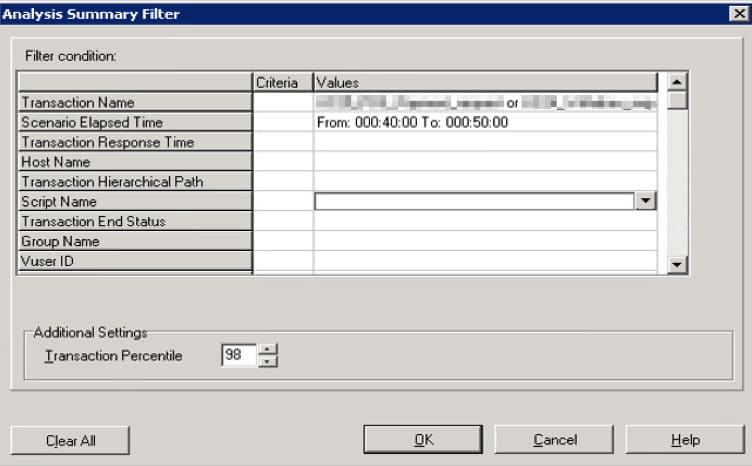

It’s possible to customize the filter, for example, by the transaction name, granularity, or percentile:

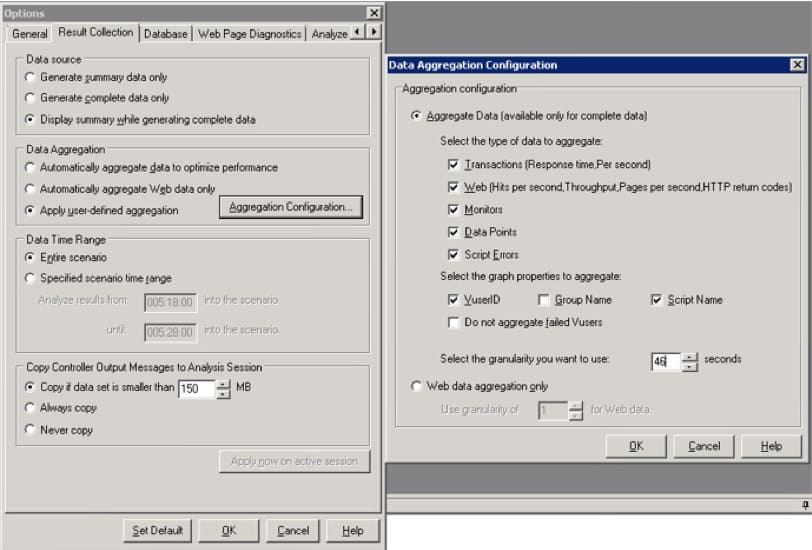

Configuring LoadRunner Analysis on our project.

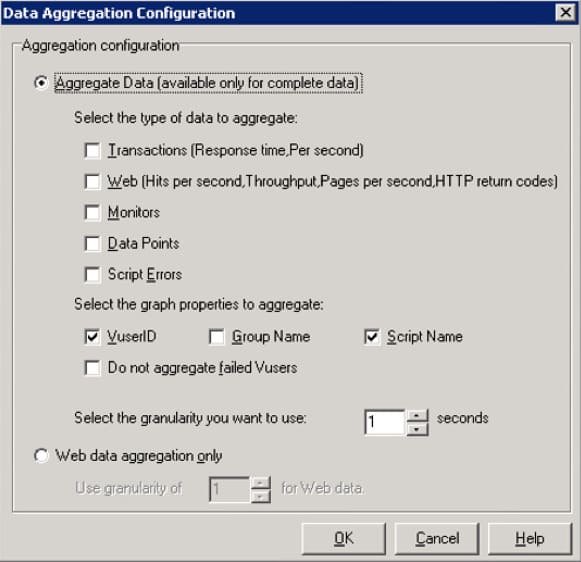

We are using the following LoadRunner Analysis configurations:

Problems working with LoadRunner Analysis

The approach described above takes a lot of time to:

The more errors a test produces, the longer LoadRunner Analysis takes to aggregate and save the session.

While LoadRunner Analysis is running, there are the following problems:

We’ve collected statistics on LoadRunner Analysis runtime depending on the test data volume in the following table:

| Test runtime | Maximum TPS | Raw data, MByte | Processing, minutes | Creating a graph, seconds | Applying a filter, seconds |
| --- | --- | --- | --- | --- | --- |
| 3 hours 45 minutes | 169 | 175 | 20 | 30 | 10-30 |
| 9 hours 20 minutes | 1004 | 398 | 60 | 60 | 20-80 |
| 9 hours 30 minutes | 1041 | 728 | 100 | 90-120 | 20-120 |
| 6 hours | 3800 | 3258 | 900 | ~600 | ~600 |
| > 6 hours | 7500 | 8146 | ~1440 | ~1800 | ~1800 |

Note that the data was collected with the granularity set to 1 second and without the Web and Transaction flags. See the “Correct Configuration for LoadRunner Analysis and Grafana+InfluxDB” section for details.

Using Grafana+InfluxDB

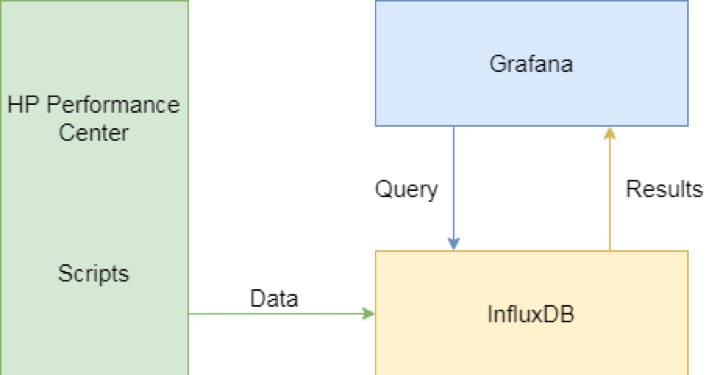

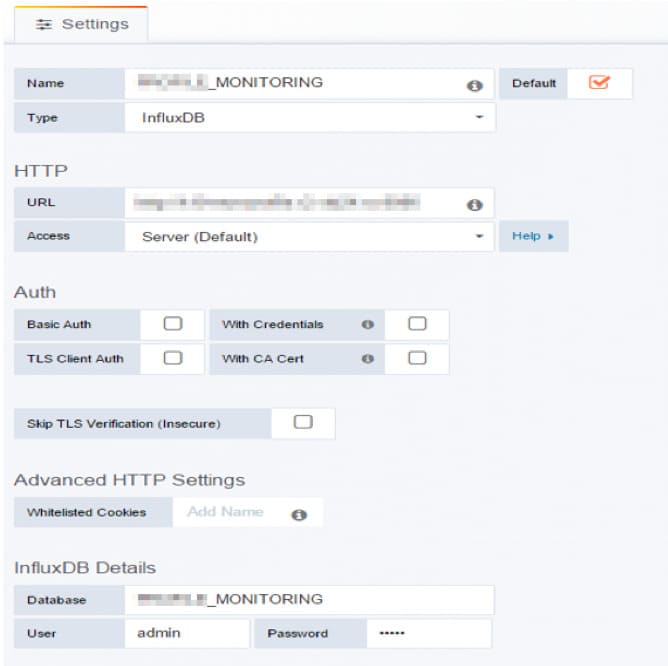

An alternative approach to retrieving the test results is to use Grafana+InfluxDB.

Grafana is an open-source platform to visualize, monitor, and analyze data.

InfluxDB is an open-source time series database aimed at high-load storage and retrieval of time series data.

Here is the solution’s scheme:

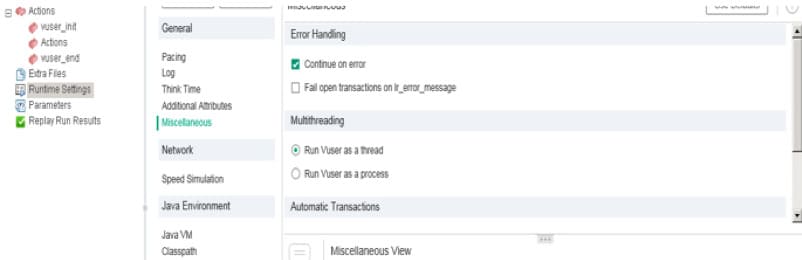

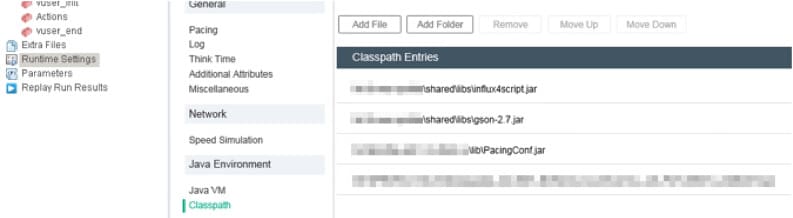

We send the runtime data from every load script to InfluxDB. Scripts written in JavaVU and in C send the data differently.

JavaVU

To send the data, initialize the client in init():

```java
public int init() throws Throwable {
    // Connection settings are read from influx_connect.json (shown below).
    this.client = new InfluxClient("\\\\{host}\\datapools\\params\\influx_connect.json");
    this.client.setUniq(scriptNum, lr.get_vuser_id(), lr.get_group_name());
    this.client.enableBatch();
    return 0;
}
```

influx_connect.json contains:

```json
{
    "host": "{host}:{port}",
    "db": "MONITORING",
    "login": "admin",
    "password": "admin",
    "count": 10000,
    "delay": 30000
}
```

Each transaction result is then written to InfluxDB:

```java
// Extract the elapsed time (in milliseconds) from the response and convert it to seconds.
String elapsedTime = findFirst(lr.eval_string("{RESPONSE}"), "<elapsedTime>([^<]+)</elapsedTime>");
double elapsedTimeInSec = Double.valueOf(elapsedTime) / 1000;

if (isResponseCodeOK(lr.eval_string("{RESPONSE}"))) {
    lr.set_transaction("UC" + transaction, elapsedTimeInSec, lr.PASS);
    this.client.write("MONITORING", elapsedTimeInSec, "Pass", "UC" + transaction, "processing");
} else {
    lr.error_message("Transaction UC" + transaction + " FAILED [PAN=" + lr.eval_string("{PAN}")
            + " SUM=" + lr.eval_string("{SUM}") + "]");
    lr.set_transaction("UC" + transaction, elapsedTimeInSec, lr.FAIL);
    this.client.write("MONITORING", elapsedTimeInSec, "Fail", "UC" + transaction, "processing");
}
```

C

Load scripts written in C send the data to InfluxDB through its HTTP API.

Storage

In the simplest case, the write request to InfluxDB looks like this:

```c
web_rest("influxWrite",
    "URL=http://{host}:{port}/write?db={db}",
    "Method=POST",
    /* Line protocol: measurement and tags, a space, then the fields. */
    "Body={tbl},Transaction={tran_name},Status={Status} ElapsedTime={ElapsedTime},Vuser={vuserid}",
    LAST);
```

In the request, we write to the “table” {tbl} the tags with the transaction name and the current status, as well as the fields with the business operation execution time and the virtual user ID (Vuser ID) of the current transaction. The Vuser ID can be used to build a graph of the number of virtual users in Grafana.
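The structure of that request body can be sketched in Java as follows. This is only an illustration of the InfluxDB line protocol layout (measurement and tags, one space, then fields). The class, the method names, and the measurement name `transactions` are hypothetical and not taken from the project scripts.

```java
/** Illustrative helper; not part of LoadRunner or of the scripts in this article. */
final class LineProtocolSketch {

    /** Escapes commas, equals signs, and spaces, which line protocol requires for tag values. */
    static String escapeTag(String value) {
        return value.replace(",", "\\,").replace("=", "\\=").replace(" ", "\\ ");
    }

    /**
     * Builds one point: "measurement,tag=...,tag=... field=...,field=...".
     * The measurement name is assumed to contain no commas or spaces.
     */
    static String buildPoint(String measurement, String transaction, String status,
                             double elapsedTime, int vuserId) {
        return measurement
                + ",Transaction=" + escapeTag(transaction)
                + ",Status=" + escapeTag(status)
                + " ElapsedTime=" + elapsedTime   // single space separates tags from fields
                + ",Vuser=" + vuserId;
    }

    public static void main(String[] args) {
        // "transactions" is a hypothetical measurement name standing in for {tbl}.
        System.out.println(buildPoint("transactions", "UC56", "Pass", 9.0, 12));
    }
}
```

Tags (Transaction, Status) are indexed and suit grouping and filtering, while fields (ElapsedTime, Vuser) carry the measured values, which is why the execution time is sent as a field.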

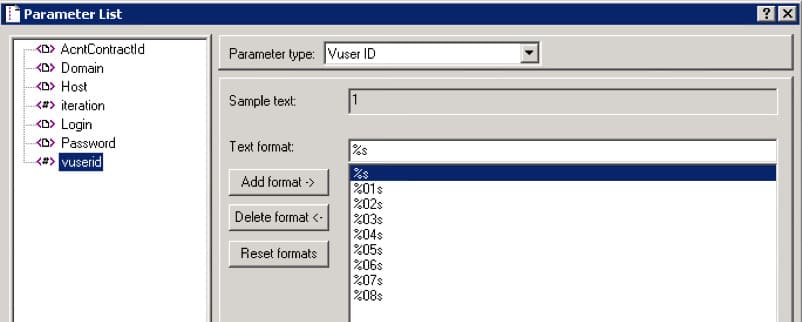

VuserID

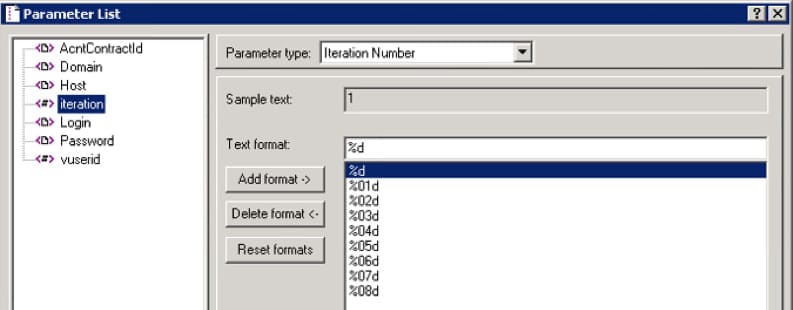

The Vuser ID value can be passed as a parameter in the script. Add a parameter of the Vuser ID type:

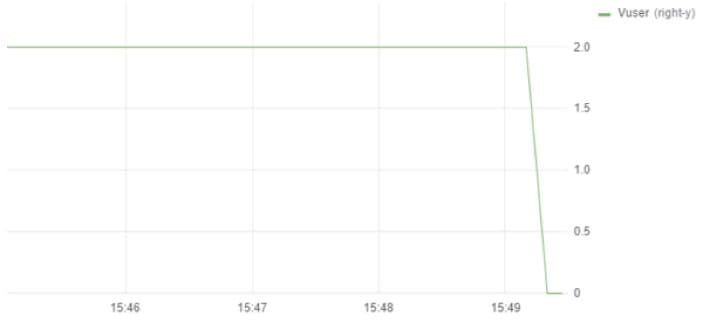

If the transaction pacing is longer than the aggregation interval of the graph, the virtual user (VU) graph comes out with zig-zags.

To get a graph without zig-zags, we can group the data by larger time intervals, e.g., 30 seconds. Another approach is to store the VU values more often while executing the business operation only on some of the script iterations.

For example, a pacing of 27 seconds can be reduced to 9 seconds while the business operation is executed only every third iteration. The same script then produces 4 points instead of 2 on a 27-second interval, which makes the graph smoother.
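The arithmetic behind this example can be written out as a short Java sketch. It assumes that one point is written at the start of each iteration and that both ends of the 27-second interval are counted. The class and method names are hypothetical.

```java
/** Illustrative arithmetic only; the names are hypothetical. */
final class PacingSketch {

    /** Number of points on [0, windowSec] when one point is written every pacingSec. */
    static int pointsInWindow(int windowSec, int pacingSec) {
        return windowSec / pacingSec + 1; // +1 counts the point at t = 0
    }

    /** The business operation runs only on every n-th iteration. */
    static boolean runsBusinessOperation(int iteration, int everyNth) {
        return iteration % everyNth == 0;
    }

    public static void main(String[] args) {
        System.out.println(pointsInWindow(27, 27)); // pacing of 27 seconds
        System.out.println(pointsInWindow(27, 9));  // pacing reduced to 9 seconds
        for (int it = 1; it <= 6; it++) {
            System.out.println(it + " -> " + runsBusinessOperation(it, 3));
        }
    }
}
```

With the reduced pacing, the business operation still runs once per 27 seconds (on iterations 3, 6, and so on), so the load on the system stays the same while the VU series gets more points.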

Iteration Number

To monitor the business-operation start time, we can use the integrated iteration counter by creating a parameter of Iteration Number type:

The complete script

Web_rest(

"influxWrite",

"URL=http://{host}:{port}/write?db={db}",

"Method=POST",

"Body={tbl},Transaction={tran_name},Status={Status}

ElapsedTime={ElapsedTime},Vuser={yuserid)",

LAST

);it = atoi(lr eval string("{iteration}"));

if(it%3 == 0){request_trans_handle = lr_start_transaction_instance("RequestTran",0);This can also be done directly in the code:

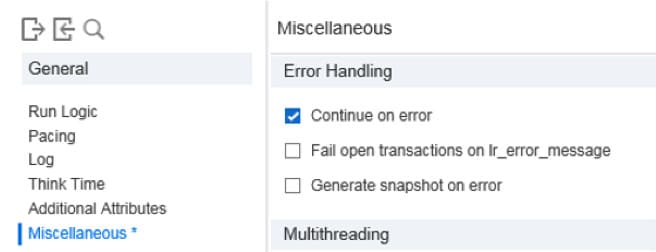

lr_continue_on_error(1);Web_url(...);lr_continue_on_error(0);

trans_time = lr_get_trans_instance_duration(request_trans_handle);

lr_save_int(trans_time*1000, "ElapsedTime");

lr_end_transaction_instance(request_trans_handle, LR_PASS);Status=Pass to InfluxDB, otherwise – Status=Fail:

Web_rest(

"influxWrite",

"URL=http://{host}:{port}/write?db={db}",

"Method=POST",

"Body={tbl},Transaction={tran_name},Status={Fail/Pass}

ElapsedTime={ElapsedTime}.Vuser={vuserid}",

LAST

);Utilization of the server, where InfluxDB+Grafana are installed

Most importantly, we need to keep an eye on RAM usage.

We measured RAM usage during a 6-hour maximum-performance test with a peak intensity of 4.8 million operations per hour:

| Test stage | RAM used |
| --- | --- |
| Test start | 609 MByte |
| Test middle | 2181 MByte |
| Test end | 3593 MByte |

Solution’s advantages

This approach lets us watch the chosen metrics in real time with maximum precision. We also don’t need to apply filters one after another to generate the desired tables and graphs.

Result Comparison

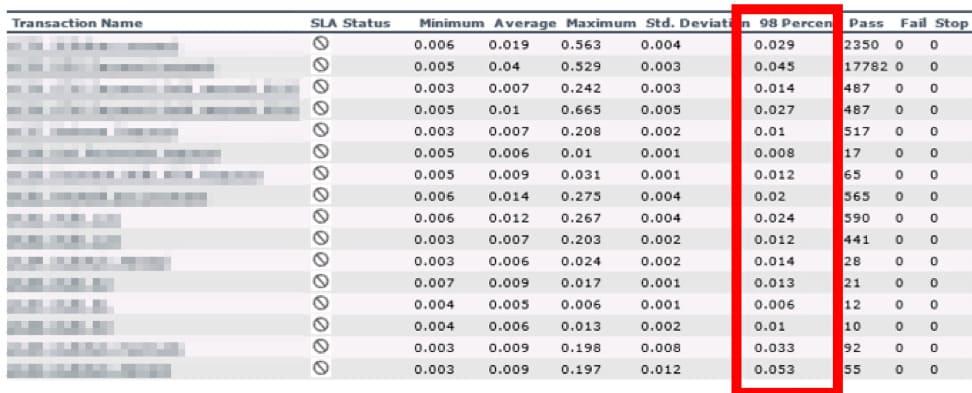

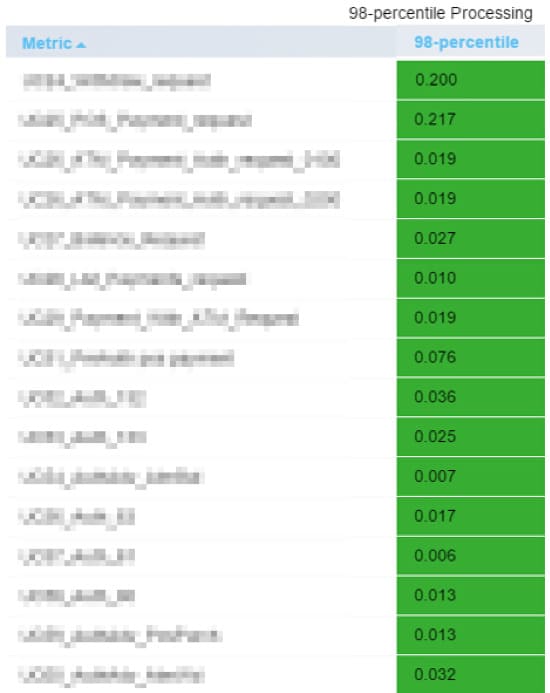

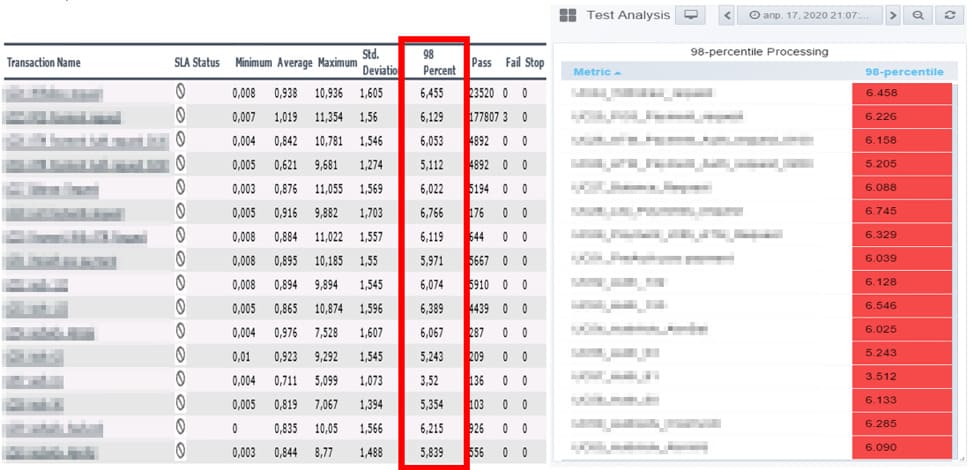

While comparing results from LoadRunner Analysis and Grafana+InfluxDB, we found differences in the data:

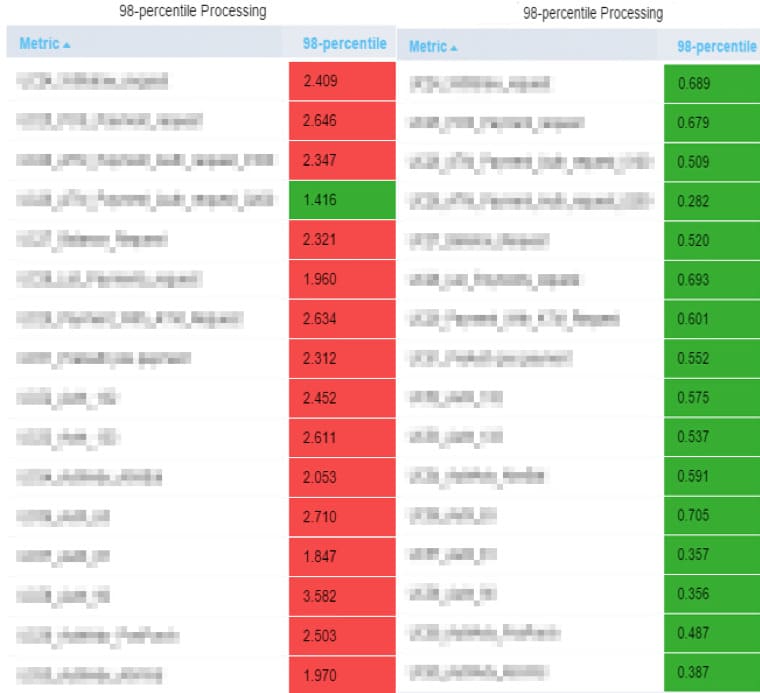

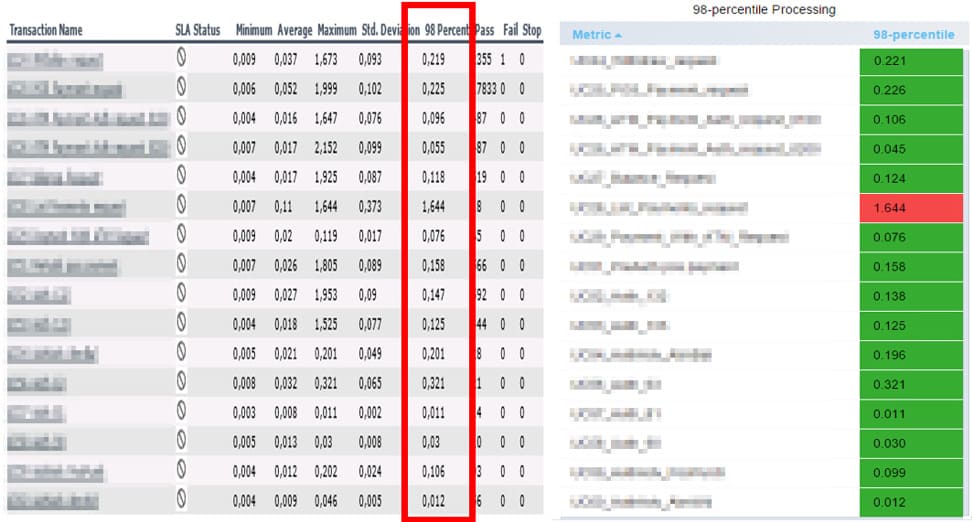

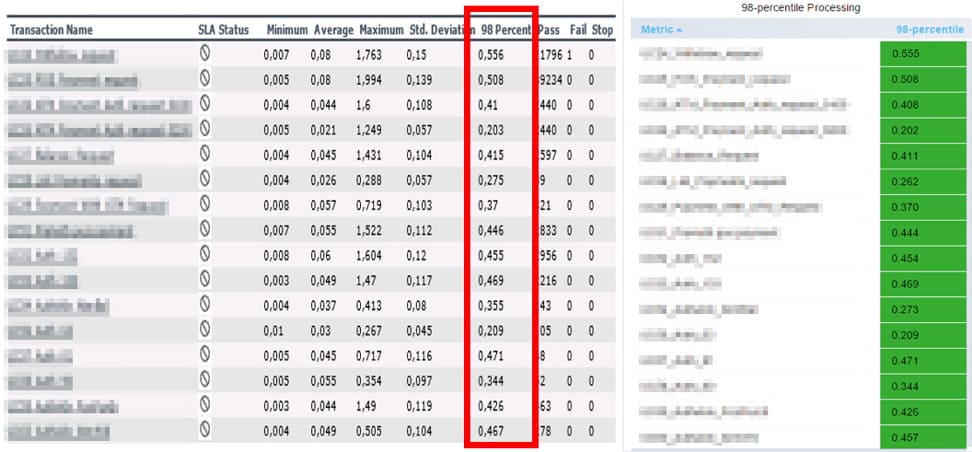

The difference was most visible in the 98th-percentile response time of operation processing, which by requirement must not exceed 1.5 seconds.

In one such test, the maximum performance level was 140% according to Grafana+InfluxDB but 180% according to LoadRunner Analysis. The 98th-percentile response times reported by LoadRunner Analysis were 2-10 times lower.

Level 160% Grafana+InfluxDB / Level 140% Grafana+InfluxDB

Level 160% LoadRunner Analysis

Level 180% LoadRunner Analysis

Level 200% LoadRunner Analysis

Solving the results difference problem

To analyze the differences, we chose a low-intensity operation, UC56. It produces about 2 values per minute, so 23 values were recorded in 10 minutes: 0.008; 0.009; 0.009; 0.01; 0.007; 0.009; 0.017; 0.007; 0.008; 0.008; 0.015; 0.009; 0.009; 0.009; 0.009; 0.009; 0.009; 0.009; 0.008; 0.009; 0.009; 0.009; 0.008.

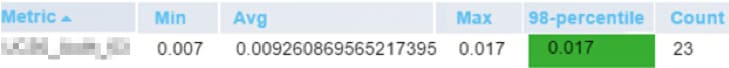

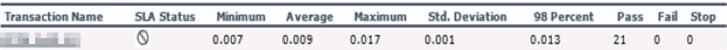

We’ve calculated the 98th percentile from the raw data sent to InfluxDB, which is equivalent to the data used by LoadRunner Analysis. The result matched the value shown by Grafana+InfluxDB (0.017):

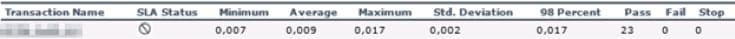
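The calculation can be reproduced with a short Java sketch over the 23 UC56 values listed above. The nearest-rank definition of a percentile is an assumption here, since the article does not state which method was used for the manual check. The class and method names are hypothetical.

```java
import java.util.Arrays;

/** Illustrative sketch; nearest-rank is an assumed percentile definition. */
final class PercentileSketch {

    /** The 23 UC56 response times recorded during 10 minutes. */
    static final double[] UC56 = {
        0.008, 0.009, 0.009, 0.01, 0.007, 0.009, 0.017, 0.007,
        0.008, 0.008, 0.015, 0.009, 0.009, 0.009, 0.009, 0.009,
        0.009, 0.009, 0.008, 0.009, 0.009, 0.009, 0.008
    };

    /** Nearest-rank percentile: the value at rank ceil(p / 100 * n) of the sorted sample. */
    static double percentile(double[] values, double p) {
        double[] sorted = values.clone();
        Arrays.sort(sorted);
        int rank = (int) Math.ceil(p / 100.0 * sorted.length);
        return sorted[Math.max(rank, 1) - 1];
    }

    public static void main(String[] args) {
        // ceil(0.98 * 23) = 23, so the result is the largest recorded value.
        System.out.println(percentile(UC56, 98)); // prints 0.017
    }
}
```

Under this definition the result is always one of the recorded values, and 0.013 is not among them.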

In LoadRunner Analysis, we got 0.013. This value does not correspond to the 98th percentile of the recorded values:

According to the LoadRunner Analysis documentation, the software applies granularity to the Summary Report. LoadRunner Analysis fits the values to its own set of granularity values, which distorts the data.
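Why aggregating by granularity can lower a percentile is easy to show on synthetic data. The Java sketch below is not LoadRunner's actual algorithm, which is not described here. It assumes that samples inside each granularity interval are averaged before the percentile is taken, and all names and numbers are made up for illustration.

```java
import java.util.Arrays;

/** Illustrative sketch on synthetic data; not LoadRunner's real aggregation logic. */
final class GranularitySketch {

    /** Nearest-rank percentile (an assumed definition). */
    static double percentile(double[] values, double p) {
        double[] sorted = values.clone();
        Arrays.sort(sorted);
        int rank = (int) Math.ceil(p / 100.0 * sorted.length);
        return sorted[Math.max(rank, 1) - 1];
    }

    /** Replaces every run of bucketSize consecutive samples with its average. */
    static double[] averageByBucket(double[] samples, int bucketSize) {
        int buckets = (samples.length + bucketSize - 1) / bucketSize;
        double[] averages = new double[buckets];
        for (int b = 0; b < buckets; b++) {
            int from = b * bucketSize;
            int to = Math.min(from + bucketSize, samples.length);
            double sum = 0;
            for (int i = from; i < to; i++) {
                sum += samples[i];
            }
            averages[b] = sum / (to - from);
        }
        return averages;
    }

    /** One sample per second: 0.01 s normally, a 2.0 s spike on every 10th sample. */
    static double[] syntheticSamples(int n) {
        double[] samples = new double[n];
        for (int i = 0; i < n; i++) {
            samples[i] = (i % 10 == 9) ? 2.0 : 0.01;
        }
        return samples;
    }

    public static void main(String[] args) {
        double[] raw = syntheticSamples(100);
        System.out.println("raw p98:           " + percentile(raw, 98));
        System.out.println("10 s averages p98: " + percentile(averageByBucket(raw, 10), 98));
    }
}
```

On the raw samples the 98th percentile is the 2.0-second spike, while after averaging into 10-second buckets it drops to roughly 0.209 seconds, because every spike is diluted by the nine fast samples sharing its bucket.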

LoadRunner Analysis Summary data only. The root of the problem

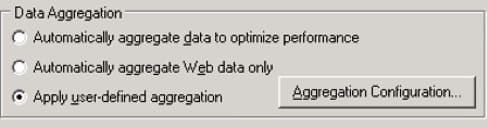

Those who have worked with LoadRunner Analysis know about the granularity parameter. Many users expect it to affect only how graphs are drawn. Unfortunately, it doesn’t work this way.

The global granularity value determines the precision of data aggregation for the Summary Report, and the graphs are generated from the Summary Report. A local filter sets the granularity of an individual graph, and that value can’t be smaller than the global one.

We’ve checked how granularity influences the Summary Report:

After the session had been generated and analyzed, its 98th-percentile results turned out to be identical to those of Grafana+InfluxDB. We’ve also verified the data for another test at the 20%, 100%, and 200% levels.

Surprisingly, the root cause of the problem was the granularity. The disabled options show which granularities have already been used to aggregate your data in the Summary table.

Correct Configuration for LoadRunner Analysis and Grafana+InfluxDB

LoadRunner Analysis

Please use the following steps to perform the global configuration before a new session is created:

All the settings described above need to be applied before you open the new results in LoadRunner Analysis, i.e., before performing the steps from the “Using LoadRunner Analysis” section.

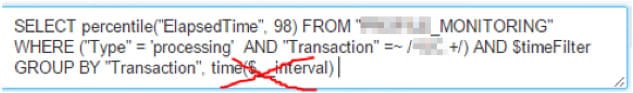

Grafana+InfluxDB

Don’t group data by time interval in order to ensure precise values in the tables:

Comparison

There is a slight difference in the results, but it is, in our opinion, insignificant and doesn’t influence the conclusions.

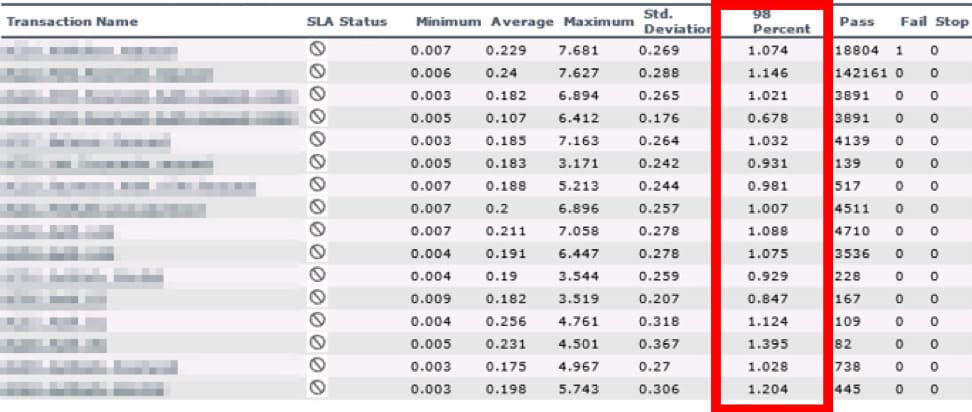

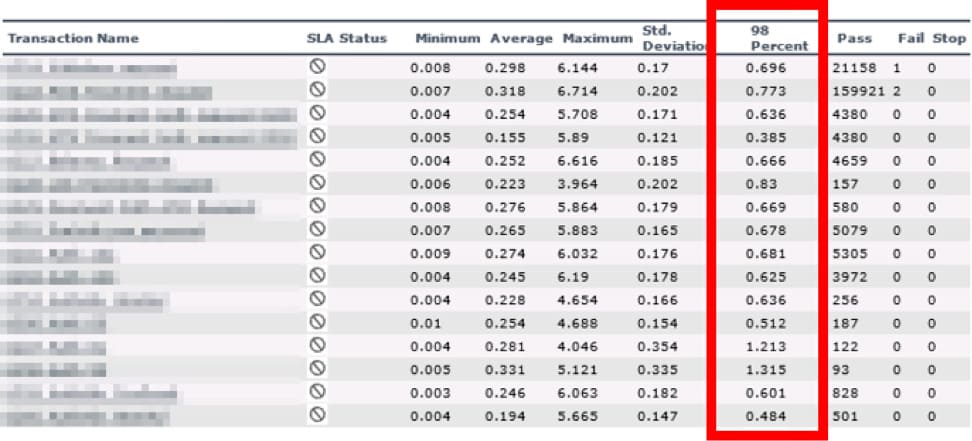

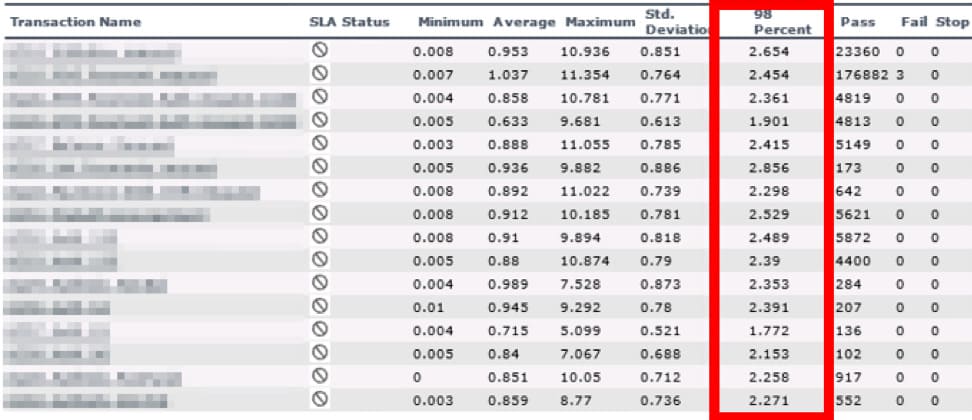

Below, we compare the 98th-percentile response time without granularity-based aggregation in LoadRunner Analysis and without GROUP BY time($__interval) in Grafana+InfluxDB at the 20%, 100%, and 200% levels.

Level 20 %

Level 100 %

Level 200 %

P.S. For whom the data distortion by LoadRunner Analysis can be dangerous

As we’ve discovered, data aggregation is enabled by default in LoadRunner Analysis. If your systems are not this heavily loaded, you have probably not noticed the problems described above. Still, having read this article, you should double-check the correctness of your test results.

One might assume that if an operation runs less often than once per granularity interval, its data will not be distorted. But that’s just a hypothesis. When several users work in parallel, some transactions may still fall into the same granularity interval during the test. In that case the data gets aggregated, which can influence the results. We have not yet researched how the two approaches depend on TPS; we plan to do so in the future.
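One way to check this hypothesis on your own data is to count how many granularity intervals contain more than one transaction. The Java sketch below only illustrates the idea. The timestamps are made up, and the class and method names are hypothetical.

```java
import java.util.HashMap;
import java.util.Map;

/** Illustrative sketch; timestamps are non-negative milliseconds since test start. */
final class BucketCollisionSketch {

    /** Counts granularity intervals that contain more than one transaction. */
    static int bucketsWithSeveralSamples(long[] timestampsMs, long granularityMs) {
        Map<Long, Integer> perBucket = new HashMap<>();
        for (long t : timestampsMs) {
            perBucket.merge(t / granularityMs, 1, Integer::sum);
        }
        int crowded = 0;
        for (int count : perBucket.values()) {
            if (count > 1) {
                crowded++;
            }
        }
        return crowded;
    }

    public static void main(String[] args) {
        // Hypothetical data: two Vusers, one transaction every 30 s each,
        // with the second Vuser started 0.4 s after the first.
        long[] timestamps = {0, 400, 30_000, 30_400, 60_000, 60_400};
        System.out.println(bucketsWithSeveralSamples(timestamps, 1_000)); // 1 s granularity
        System.out.println(bucketsWithSeveralSamples(timestamps, 100));   // 0.1 s granularity
    }
}
```

In this made-up case each Vuser produces only 2 transactions per minute, yet with a 1-second granularity every pair lands in the same interval, so a low TPS alone does not rule out aggregation.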

How to determine granularity for a saved session.

Conclusion

Grafana+InfluxDB displays the preliminary results online, so we don’t need to wait for 24 hours.

We’ve found out what distorted the test results.

The more operations there are and the more their values vary, the stronger the distortion. Granularity is meant only for producing readable graphs and should not be used when precise results are needed.
