gRPC (short for gRPC Remote Procedure Calls) is a modern open-source framework, originally developed at Google, designed to make communication between services seamless and efficient. By leveraging Protocol Buffers (Protobuf) and HTTP/2, gRPC enables robust, schema-driven, and language-agnostic communication, making it a preferred choice in distributed systems.

In this article, we’ll dive deep into the world of gRPC, exploring its architecture, how it works, and the problems it solves.

We’ll also cover what gRPC means, its key benefits, challenges, and practical applications, with insights into how your team can approach gRPC performance testing and scalability.

Whether you’re a developer, architect, or just someone eager to understand how gRPC can reshape service-to-service communication, this guide has you covered!

What is gRPC: Overview

In simple terms, gRPC allows programs running on different computers to interact as if they were running locally. At its core, gRPC relies on HTTP/2 for transport and Protocol Buffers (Protobuf) for defining the structure of messages, ensuring fast, lightweight communication.

This framework is especially significant in the world of distributed systems, where microservices, APIs, and real-time communication are becoming the standard. gRPC’s support for multiple programming languages and its bidirectional streaming capabilities position it as a powerful tool for building scalable and efficient systems. It simplifies inter-service communication by providing strongly typed contracts and high-performance data serialization.

From enabling service-to-service communication in microservices architectures to powering real-time applications, gRPC has redefined how modern systems communicate.

How Does gRPC Work?

Unlike REST-style APIs, which typically exchange text-based JSON over HTTP/1.1, gRPC operates on a schema-driven framework that uses Protocol Buffers (Protobuf) for data serialization and HTTP/2 as its transport protocol. This combination allows gRPC to deliver efficient, low-latency communication.

gRPC explained:

To make it easier to understand how gRPC actually works, let’s walk through the process step by step:

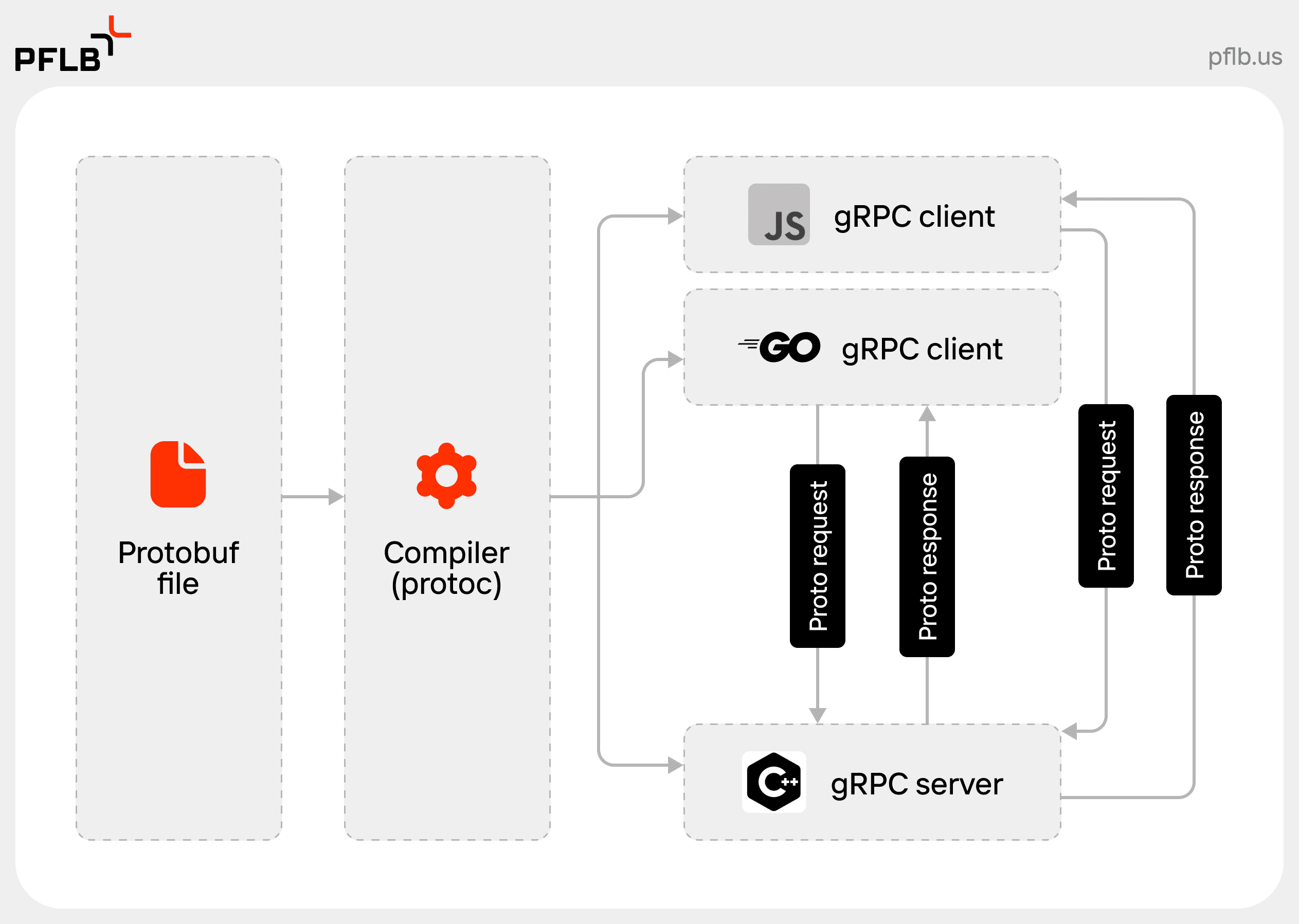

Defining the Service with Protobuf

The process begins with defining the communication contract. Developers create a .proto file using Protocol Buffers (Protobuf), a language-neutral serialization framework. This file outlines the structure of the API, including the service methods and the format of messages exchanged between the client and server. It defines the rules—how requests should be formatted, what data types are expected, and what responses will look like.

For example, a .proto file may describe an operation to fetch user details, detailing the input (like a user ID) and output (user information). This clear contract ensures both client and server are on the same page.
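As an illustration, the contract for the user-details example might look like the sketch below. The service, message, and field names (`UserService`, `GetUser`, `user_id`, and so on) are hypothetical and not taken from any real API. The definition is held in a Python string so that a few lines of standard-library code can list the methods it declares; a real project would keep it in a `.proto` file and pass it to `protoc` instead.

```python
import re

# Hypothetical contract for the "fetch user details" example.
USER_PROTO = """
syntax = "proto3";

package example.users;

// Input: the ID of the user to look up.
message GetUserRequest {
  int64 user_id = 1;
}

// Output: the user's details.
message GetUserResponse {
  int64 user_id = 1;
  string name = 2;
  string email = 3;
}

service UserService {
  // Unary call: one request in, one response out.
  rpc GetUser (GetUserRequest) returns (GetUserResponse);
}
"""

# Naive pattern for unary "rpc Name (Request) returns (Response);" lines.
# It is only for illustration and is not a real .proto parser.
RPC_PATTERN = re.compile(r"rpc\s+(\w+)\s*\((\w+)\)\s*returns\s*\((\w+)\)")


def list_rpc_methods(proto_text):
    """Return (method, request_type, response_type) tuples found in the text."""
    return RPC_PATTERN.findall(proto_text)


for method, request_type, response_type in list_rpc_methods(USER_PROTO):
    print(f"{method}: {request_type} -> {response_type}")
```

The numbers after each field (`= 1`, `= 2`) are field tags, which identify the field in the binary encoding, so they should stay stable once clients depend on them.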

Code Generation with protoc

Once the .proto file is ready, the Protocol Buffers compiler, commonly referred to as protoc, processes it to generate code for both the client and the server. This generated code acts as a foundation, eliminating much of the manual work typically required for service-to-service communication.

The client-side code includes stubs or methods that allow developers to call remote services as if they were local functions.

On the server side, protoc generates base classes that developers can implement or override to define the service’s functionality. This automation not only speeds up development but also ensures consistency across different languages and platforms.
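The shape of that generated code can be sketched in plain Python. The classes below are hand-written imitations for illustration, not actual `protoc` output: `UserServiceServicer`, `UserServiceStub`, and `InMemoryUserService` are hypothetical names, and the "transport" is a direct method call rather than a network channel.

```python
class UserServiceServicer:
    """Imitation of a generated server base class: the method exists but does nothing."""

    def GetUser(self, request):
        raise NotImplementedError("GetUser is not implemented")


class InMemoryUserService(UserServiceServicer):
    """Developer-written subclass that overrides the base method with real logic."""

    def __init__(self, users):
        self._users = users

    def GetUser(self, request):
        user_id = request["user_id"]
        return {"user_id": user_id, "name": self._users[user_id]}


class UserServiceStub:
    """Imitation of a generated client stub: exposes remote methods as local ones."""

    def __init__(self, transport):
        # In real gRPC this would be a channel; here it is any object with GetUser.
        self._transport = transport

    def GetUser(self, request):
        return self._transport.GetUser(request)


stub = UserServiceStub(InMemoryUserService({7: "Ada"}))
print(stub.GetUser({"user_id": 7}))
```

The point of the split is that the developer writes only `InMemoryUserService`; the base class and the stub would come from the code generator.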

Establishing Communication

gRPC relies on HTTP/2 as its transport protocol, which brings several advantages over traditional HTTP/1.1. HTTP/2 supports multiplexing, meaning multiple requests and responses can be sent over a single connection without waiting for each to complete. This reduces latency and improves efficiency, particularly in real-time scenarios.

Additionally, HTTP/2 provides built-in support for bidirectional streaming, making it possible for the client and server to send data to each other simultaneously—a feature that sets gRPC apart from older protocols.
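The idea behind multiplexing can be sketched with a small simulation. This is not an HTTP/2 implementation: the `asyncio.Queue` merely stands in for a single connection, and the function names and payloads are hypothetical. Each frame carries a stream ID, so frames from different calls can share the connection and still be reassembled on the other side.

```python
import asyncio


async def send_stream(connection, stream_id, chunks):
    """Write one logical stream's frames onto the shared connection."""
    for chunk in chunks:
        await connection.put((stream_id, chunk))
        await asyncio.sleep(0)  # yield so other streams can interleave their frames


async def main():
    connection = asyncio.Queue()  # stands in for a single TCP connection
    # Client-initiated HTTP/2 streams use odd IDs, hence 1 and 3.
    streams = {1: ["a1", "a2", "a3"], 3: ["b1", "b2", "b3"]}

    # Both streams are sent concurrently over the same connection.
    await asyncio.gather(
        *(send_stream(connection, sid, chunks) for sid, chunks in streams.items())
    )

    # The receiver uses the stream ID on each frame to reassemble the messages.
    wire_order, reassembled = [], {}
    while not connection.empty():
        stream_id, chunk = connection.get_nowait()
        wire_order.append(stream_id)
        reassembled.setdefault(stream_id, []).append(chunk)
    return wire_order, reassembled


wire_order, reassembled = asyncio.run(main())
print("frame order on the wire:", wire_order)
print("reassembled per stream:", reassembled)
```

Neither stream has to finish before the other starts, which is the property the text describes as not "waiting for each to complete".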

Handling Requests and Responses

Once the communication is established, the interaction between client and server follows a straightforward pattern. On the client side, the developer uses the generated stub to call a service method as if it were a local function.

The stub handles the complexities of serializing the data into the format defined in the .proto file and sending it over the network. When the response arrives, the stub deserializes it into a format the application can easily understand.

On the server side, the base class generated by protoc is implemented with custom logic to handle the incoming request.

For example, if the request is to fetch user details, the server may query a database, retrieve the necessary data, and serialize it before sending it back to the client. The interaction is seamless, with both sides adhering to the rules defined in the .proto contract.
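The round trip described above can be sketched end to end in plain Python. Everything here is an assumption made for illustration: the byte layout built with `struct` is a stand-in and is not Protobuf, `USERS` replaces the database, and the "network" is a direct function call.

```python
import struct

# Stand-in wire formats (NOT Protobuf): a request is an 8-byte user ID,
# a response is an 8-byte user ID followed by a UTF-8 name.


def encode_request(user_id):
    return struct.pack(">q", user_id)


def decode_request(data):
    return struct.unpack(">q", data)[0]


def encode_response(user_id, name):
    return struct.pack(">q", user_id) + name.encode("utf-8")


def decode_response(data):
    return {
        "user_id": struct.unpack(">q", data[:8])[0],
        "name": data[8:].decode("utf-8"),
    }


USERS = {7: "Ada"}  # hypothetical stand-in for a database


def server_handle(request_bytes):
    """Server side: deserialize, run the business logic, serialize the reply."""
    user_id = decode_request(request_bytes)
    return encode_response(user_id, USERS[user_id])


def get_user(user_id, send=server_handle):
    """Client stub: looks like a local call but exchanges only bytes with the server."""
    return decode_response(send(encode_request(user_id)))


print(get_user(7))
```

The caller of `get_user` never sees bytes, which mirrors how a generated stub hides serialization from application code.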

Exploring gRPC Types

One of gRPC’s standout features is its support for multiple communication patterns, enabling flexibility based on the use case. For simple tasks, unary RPC involves sending a single request and receiving a single response.

For scenarios requiring the server to provide multiple updates for a single request, server streaming RPC comes into play. In contrast, client streaming RPC allows the client to send a series of requests, with the server responding once all data is received.

Finally, bidirectional streaming RPC facilitates two-way data streams, where both client and server can continuously send and receive information, making it perfect for real-time applications like chat systems or IoT.

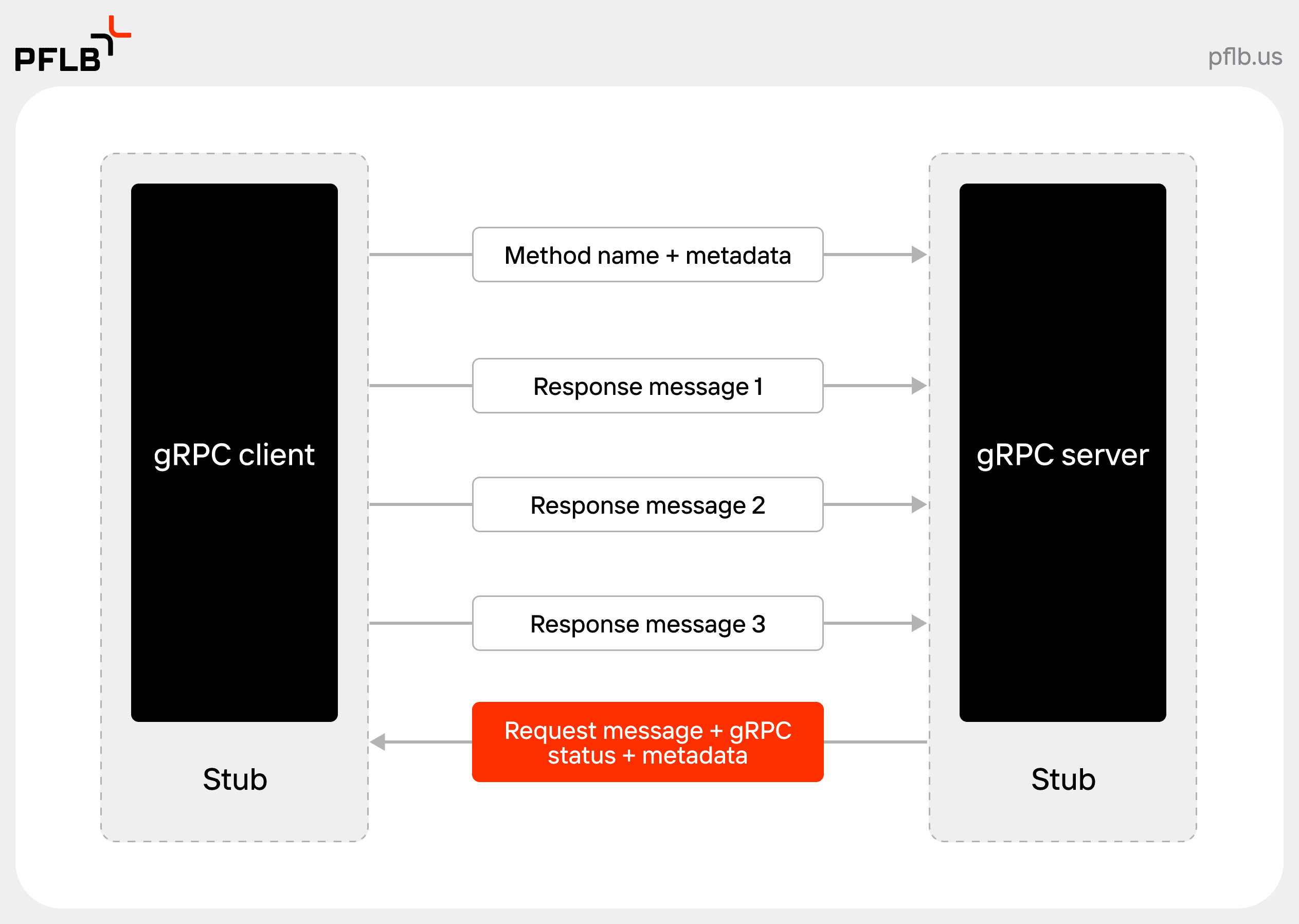

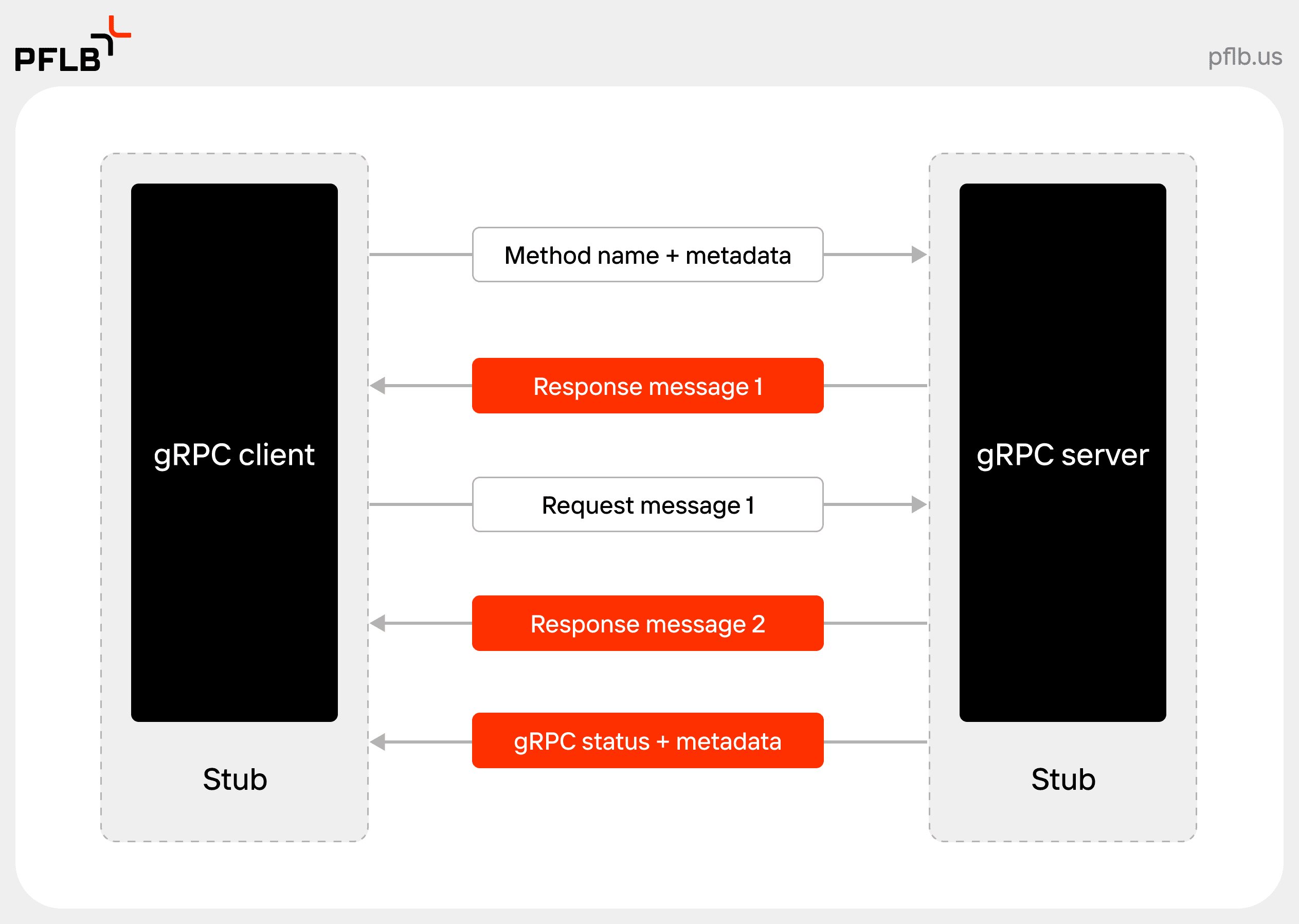

gRPC diagram:

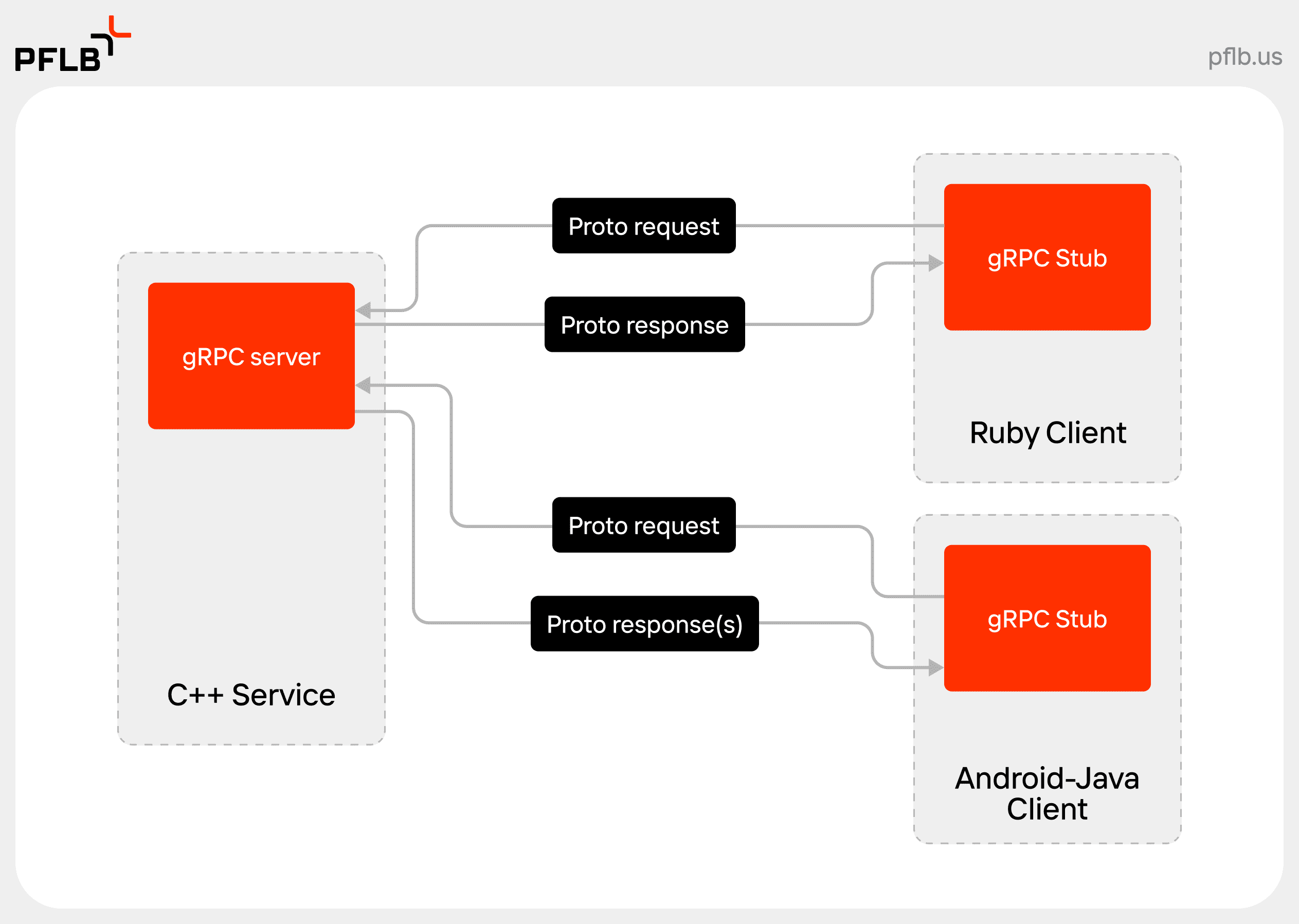

Core gRPC Architecture

The architecture of gRPC is built around the principles of simplicity, performance, and interoperability, allowing it to efficiently support communication in distributed systems.

At its core, gRPC leverages several key components that work together to provide a seamless Remote Procedure Call (RPC) framework.

Understanding these components helps clarify how gRPC achieves its high performance and versatility in service-to-service communication.

Protocol Buffers

Protocol Buffers (Protobuf) serve as the foundation of gRPC’s data serialization and structure definition. Protobuf is a language-neutral and platform-independent mechanism that defines the format of the messages exchanged between client and server. A .proto file specifies the structure of these messages and service methods.

The Protobuf compiler (protoc) generates code for multiple programming languages based on this file, ensuring consistency across different environments. By using Protobuf, gRPC achieves lightweight, compact data serialization, reducing message sizes and improving transmission speed, especially in network-constrained environments.
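To give a sense of why the encoding is compact, here is a minimal sketch of how Protobuf writes a single integer field on the wire, using its base-128 "varint" scheme. The helper names are hypothetical and the code handles only non-negative integers; real applications would rely on generated code rather than encoding by hand.

```python
def encode_varint(value):
    """Encode a non-negative integer as a Protobuf base-128 varint."""
    if value < 0:
        raise ValueError("this sketch handles non-negative integers only")
    out = bytearray()
    while True:
        low_bits = value & 0x7F
        value >>= 7
        if value:
            out.append(low_bits | 0x80)  # more bytes follow: set the continuation bit
        else:
            out.append(low_bits)
            return bytes(out)


def encode_varint_field(field_number, value):
    """Encode one varint-typed field: tag (field number and wire type 0), then value."""
    tag = (field_number << 3) | 0
    return encode_varint(tag) + encode_varint(value)


encoded = encode_varint_field(1, 150)
print(encoded.hex())  # 089601
```

Field 1 with the value 150 takes three bytes in total, and the field name itself never appears on the wire, only its number.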

HTTP/2

gRPC uses HTTP/2 as its transport protocol, which brings advanced capabilities that traditional HTTP/1.1 lacks. HTTP/2 supports multiplexing, allowing multiple requests and responses to be sent simultaneously over a single TCP connection. This eliminates the overhead of establishing separate connections for each RPC, reducing latency.

HTTP/2 also provides features like flow control, header compression, and built-in support for bidirectional streaming. These features enable gRPC to handle diverse communication patterns efficiently, such as real-time data transfer and continuous updates.

Channel

A channel in gRPC represents the abstraction of a long-lived connection between the client and server. The channel handles the underlying network communication, including setting up and managing connections, multiplexing requests, and ensuring proper use of HTTP/2 features.

Channels also allow for advanced configurations, such as load balancing and retry policies, making them a critical component for scalability and fault tolerance in distributed systems. Developers can reuse a single channel to make multiple RPC calls, further enhancing efficiency.

Stub

The stub, also known as the client stub or proxy, is the component generated from the .proto file that resides on the client side. It provides an interface for the client to interact with the server as though the methods are local functions.

When a client makes a method call using the stub, it handles serializing the request data into Protobuf format, sending it over the network through the channel, and deserializing the response from the server. This abstraction simplifies the complexities of RPC communication, allowing developers to focus on application logic rather than low-level networking details.

Interface

A gRPC interface is defined using Protobuf files, where developers specify services and methods. These interfaces act as a contract, ensuring consistent communication between client and server.

4 gRPC Methods

gRPC supports four core types of service methods, enabling flexible and efficient communication patterns tailored to specific use cases. These methods differ in how data flows between the client and server, providing options for simple requests, real-time updates, and streaming data.

Here’s a breakdown of each method type with examples of how they are applied in real-world scenarios.

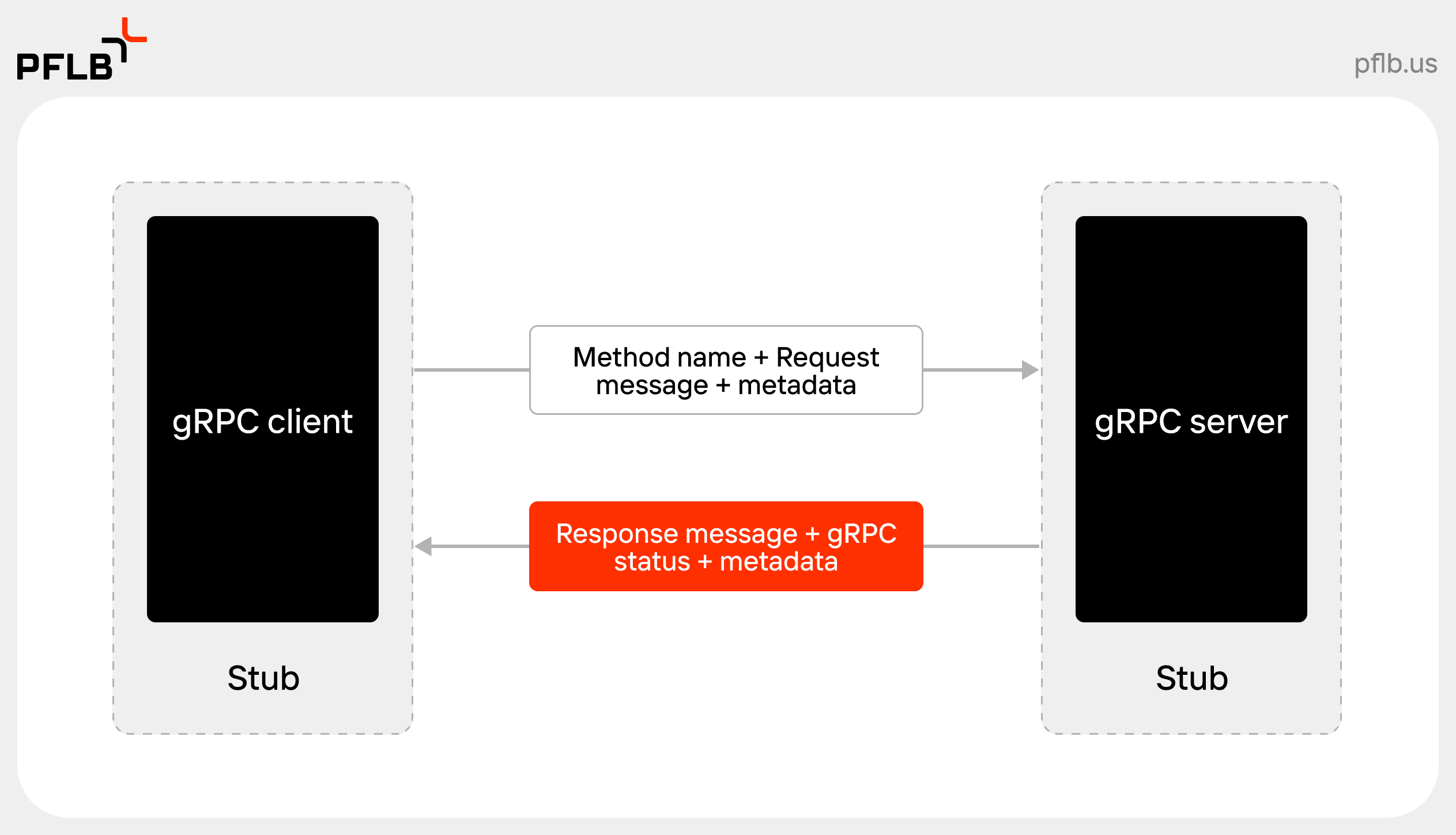

1. Unary RPC

Unary RPC is the simplest form of gRPC communication. The client sends a single request to the server and receives a single response in return.

How it works:

Advantages:

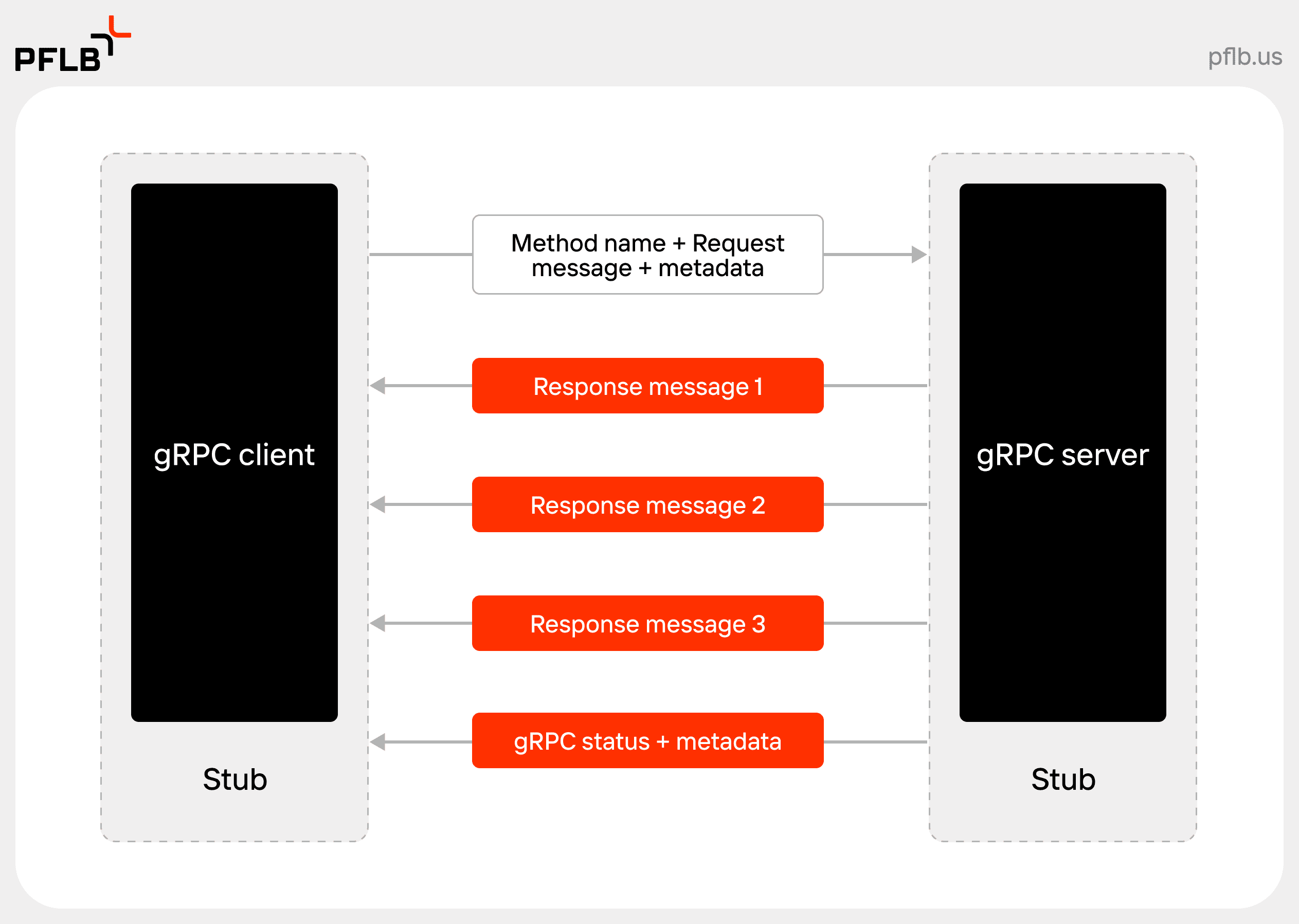

2. Server Streaming RPC

With server streaming RPC, the client sends a single request, but the server sends back a stream of responses over time. The client reads the responses sequentially as they arrive.
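A Python generator gives a rough feel for this pattern. The sketch below is a simulation, not the gRPC API; the `watch_prices` handler, the `ACME` symbol, and the request fields are hypothetical.

```python
def watch_prices(request):
    """Server-streaming handler: one request in, a sequence of responses out."""
    price = request["start_price"]
    for step in request["steps"]:
        price += step
        yield {"symbol": request["symbol"], "price": price}


request = {"symbol": "ACME", "start_price": 100, "steps": [1, -2, 3]}

# The client reads the responses one at a time, in the order they were produced.
for update in watch_prices(request):
    print(update)
```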

How it works:

Advantages:

3. Client Streaming RPC

In client streaming RPC, the client sends a stream of requests to the server. The server processes these requests and sends back a single consolidated response.
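As a simulation of this pattern (again plain Python, not the gRPC API), the hypothetical handler below consumes an iterator of sensor readings and replies once with a summary.

```python
def record_readings(readings):
    """Client-streaming handler: consume every request, then reply once."""
    count, total = 0, 0.0
    for reading in readings:
        count += 1
        total += reading["celsius"]
    average = total / count if count else None
    return {"count": count, "average_celsius": average}


def sensor_stream():
    """Client side: produce the stream of requests lazily."""
    for value in (20.0, 22.0, 24.0):
        yield {"celsius": value}


summary = record_readings(sensor_stream())
print(summary)
```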

How it works:

Advantages:

4. Bidirectional Streaming RPC

Bidirectional streaming RPC allows both the client and server to send a stream of messages to each other independently. This two-way communication happens concurrently, making it ideal for real-time, interactive applications.
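The sketch below simulates the pattern with a generator that consumes one stream while producing another. It is plain Python with hypothetical names, and it is simplified: here each reply follows its message in lockstep, whereas in real gRPC the two streams are independent and either side may send at any time.

```python
def chat(incoming_messages):
    """Bidirectional handler: reply to each message as soon as it arrives."""
    for message in incoming_messages:
        yield f"ack: {message}"


events = []


def client_messages():
    """Client side: record each send so the interleaving is visible."""
    for text in ["hello", "how are you?"]:
        events.append(f"client sent {text!r}")
        yield text


for reply in chat(client_messages()):
    events.append(f"client received {reply!r}")

print("\n".join(events))
```

The event log shows the first reply arriving before the second message is sent, so neither side waits for the other's stream to end.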

How it works:

Advantages:

Key Features of gRPC

gRPC is a robust framework packed with advanced features that make it highly efficient and versatile for modern distributed systems. These features enable developers to create scalable, high-performance applications while simplifying complex communication processes.

Metadata

gRPC allows the inclusion of metadata in RPC calls, enabling clients and servers to exchange additional information along with requests and responses. Metadata can carry authentication tokens, tracing information, or custom headers, making it easier to implement advanced functionalities like security and monitoring.
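Conceptually, metadata is a set of key-value pairs that travels alongside the request. The following sketch simulates that in plain Python; the handler, the token value, and the `x-trace-id` key are hypothetical, and the dispatch function is a stand-in for the framework.

```python
def call_with_metadata(handler, request, metadata):
    """Deliver the request plus its metadata to the handler, as a call would."""
    return handler(request, dict(metadata))


def get_report(request, metadata):
    """Handler that reads an auth token and a trace ID from the call metadata."""
    if metadata.get("authorization") != "Bearer secret-token":
        return {"status": "UNAUTHENTICATED"}
    return {
        "status": "OK",
        "report_id": request["report_id"],
        "trace_id": metadata.get("x-trace-id"),
    }


metadata = [("authorization", "Bearer secret-token"), ("x-trace-id", "trace-123")]
print(call_with_metadata(get_report, {"report_id": 9}, metadata))
print(call_with_metadata(get_report, {"report_id": 9}, []))
```

Keeping the token and trace ID out of the request message means the message schema stays focused on business data.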

Synchronous and Asynchronous Calls

gRPC supports both synchronous (blocking) and asynchronous (non-blocking) calls. Developers can choose synchronous calls for straightforward operations where the client waits for a response or asynchronous calls for concurrent processing, enhancing application efficiency.
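The difference between the two styles can be sketched with the standard library. `slow_rpc` is a hypothetical stand-in for a remote call, and the thread pool only imitates what a non-blocking client would do; it is not the gRPC API.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def slow_rpc(request):
    """Stand-in for a remote call that takes a little while to answer."""
    time.sleep(0.05)
    return request * 2


# Synchronous style: each call blocks until its response arrives.
sync_results = [slow_rpc(n) for n in (1, 2, 3)]

# Asynchronous style: start all calls first, then collect the responses.
with ThreadPoolExecutor(max_workers=3) as pool:
    futures = [pool.submit(slow_rpc, n) for n in (1, 2, 3)]
    async_results = [future.result() for future in futures]

print(sync_results, async_results)
```

Both styles return the same results; the asynchronous version simply lets the three waits overlap instead of running back to back.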

Channels

Channels represent the communication pathway between a client and a server. They manage the connection lifecycle, including creating, reusing, and closing connections. Channels also provide options for configurations like load balancing, retries, and secure communication using TLS.

Interceptors

Interceptors are middleware components in gRPC that enable developers to intercept and manipulate RPC calls. They are useful for tasks like logging, metrics collection, error handling, or injecting custom behaviors before or after an RPC operation.
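In spirit, an interceptor is a wrapper around a call. The sketch below shows the idea in plain Python with hypothetical names; it does not use gRPC's own interceptor interfaces, which differ between languages.

```python
import time


def logging_interceptor(handler, log):
    """Wrap a handler so every call is recorded before and after it runs."""

    def intercepted(request):
        log.append(f"start {handler.__name__}")
        started = time.perf_counter()
        try:
            return handler(request)
        finally:
            # Runs whether the handler returned or raised.
            elapsed_ms = (time.perf_counter() - started) * 1000
            log.append(f"end {handler.__name__} ({elapsed_ms:.2f} ms)")

    return intercepted


def get_user(request):
    return {"user_id": request["user_id"], "name": "Ada"}


call_log = []
get_user_logged = logging_interceptor(get_user, call_log)
print(get_user_logged({"user_id": 7}))
print(call_log)
```

Because the wrapper knows nothing about `get_user` itself, the same interceptor could be applied to any handler, which is what makes the pattern useful for logging and metrics.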

Protocol Buffers (Protobuf)

As the backbone of gRPC, Protocol Buffers provide efficient data serialization. Protobuf ensures that messages exchanged between client and server are compact, lightweight, and easy to parse, reducing bandwidth usage and improving communication speed.

HTTP/2 Support

gRPC leverages HTTP/2 as its underlying transport protocol, offering features like multiplexing (multiple streams over a single connection), header compression, and support for bidirectional communication. This makes it highly efficient for real-time applications.

Streaming Capabilities

With support for client streaming, server streaming, and bidirectional streaming, gRPC is well-suited for scenarios that require continuous data exchange, such as real-time updates, live dashboards, and interactive applications.

Language-Agnostic API

gRPC is designed to work seamlessly across various programming languages, thanks to Protobuf’s ability to generate code for multiple platforms. This makes gRPC an ideal choice for polyglot environments where services are developed in different languages.

Built-In Authentication and Security

gRPC supports secure communication through TLS and integrates with authentication mechanisms like OAuth2. This ensures data integrity and confidentiality during transmission.

Automatic Code Generation

By processing .proto files with the Protobuf compiler, gRPC automates the generation of boilerplate client and server code, reducing development effort and potential for errors.

Lightweight and High-Performance

gRPC’s focus on minimal message size and efficient serialization/deserialization enables faster data exchange, making it a high-performance solution for applications requiring low latency and high throughput.

gRPC Benefits

gRPC is a modern communication framework that brings numerous advantages to developers building distributed systems. Its design focuses on efficiency, flexibility, and performance, making it a top choice for many applications. Here are the key benefits of gRPC:

| Benefit | Description |
| --- | --- |
| Efficiency | Compact messages with low latency. |
| Language Support | Works across multiple programming languages. |
| Smaller Message Size | Reduces bandwidth with Protobuf. |
| Faster Communication | Quick data exchange via HTTP/2. |
| Performance | 7-10x faster than REST for data exchange. |
| Code Generation | Automates client-server code creation. |
| Built-In Security | Ensures safe communication with TLS. |
| Scalability | Handles high loads efficiently. |
| Real-Time Communication | Ideal for instant data exchange. |

Efficiency

gRPC’s reliance on Protocol Buffers (Protobuf) for data serialization ensures compact message sizes. This efficiency reduces bandwidth consumption and speeds up data transmission, making it ideal for environments with limited resources or high traffic. Additionally, gRPC’s use of HTTP/2 enables multiplexing, reducing latency and improving throughput.

Language-Agnosticism

One of gRPC’s strongest features is its language-neutral design. By generating code in multiple programming languages from a single .proto file, gRPC allows seamless communication across services built with different technologies. This makes it particularly valuable in polyglot environments and for teams using diverse tech stacks.

Smaller Message Size

With Protobuf, gRPC minimizes the size of transmitted messages compared to traditional formats like JSON or XML. This compactness not only improves performance but also reduces the load on network infrastructure, which is crucial for mobile applications or systems operating in bandwidth-constrained environments.
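A rough size comparison for one small record is sketched below. The record and its field numbers are hypothetical, and the Protobuf bytes are hand-encoded for illustration (a varint field and a length-delimited string field); the exact savings in practice depend on the data.

```python
import json


def encode_varint(value):
    """Base-128 varint for non-negative integers, as used on the Protobuf wire."""
    out = bytearray()
    while value > 0x7F:
        out.append((value & 0x7F) | 0x80)
        value >>= 7
    out.append(value)
    return bytes(out)


def encode_user(user_id, name):
    """Hand-encode {user_id = 1 (varint), name = 2 (length-delimited string)}."""
    name_bytes = name.encode("utf-8")
    return (
        encode_varint((1 << 3) | 0) + encode_varint(user_id)
        + encode_varint((2 << 3) | 2) + encode_varint(len(name_bytes)) + name_bytes
    )


user = {"user_id": 42, "name": "Ada"}
json_bytes = json.dumps(user, separators=(",", ":")).encode("utf-8")
proto_bytes = encode_user(user["user_id"], user["name"])

print("JSON bytes:", len(json_bytes))
print("Protobuf bytes:", len(proto_bytes))
```

Most of the difference comes from JSON repeating the field names as text in every message, while the binary form carries only small numeric tags.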

Faster Communication

gRPC’s combination of HTTP/2 and Protobuf results in faster data exchange. HTTP/2 supports features like multiplexing and header compression, while Protobuf accelerates serialization and deserialization. This speed makes gRPC particularly suitable for real-time applications such as streaming platforms or live data feeds.

Performance

gRPC is often significantly faster than traditional REST APIs. Some published tests report it as roughly 7 times faster when receiving data and 10 times faster when sending data, a result attributed to Protobuf’s tight packing and HTTP/2’s advanced capabilities. Actual gains depend on payload size and workload, so this boost matters most for applications where speed and efficiency are paramount.

To evaluate the performance of gRPC, check out PFLB’s gRPC load testing tool, or learn more about how to do gRPC performance testing.

Code Generation

By using .proto files, gRPC automates the creation of boilerplate code for both client and server implementations. This significantly reduces development time, ensures consistency across platforms, and eliminates the need for manual serialization or deserialization logic. It allows developers to focus more on business logic rather than repetitive infrastructure tasks.

Built-In Security

gRPC supports TLS (Transport Layer Security) for encrypted communication, ensuring that data transmitted between client and server is secure. Its compatibility with authentication mechanisms like OAuth2 enhances security for applications requiring user authentication or sensitive data handling.

Scalability

gRPC’s efficient communication patterns and support for multiplexing make it highly scalable. It can handle high loads with minimal resource consumption, making it an excellent fit for microservices architectures and large-scale distributed systems.

Real-Time Communication

The low latency and bidirectional streaming capabilities of gRPC make it well-suited for real-time communication use cases. Applications like live video streaming, multiplayer gaming, or collaborative tools benefit from its ability to deliver near-instantaneous data exchange.

Drawbacks of gRPC

While gRPC offers numerous benefits, it’s not without its challenges. Understanding these drawbacks is crucial for determining if gRPC is the right fit for your project.

| Drawback | Description |
| --- | --- |
| Debugging Complexity | Protobuf messages are hard to inspect. |
| Learning Curve | Requires familiarity with gRPC concepts. |
| Limited Tooling | Fewer tools than REST APIs. |
| Firewall Issues | HTTP/2 may face compatibility problems. |
| No Native Browser Support | Needs gRPC-Web for frontend use. |

Debugging Complexity

gRPC’s reliance on HTTP/2 and Protobuf can make debugging more challenging compared to traditional REST APIs. The compact, binary-encoded messages of Protobuf are not human-readable, requiring specialized tools to inspect and troubleshoot communication issues.

Learning Curve

Adopting gRPC requires familiarity with concepts like Protocol Buffers, HTTP/2, and the framework’s unique communication patterns. For teams accustomed to REST APIs, this can involve a steep learning curve, particularly when implementing streaming or advanced features.

Limited Tooling

Compared to REST, which has extensive tooling support for testing and debugging, gRPC’s ecosystem is still evolving. While tools such as Postman now support gRPC, their gRPC features are less mature and comprehensive than those available for REST-based workflows.

Firewall Issues

gRPC’s use of HTTP/2 can lead to compatibility issues with firewalls and proxies that do not fully support the protocol. This can require additional configuration or workarounds, complicating deployment in certain network environments.

Lack of Native Browser Support

gRPC is not natively supported by browsers, which can complicate its use in frontend applications. While gRPC-Web exists as a workaround, it adds another layer of complexity and may not provide the full functionality of standard gRPC.

gRPC Use Cases

gRPC’s efficiency and versatility make it a go-to solution for various scenarios in distributed computing. Here are some of the most common use cases where gRPC excels.

What is gRPC used for:

Microservices Architectures

In a microservices architecture, individual services often need to communicate efficiently with each other. gRPC enables this by providing low-latency, language-agnostic communication. Its compact message size and support for multiple programming languages make it ideal for complex, polyglot environments.

Service-to-Service Communication

For systems where real-time or frequent communication between services is essential, gRPC offers high-speed and lightweight communication. Its use of HTTP/2 allows multiplexed connections, ensuring efficient data exchange between services.

Real-Time Applications

gRPC’s support for bidirectional streaming makes it perfect for real-time applications that require continuous data flows. This includes use cases like live chat systems, collaborative tools, or streaming analytics.

IoT Systems

IoT systems often involve a massive number of devices sending data to a central server. gRPC’s small message size and efficient serialization with Protobuf ensure minimal bandwidth consumption, making it a great fit for IoT environments.

APIs for Mobile and Cloud Applications

Mobile and cloud applications require fast and lightweight communication to ensure a seamless user experience. gRPC’s compact message sizes and efficient serialization make it an excellent choice for building APIs that serve mobile or cloud-based systems.

How PFLB Can Help Optimize gRPC Performance

PFLB specializes in performance testing, ensuring that your gRPC-based systems are efficient, scalable, and ready to handle real-world traffic. With a proven track record across industries, PFLB provides tailored solutions to address the unique challenges of gRPC load testing.

As a provider of one of the best gRPC load testing tools, PFLB has successfully executed a wide range of projects involving high-demand systems. Its experience spans multiple industries, from finance to IoT, where gRPC plays a critical role in enabling smooth and reliable communication between services.

Banking sector: PFLB partnered with a bank to performance test their complex system in two-week release cycles. The team tests functionality, identifies performance issues, and delivers reports, ensuring timely releases and system reliability under real-world conditions.

Learn more about it: Performance Testing for Massive Bank Systems: Our Experience

Energy sector: During storm-induced power outages, PFLB worked with a utility provider to ensure their systems could handle sudden surges in user demand, resulting in uninterrupted service.

Learn more about it: How Load Testing Helped Texans Survive Power Outages During a Storm

Retail industry: PFLB tested an integrated set of SAP systems for a leading retail chain with an omnichannel approach. By simulating real-world traffic, they identified and resolved issues such as database locking, ActiveMQ overflows, and a critical TSA patch error. The testing ensured optimized system performance and seamless customer experiences across sales and logistics operations.

Learn more about it: Load Testing SAP Systems for Retail Company

Tailored solutions for gRPC

PFLB’s gRPC load testing solutions are designed to simulate high traffic scenarios, allowing businesses to assess scalability, identify bottlenecks, and optimize performance.

Final Thought

gRPC is a game-changer for modern distributed systems. It’s fast, efficient, and works seamlessly across different platforms and languages, making it a favorite among developers.

With features like compact message sizes, streaming options, and strong security, gRPC is well-suited for building scalable and real-time applications.

Of course, like any tool, it has its pros and cons. Understanding when and how to use it is key. Teams that are still comparing protocols can review gRPC alternatives to see when REST or GraphQL might be a better fit.

Whether you’re working on microservices, APIs, or real-time systems, gRPC offers the flexibility and performance you need to create powerful, reliable applications.